Lauren E. Palmer, Bradley T. Erford

This study examined whether the level of ASCA National Model implementation predicts student outcomes. A total sampling of schools from two suburban school districts was conducted. Outcome variables were math and reading achievement scores, attendance rates and graduation rates. Level of program implementation significantly predicted achievement and attendance at the high school level, but not at the elementary or middle school level. Such outcome measures play a central role in promoting school counselors as an integral part of the educational process.

Keywords: ASCA national model, outcome variables, attendance, graduation rates, achievement scores

At a time when accountability is at the forefront of the school counseling profession, school counselors are required to present evidence that validates the effectiveness of their daily practices. The American School Counselor Association (ASCA) created a framework for implementing comprehensive developmental school counseling programs by specifying standards and competencies. But does the degree to which the ASCA National Model (2005) is implemented affect the ability of school counselors to meet student needs? Correlational and indirect research indicating positive effects of fully implemented school counseling programs suggests that it does (Brigman & Campbell, 2003; Carrell & Carrell, 2006; Lapan, Gysbers, & Kayson, 2007; Lapan, Gysbers, & Petroski, 2001; Lapan, Gysbers, & Sun, 1997; McGannon, Carey, & Dimmitt, 2005; Nelson, Gardner, & Fox, 1998; Sink, 2005; Sink & Stroh, 2003; Whiston & Wachter, 2008).

A focus on attaining the goals of a comprehensive program is essential to initiating systemic change and establishing the school counseling program as an integral part of the total educational process. School counselors develop and refine their roles to meet the diverse needs of students and the school community. Transitioning from a traditional guidance program, or from no program at all, to a comprehensive, developmental school counseling program is a demanding task, but one that is attainable through collaboration among school counselors and stakeholders. A program audit is a fundamental step in this process, both for evaluating a counseling program's current status and for establishing where the program aims to go in the future.

ASCA (2005) presented a standardized framework for creating a comprehensive school counseling program that supports the academic, career and personal/social development of students throughout their academic careers. According to ASCA, a school counseling program is comprehensive, preventative and developmental in nature. This framework provides school counselors with an all-inclusive approach to program foundation, delivery, management and accountability. Similar to the Education Trust (2009) and College Board (2009), ASCA promotes a new vision for the school counseling profession which reflects accountability, advocacy, leadership, collaboration, and systemic change within schools, positioning professional school counselors as essential contributors to student success.

The extant literature reveals much support for the positive benefits of school counseling programs for students. As a result of fully implemented school counseling programs, students enjoy higher grades (Lapan et al., 2001; Lapan et al., 1997), better school climate (Lapan et al., 1997), higher satisfaction with education (Lapan et al., 2001; Lapan et al., 1997), more relevant education (Lapan et al., 2001), higher ACT scores (Nelson et al., 1998), and greater access to more advanced math, science, technical and vocational courses (Nelson et al., 1998). Studies also have provided evidence of fewer classroom disruptions and improved peer behavior among students who participated in comprehensive school counseling programs (Brigman & Campbell, 2003; Lapan, 2001; Lapan et al., 2007; Lapan et al., 1997; Sink, 2005; Sink & Stroh, 2003).

The program audit is an evaluation tool used to determine the extent to which the components of a comprehensive program are implemented and to guide decisions about the future direction of a school counseling program. A program audit, or process evaluation, helps school counselors implement the standards and components of a comprehensive school counseling program and identify areas for improvement or enhancement (ASCA, 2005). ASCA has suggested that a program audit be completed annually to determine the strengths and weaknesses of a school counseling program with regard to the four main elements of the ASCA National Model: foundation, delivery system, management system and accountability. Specific criteria under each element are used to evaluate implementation of the school counseling program.

This study examined the prediction of student outcomes, including achievement scores, attendance and graduation rate, using level of implementation of the ASCA National Model (2005) as the predictor variable. It was hypothesized that level of program implementation would significantly predict student outcomes at each of the three school levels: elementary, middle and high school. The study also estimated internal consistency (coefficient alpha) for the ASCA Program Audit for the total sample and for each school level.

Method

Participants

A nonrandomized cluster sampling of two public school districts located in Maryland was conducted to select participants for the study. These two public school systems housed a total of 111 elementary schools, 30 middle schools, and 23 high schools for a total of 164 schools. Each participating school had at least one professional school counselor and a school counseling program in place. In the instances where multiple school counselors were assigned to schools, the data were provided by the guidance chair or lead counselor. School counselors from two alternative schools responded, but were eliminated from the sample due to dissimilarity with the traditional high schools and small sample size. Thus, a total of 78 (70%) elementary schools, 17 (57%) middle schools, and 18 (78%) high schools participated for a total sample of 113 schools (69%) within the two participating school districts.
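The participation percentages above are simple ratios of participating schools to eligible schools. As a quick check of that arithmetic, the Python sketch below recomputes them from the counts reported in this section; the dictionary name `SCHOOL_COUNTS` and the function `participation_rates` are illustrative only and are not part of the study's procedures.

```python
# (participating schools, total schools) by level, from the Participants section.
SCHOOL_COUNTS = {
    "elementary": (78, 111),
    "middle": (17, 30),
    "high": (18, 23),
}


def participation_rates(counts):
    """Return whole-number participation percentages by level and overall."""
    rates = {level: round(100 * n / total) for level, (n, total) in counts.items()}
    n_all = sum(n for n, _ in counts.values())
    total_all = sum(total for _, total in counts.values())
    rates["overall"] = round(100 * n_all / total_all)
    return rates


if __name__ == "__main__":
    for level, pct in participation_rates(SCHOOL_COUNTS).items():
        print(f"{level}: {pct}%")
```

The sketch yields 70%, 57%, 78% and 69% for the elementary, middle, high school and combined samples, agreeing with the percentages reported above.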

Instrument

The ASCA Program Audit (ASCA, 2005) served as the independent variable for this study. Completing the audit's 115 prompts takes approximately 30–45 minutes. Each prompt uses a Likert-type scale to evaluate a component of the counseling program along the continuum of “None” (meaning not in place), “In progress” (perhaps begun, but not completed), “Completed” (but perhaps not as yet implemented), “Implemented” (fully implemented), or “Not applicable” (for situations where the component does not apply). For the purposes of this study, these response choices were coded 0, 1, 2, 3 and 0, respectively. Once a program audit is completed, the information can be used to identify implementation strengths of the program, areas of the program that need strengthening, and short-range and long-range goals for improving implementation.
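The coding scheme above amounts to a lookup table followed by a sum, which is how the total audit score is formed in the Analysis section. The Python sketch below assumes each school's audit is available as a list of 115 response labels spelled exactly as on the audit form; the names `RESPONSE_CODES`, `N_PROMPTS` and `total_audit_score` are hypothetical.

```python
# Numeric codes used in this study for each audit response option.
# "Not applicable" is scored 0, the same as "None".
RESPONSE_CODES = {
    "None": 0,
    "In progress": 1,
    "Completed": 2,
    "Implemented": 3,
    "Not applicable": 0,
}

N_PROMPTS = 115  # number of prompts on the ASCA Program Audit


def total_audit_score(responses, n_prompts=N_PROMPTS):
    """Sum the coded responses for one school's completed audit."""
    if len(responses) != n_prompts:
        raise ValueError(f"expected {n_prompts} responses, got {len(responses)}")
    try:
        return sum(RESPONSE_CODES[r] for r in responses)
    except KeyError as err:
        raise ValueError(f"unrecognized response option: {err.args[0]!r}") from None


if __name__ == "__main__":
    example = ["In progress"] * 40 + ["Completed"] * 40 + ["Implemented"] * 35
    print(total_audit_score(example))  # 40*1 + 40*2 + 35*3 = 225
```

Because each prompt contributes at most 3 points, total scores under this scheme range from 0 to 345 (115 × 3). Coding “Not applicable” as 0 treats inapplicable components the same as absent ones, so schools that mark many components as not applicable receive lower totals.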

This is the first published study to use the complete ASCA Program Audit as an independent variable. One other study, a dissertation (Wong, 2008), used facets of the ASCA Program Audit as an independent variable. Wong constructed a survey modified from the ASCA Program Audit in a study designed to describe the relationship between comprehensive school counseling programs and school performance. Wong's regression analysis yielded a positive relationship and a predictive model linking these two variables, but reported no information about the psychometric characteristics of the scale. Internal consistency information for the current study's sample is provided in the Results section.

Procedures

Participants were selected through nonrandomized cluster sampling of two districts from among the 24 public school districts located in Maryland. Once IRB approval was received, letters were mailed over the summer and early in the academic year to school counselors of elementary, middle and high schools within each of the two selected districts. Involving school counselor supervisors in distributing and administering the study increased return rates of completed program audits. The school counselors of each participating school were provided with the program audit from the ASCA National Model (2005), a statement of the study's rationale and a consent form. The school counselors completed the program audit between June and February, with instructions to evaluate retrospectively the implementation of the school counseling program components at the end of the previous (2009–2010) academic year. Demographic data, graduation rates, attendance and scores from the Maryland State Assessment (MSA) for grades 5, 8 and 10 were obtained from the 2009–2010 Maryland Report Cards, retrieved from the Maryland State Department of Education website (http://mdreportcard.org/).

The dependent variable of achievement was measured using MSA reading and math scores and was operationally defined, separately for the reading and math components, as the percentage of students in a given grade who did not meet the passing criterion (i.e., the percentage of students receiving only basic scores). Lower values therefore indicate better achievement. The MSA is administered to students in grades 3–5 at the elementary level, grades 6–8 at the middle school level and grade 10 in high school. Fifth-grade, 8th-grade and 10th-grade scores (English and algebra) were used for these analyses, on the reasoning that these scores reflect the cumulative effect of prolonged exposure to the school's curriculum.

The dependent variable of attendance was defined as the average daily attendance percentage, including ungraded students in special education programs (Maryland State Department of Education [MSDE], 2010). The dependent variable of graduation rate was defined by MSDE as the percentage of students who received a Maryland high school diploma during the school year. More specifically, the graduation rate is calculated by “dividing the number of high school graduates by the sum of the dropouts for grades 9 through 12, respectively, in consecutive years, plus the number of high school graduates” (MSDE, 2010, para. 1). Because graduation rate and dropout rate were highly correlated in this sample (r = -.752, p < .001, n = 18), graduation rate was used in the analysis and dropout rate was excluded as redundant.
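The MSDE graduation-rate formula quoted above can be written as a short function. This is a sketch under an assumption about the quoted wording: that the four dropout counts are those recorded for grades 9, 10, 11 and 12 across the four consecutive years in which the graduating class passed through those grades. The function name `graduation_rate` and the example counts are hypothetical.

```python
def graduation_rate(graduates, dropouts_by_grade):
    """Graduation rate (%) = graduates / (graduates + dropouts in grades 9-12).

    dropouts_by_grade holds four counts (grades 9, 10, 11, 12), assumed to be
    taken from the four consecutive years the graduating class spent in high school.
    """
    if len(dropouts_by_grade) != 4:
        raise ValueError("expected dropout counts for grades 9 through 12")
    denominator = graduates + sum(dropouts_by_grade)
    if denominator == 0:
        raise ValueError("no graduates or dropouts recorded")
    return 100.0 * graduates / denominator


if __name__ == "__main__":
    # Hypothetical counts: 270 graduates; 10, 8, 7 and 5 dropouts in grades 9-12.
    print(graduation_rate(270, [10, 8, 7, 5]))  # 270 / 300 -> 90.0
```

Because dropouts enter the denominator directly, a school's graduation rate falls as its cumulative dropouts rise, which is consistent with the strong negative correlation between the two rates reported above.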

Analysis

Data from the demographic and program audit forms were entered into an SPSS database. The total audit score was used to determine the level of program implementation. Responses marked “Not applicable” or “None” were coded 0 to reflect no attempt at implementation, even though the audit records them as separate options. “In progress” was coded 1, “Completed” 2 and “Implemented” 3. The total audit score was the simple sum of the coded scores for the 115 responses. Appropriate Pearson-family correlation coefficients were used to analyze relationships among the total audit score (program implementation), student-to-counselor ratio and school outcome measures. Simple linear regression analyses were conducted to determine whether the degree of ASCA National Model implementation significantly predicted the student outcomes of achievement scores, attendance and graduation rate at each level: elementary, middle and high school.
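The analyses were run in SPSS; the Python sketch below is not that code but an illustration, assuming ordinary least squares with a single predictor, of what each simple regression computes. The function name `simple_regression` and the toy data are hypothetical. With one predictor, the standardized slope (β) equals Pearson r, so the β values reported in the Results can also be read as correlations.

```python
from math import sqrt
from statistics import mean


def simple_regression(x, y):
    """Ordinary least squares fit of outcome y on a single predictor x.

    Returns the raw slope and intercept, Pearson r, and the standardized
    slope (beta). With only one predictor, beta equals r.
    """
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need paired data with at least 3 observations")
    mx, my = mean(x), mean(y)
    sxx = sum((a - mx) ** 2 for a in x)                    # sum of squares of x
    syy = sum((b - my) ** 2 for b in y)                    # sum of squares of y
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))   # sum of cross-products
    if sxx == 0 or syy == 0:
        raise ValueError("predictor and outcome must both vary")
    slope = sxy / sxx
    intercept = my - slope * mx
    r = sxy / sqrt(sxx * syy)
    beta = slope * sqrt(sxx / syy)  # slope rescaled by SD(x) / SD(y)
    return {"slope": slope, "intercept": intercept, "r": r, "beta": beta}


if __name__ == "__main__":
    audit_scores = [1, 2, 3, 4, 5]     # toy predictor values (hypothetical)
    outcome_values = [2, 4, 5, 4, 5]   # toy outcome values (hypothetical)
    print(simple_regression(audit_scores, outcome_values))
```

In this toy example the slope is 0.6 and r ≈ .77. Because achievement is coded as the percentage of students scoring basic, a negative β for that outcome means that higher implementation went with fewer students scoring basic.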

Results

Of the 164 schools in the two participating school districts, 115 (70%) returned completed consent, demographic and program audit forms for analysis. Two high schools were eliminated because they were designated alternative schools, yielding a total participation rate of 113 schools (69%). Type I error (α) was set at the .05 level of probability for all analyses, and results with .05 ≤ p < .10 were reported as trends. Effect sizes for r or R were interpreted following Cohen (1988): .10 indicated a small effect, .30 a medium effect and .50 a large effect.
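Cohen's benchmarks above can be applied mechanically to the magnitude of r or β. The Python sketch below is one way to do so; the function name `effect_size_label` and the label "below small" for values under .10 are our own illustrative choices. A value such as .442 falls between the medium and large benchmarks; this sketch simply returns the highest benchmark the value meets ("medium").

```python
# Cohen's (1988) benchmarks for r or R, as adopted in this study,
# ordered from largest to smallest cutoff.
BENCHMARKS = ((0.50, "large"), (0.30, "medium"), (0.10, "small"))


def effect_size_label(r):
    """Label the magnitude of a correlation; the sign (direction) is ignored."""
    magnitude = abs(r)
    for cutoff, label in BENCHMARKS:
        if magnitude >= cutoff:
            return label
    return "below small"  # hypothetical label for |r| < .10


if __name__ == "__main__":
    for value in (-0.645, -0.517, 0.506, 0.442, 0.15):
        print(value, effect_size_label(value))
```

Ignoring the sign matches how the achievement outcomes are coded here: a negative r with the percentage of students scoring basic is a favorable result of the same magnitude.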

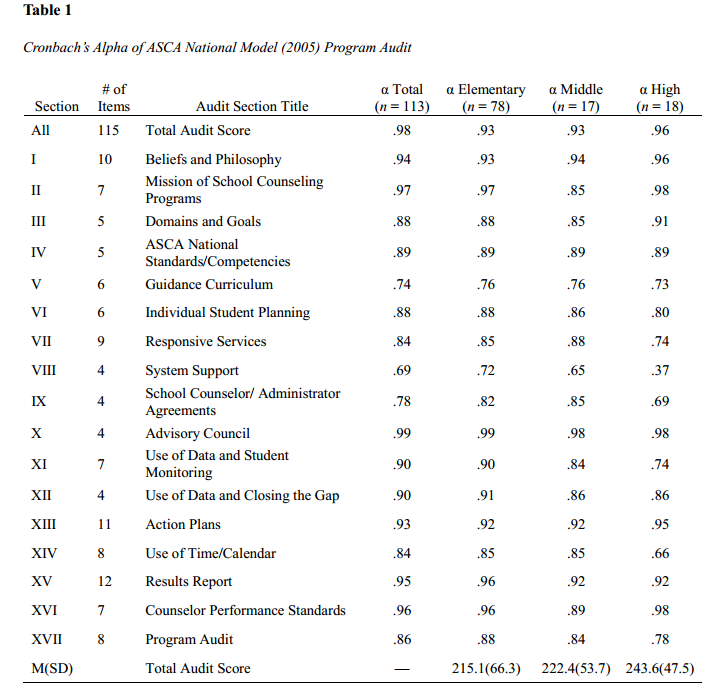

This study provides the first reported analysis of the internal consistency of the ASCA program audit (ASCA, 2005). Internal consistency was measured using Cronbach's coefficient alpha, calculated for the total scale and for each of the 17 sections of the program audit on the current total sample (n = 113), and separately for the elementary (n = 78), middle (n = 17) and high school (n = 18) samples. Table 1 summarizes these coefficients alpha for the total sample and for the elementary, middle and high school samples. For the total scale of 115 items, α = .98 indicated an extraordinarily high degree of internal consistency. All 17 sections showed an adequate degree of internal consistency for the total sample, with α ranging from .69 to .99 (median α = .89).
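Coefficient alpha compares the sum of the individual item variances with the variance of respondents' total scores: α = k / (k − 1) × (1 − Σ item variances / total-score variance), where k is the number of items. The Python sketch below implements that standard formula for a schools-by-items score matrix; the function name `cronbach_alpha` and the toy scores are hypothetical and are not the study's data.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's coefficient alpha for a respondents-by-items score matrix.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    Sample variances are used throughout; the ratio is unchanged if
    population variances are used consistently instead.
    """
    if len(rows) < 2:
        raise ValueError("need at least 2 respondents")
    k = len(rows[0])
    if k < 2 or any(len(row) != k for row in rows):
        raise ValueError("need at least 2 items, same count for every respondent")
    item_variance_sum = sum(variance(column) for column in zip(*rows))
    total_variance = variance([sum(row) for row in rows])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


if __name__ == "__main__":
    # Toy data: 4 schools x 3 audit items, coded 0-3 (hypothetical values).
    scores = [
        [3, 3, 2],
        [2, 2, 2],
        [1, 0, 1],
        [0, 1, 0],
    ]
    print(round(cronbach_alpha(scores), 3))
```

When items rise and fall together across schools, the total-score variance greatly exceeds the sum of item variances and α approaches 1; with 115 items, even modest inter-item correlations can push α for the full scale very high.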

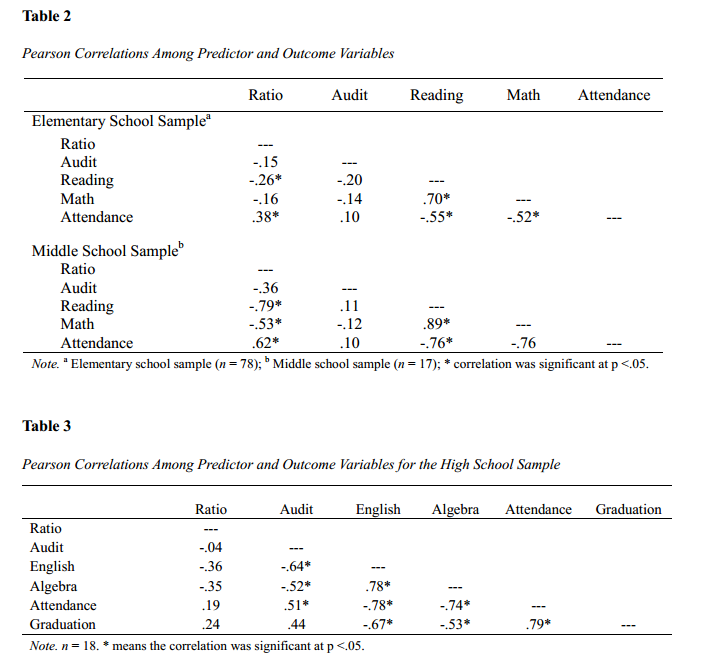

Correlation coefficients between the predictor and outcome variables are presented in Table 2 for the elementary and middle school samples and in Table 3 for the high school sample. A cursory inspection shows strong intercorrelations among the outcome variables, with magnitudes of |r| > .50 in all instances, which are large effect sizes. Correlations between the program audit predictor variable and outcome measures at the elementary and middle school levels were not significant (p > .05; see Table 2) and yielded small effect sizes ranging from .10 to .20 (adjusted for directional effects). At the high school level (see Table 3), however, correlations between the program audit predictor variable and outcome measures were significant, with large effect sizes. Descriptive statistical analysis indicated that all variables were normally distributed with one exception: the elementary reading outcome had a skewness index of 1.42.
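A skewness index summarizes the asymmetry of a distribution: 0 for a symmetric distribution, positive when a long tail extends toward higher values. The Python sketch below computes the adjusted Fisher–Pearson sample skewness; we assume, but have not confirmed from the study, that this is the index SPSS reported. The function name `skewness` is hypothetical.

```python
from statistics import mean, stdev


def skewness(values):
    """Adjusted Fisher-Pearson sample skewness (G1).

    G1 = n / ((n - 1) * (n - 2)) * sum(((x - mean) / s) ** 3),
    where s is the sample standard deviation (n - 1 denominator).
    """
    n = len(values)
    if n < 3:
        raise ValueError("need at least 3 values")
    m, s = mean(values), stdev(values)
    if s == 0:
        raise ValueError("values have zero spread")
    return n / ((n - 1) * (n - 2)) * sum(((x - m) / s) ** 3 for x in values)


if __name__ == "__main__":
    print(skewness([1, 2, 3, 4, 5]))    # symmetric: approximately 0
    print(skewness([1, 1, 1, 10]))      # long right tail: positive
```

Under this index, the positive value of 1.42 for elementary reading indicates that most schools had relatively low percentages of students scoring basic, with a tail of schools showing higher percentages.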

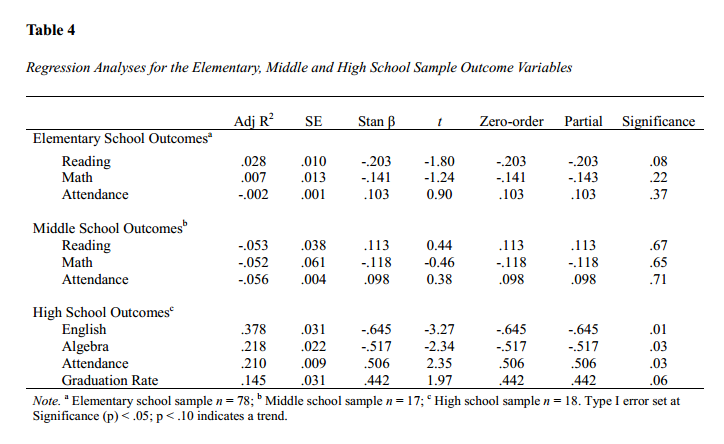

To assess the proportion of variance in outcomes accounted for by the ASCA program audit, simple linear regression analysis was used to test the hypothesis that program audit scores would significantly predict each outcome variable at each of the three school levels (elementary, middle and high). These regression results are presented in Table 4. Program audit scores did not significantly predict any student outcome at either the elementary or middle school level, although the prediction of fifth-grade reading achievement approached significance (p = .08). At the high school level (n = 18), however, program audit scores significantly predicted English (β = -.645, t = -3.27, p < .05, large effect), algebra (β = -.517, t = -2.34, p < .05, large effect) and attendance (β = .506, t = 2.35, p < .05, large effect) outcomes, and a trend was noted in the prediction of high school graduation rate (β = .442, t = 1.97, p = .06, medium to large effect).
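In a one-predictor regression, the standardized slope equals r, the proportion of variance explained is R² = β², and the slope's t statistic with n − 2 degrees of freedom is t = β√(n − 2) / √(1 − β²). The Python sketch below applies these standard relations; the function names `t_from_beta` and `variance_explained` are hypothetical, and the example uses the attendance and graduation-rate values reported above for the high school sample.

```python
from math import sqrt


def t_from_beta(beta, n):
    """t statistic for the slope of a one-predictor regression.

    With a single predictor the standardized slope equals Pearson r, so
    t = r * sqrt(n - 2) / sqrt(1 - r**2) with n - 2 degrees of freedom.
    """
    if n < 3 or not -1 < beta < 1:
        raise ValueError("need n >= 3 and -1 < beta < 1")
    return beta * sqrt(n - 2) / sqrt(1 - beta ** 2)


def variance_explained(beta):
    """Proportion of outcome variance accounted for (R squared = beta squared)."""
    return beta ** 2


if __name__ == "__main__":
    # Betas reported for the high school sample (n = 18).
    for label, beta in (("attendance", 0.506), ("graduation rate", 0.442)):
        print(label, round(t_from_beta(beta, 18), 2), round(variance_explained(beta), 3))
```

For attendance, β = .506 with n = 18 gives t ≈ 2.35 and R² ≈ .26, so program implementation accounted for roughly a quarter of the variance in high school attendance; for graduation rate, β = .442 gives t ≈ 1.97 and R² ≈ .20.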

Discussion

The purpose of the study was to determine whether level of ASCA National Model (2005) program implementation predicted student outcomes (i.e., achievement scores, attendance and graduation rates). Program implementation did not predict any outcome variable (reading, math or attendance) at either the elementary or middle school level. At the high school level, as program implementation increased, the percentage of students scoring basic on the MSA English and algebra assessments decreased, which is a positive result. Likewise, at the high school level, attendance increased as program implementation increased, and high school graduation rates showed a related upward trend. Thus, the hypothesis that higher program implementation would predict better student outcomes received mixed support: it was supported at the high school level but not at the elementary or middle school level. The high school findings suggest a need for high school counselors to implement comprehensive developmental programs in order to benefit all students and improve important school and student outcomes.

Why the high school findings were not replicated at the elementary and middle school levels is puzzling, as the extant literature demonstrates a significant relationship between program implementation and student outcomes at all levels of schooling. One explanation may lie in the samples used for this study. The middle and high school samples were small (n = 17 and n = 18, respectively), reducing the power of the analyses, which may help explain the nonsignificant middle school results; the elementary sample, however, was much larger (n = 78). A cursory inspection of the means and standard deviations of the three samples (see Table 1) indicates that the elementary sample had the lowest level of overall program implementation and the largest spread in scores (M = 215.1, SD = 66.3), compared to the middle school (M = 222.4, SD = 53.7) and high school (M = 243.6, SD = 47.5) samples. This pattern does not explain the null elementary findings, however, because greater variability in scores usually leads to better prediction.

Study Limitations and Areas for Future Research

Additional inquiry regarding the implementation of comprehensive school counseling programs and student outcomes is necessary to determine the link between student outcomes and school counseling services. Some researchers have pointed out that previous investigations in this area have yielded misleading results (Brown & Trusty, 2005; McGannon et al., 2005). For example, many of the studies used research designs and procedures that could not support a causal relationship between counseling programs and positive outcomes. The present study was likewise correlational and did not use a controlled treatment intervention, so it cannot establish a causal relationship between level of program implementation and more positive student outcomes. The small middle school and high school samples may also have affected the results.

Various confounding variables exist in the current and previous studies, such as other co-occurring educational programs and school organizational structure and leadership, all of which tend to influence academic achievement. Moreover, some of the data collected in these previous studies were self-reported and not cross-validated with multiple sources of information or informants. Berliner and Biddle (1995) noted that researchers comparing counseling programs and student achievement often fail to control for per-pupil expenditure; because the two are highly correlated, many presume that per-pupil expenditure is equivalent to socioeconomic status, although it is not always so (Brown & Trusty, 2005). Failure to control for socioeconomic status can also confound results and may have been a factor in this study, although drawing on only two large school systems may have provided some control for per-pupil expenditure.

McGannon et al. (2005) emphasized the need for future studies to include standardized achievement scores and other institutional data, intervention effect sizes and a measure of the quality of program implementation in order to produce sound findings. Brown and Trusty (2005) recommended the use of proximal outcomes, which reflect the targets of interventions used with students (e.g., the development of specific ASCA competencies). They also pointed out the overuse of distal outcomes (e.g., ACT scores, achievement test scores, school grades), which are affected by many factors and are not a direct result of school counselor services. While proximal outcomes such as the development of competencies, including those within the ASCA model, may be beneficial to report, the methods used to establish these competencies also become the focus of scrutiny.

Longitudinal and experimental design studies that include control and treatment groups are necessary to establish causal relationships. Correlational designs are often chosen because they are expedient and relatively easy to conduct. Longitudinal studies take years to complete and are subject to student attrition. Experimental studies in schools are also complicated by the difficulty of locating a school willing to serve as the control group (i.e., a school that does not have a counseling program in place or a school counselor on staff).

Outcome research plays a central role in promoting school counselors as an integral part of the educational process. It is critical for school counselors to use interventions and program components that produce positive student outcomes (McGannon et al., 2005) and to be knowledgeable about current research relevant to their position and the population they serve.

References

American School Counselor Association. (2005). The ASCA national model: A framework for school counseling programs (2nd ed.). Alexandria, VA: Author.

Berliner, D. C., & Biddle, B. J. (1995). The manufactured crisis: Myths, fraud, and the attack on America’s public schools. Reading, MA: Addison-Wesley.

Brigman, G., & Campbell, C. (2003). Helping students improve academic achievement and school success behavior. Professional School Counseling, 7, 91–99.

Brown, D., & Trusty, J. (2005). School counselors, comprehensive school counseling programs, and academic achievement: Are school counselors promising more than they can deliver? Professional School Counseling, 9, 1–8.

Carrell, S. E., & Carrell, S. A. (2006). Do lower student to counselor ratios reduce school disciplinary problems? Contributions to Economic Analysis & Policy, 5, 1–24.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Hillsdale, NJ: Erlbaum.

College Board. (2009). About us: The College Board. Retrieved from http://www.collegeboard.com/about/index.html

Education Trust. (2009). About the Education Trust. Retrieved from http://www.edtrust.org/dc/about

Lapan, R. T. (2001). Results-based comprehensive guidance and counseling program: A framework for planning and evaluation. Professional School Counseling, 4, 289–299.

Lapan, R. T., Gysbers, N. C., & Kayson, K. (2007). How implementing comprehensive guidance programs improves academic achievement for all Missouri students. Jefferson City, MO: Missouri Department of Elementary and Secondary Education, Division of Career Education.

Lapan, R. T., Gysbers, N. C., & Petroski, G. (2001). Helping 7th graders be safe and academically successful: A statewide study of the impact of comprehensive guidance programs. Journal of Counseling and Development, 79, 320–330.

Lapan, R. T., Gysbers, N. C., & Sun, Y. (1997). The impact of more fully implemented guidance programs on the school experiences of high school students: A statewide evaluation study. Journal of Counseling and Development, 75, 292–302.

Maryland State Department of Education. (2010). 2010 Maryland Report Card [Data file]. Retrieved from www.mdreportcard.org

McGannon, W., Carey, J., & Dimmitt, C. (2005). The current status of school counseling outcome research. Amherst, MA: Center for School Counseling Outcome Research, University of Massachusetts, Amherst.

Nelson, D. E., Gardner, J. L., & Fox, D. G. (1998). An evaluation of the comprehensive guidance program in Utah public schools. Salt Lake City, UT: Utah State Office of Education.

Sink, C. A. (2005). Comprehensive school counseling programs and academic achievement—A rejoinder to Brown and Trusty. Professional School Counseling, 9, 9–12.

Sink, C. A., & Stroh, H. R. (2003). Raising achievement test scores of early elementary school students through comprehensive school counseling programs. Professional School Counseling, 6, 350–364.

Whiston, S. C., & Wachter, C. (2008). School counseling, student achievement, and dropout rates: Student outcome research in the state of Indiana (Special Report). Indianapolis, IN: Indiana State Department of Education.

Wong, K. S. (2008). School counseling and student achievement: The relationship between comprehensive school counseling programs and school performance [Doctoral dissertation]. Retrieved from Dissertation Abstracts International Section A: Humanities and Social Sciences. (AAT 3322290)

Lauren E. Palmer, NCC, is a school counseling graduate student at Loyola University Maryland. Bradley T. Erford, NCC, is a Professor at Loyola University Maryland. Correspondence can be addressed to Bradley T. Erford, Loyola University Maryland, 2034 Greenspring Drive, Timonium, MD 21093, berford@loyola.edu.