Feb 26, 2021 | Volume 11 - Issue 1

Lisbeth A. Leagjeld, Phillip L. Waalkes, Maribeth F. Jorgensen

Researchers have frequently described rural women as invisible, yet at 28 million, they represent over half of the rural population in the United States. We conducted a transcendental phenomenological study using semi-structured interviews and artifacts to explore 12 Midwestern rural-based mental health counselors’ experiences counseling rural women through a feminist lens. Overall, we found eight themes organized under two main categories: (a) perceptions of work with rural women (e.g., counselors’ sense of purpose, a rural heritage, a lack of training for work with rural women, and the need for additional research); and (b) perceptions of rural women and mental health (e.g., challenges, resiliency, protective factors, and barriers to mental health services for rural women). We offer specific implications for counselors to address the unique mental health needs of rural women, including hearing their stories through their personal lenses and offering them opportunities for empowerment at their own pace.

Keywords: rural women, mental health counselors, feminist, perceptions, phenomenological

More than 28 million women, ages 18 and older, live in rural America and represent over half of the rural population in the United States (Bennett et al., 2013; U.S. Census Bureau, 2010). Researchers have discussed women’s issues as a distinct category within counseling for over 50 years, yet few counseling programs offer training specific to counseling women (American Psychological Association [APA], 2018; Broverman et al., 1970; Enns, 2017). Rural women have garnered even less attention within counseling literature and training over time (Bennett et al., 2013; Fifield & Oliver, 2016). In addition, rural mental health researchers have focused on rural populations in general, encapsulating women under the entire family unit (U.S. Department of Agriculture, 2015). However, in all environments, women experience mental health needs in unique ways (Mulder & Lambert, 2006; Wong, 2017). Although government agencies have increased efforts to alleviate mental health disparities in rural areas, there is limited research available on rural women’s mental health to guide these efforts (Carlton & Simmons, 2011; Hill et al., 2016). Thus, more studies focused on rural women can assist in comprehensive data-based decision-making efforts of federal, state, and local policymakers (Van Montfoort & Glasser, 2020). Mental health counselors who work with rural women have a unique perspective in understanding the needs of rural women and the disparities they face.

The Invisibility of Rural Women’s Mental Health

Researchers have described rural women as invisible within the mental health literature. Specifically, they have used words such as “unnoticed,” “lack of recognition,” “overlooked,” and “no voice and no choice,” which may illuminate why rural women have less access to appropriate mental health services and may underlie the noticeable absence of rural women as participants within research (Mulder & Lambert, 2006; Weeks et al., 2016). Members of rural communities have traditionally seen women as an extension of their nuclear and extended families and as responsible for involvement in community and church activities (Mulder & Lambert, 2006). Rural women, as a population with unique mental health needs, may need help (i.e., representation in research) getting their voices heard on a more macro level to promote systemic changes (Van Montfoort & Glasser, 2020). A research approach based in feminist theory may amplify the voices of rural women (Schwarz, 2017).

Feminism is a theoretical approach that evolved following the women’s movement in the 1960s, and grew to effect change in social, political, and cultural beliefs about women’s roles (Evans et al., 2005). Many of the early feminist writers spoke of women as “oppressed” and “having no voice” (Evans et al., 2005). Those words have been similarly found throughout the literature on rural women (Weeks et al., 2016). Feminist theory has traditionally challenged the status quo of the patriarchy by working to reduce the invisibility of women’s experiences (Evans et al., 2005; Schwarz, 2017). Further, feminist theory has evolved to amplify voices of all oppressed and marginalized individuals and to promote recognition of the intersectionality of identity. The feminist perspective can facilitate insight into the context of rural women’s experiences (Wong, 2017).

Challenges Faced by Rural Women

The definition of rural areas has historically been based on population size (U.S. Census Bureau, 2010). Some consider rurality a more accurate term than rural, as it may include population density, economic concerns, travel distances to providers, religion, agricultural heritage, behavioral norms, a shared history, and geographical location (Smalley & Warren, 2014). Rural women face unique needs related to the intersection of gender with race, ethnicity, age, and sexual orientation (Barefoot et al., 2015). Rural women have less access to educational opportunities, are often the head of household, and are more likely to live in poverty than urban women (Watson, 2019). Lesbian and bisexual rural women face challenges of bias, lack of support, and increased victimization (Barefoot et al., 2015). Although urban women also experience mental health issues related to motherhood, rural women often must travel long distances to services and have limited access to postpartum care (Radunovich et al., 2017). Residents in many rural communities experience food insecurity and related disordered eating with less proximity to grocery stores and limited food choices (Doudna et al., 2015). Isolation also creates a greater risk for partner abuse that is complicated by long distances to shelters, lack of anonymity, and a widely held view of traditional gender roles (Weeks et al., 2016). The lack of research regarding rural women and mental health compromises the efforts of rural counselors to provide care that is culturally responsive and efficacious (Imig, 2014). In addition, the recognized barriers of accessibility, availability, and acceptability of mental health services in rural areas disproportionally affect rural women (Radunovich et al., 2017).

Barriers to Mental Health Services

A lack of professionals, limited training for work in rural areas, high rates of turnover of mental health professionals, and limited research about rural demographics can negatively impact the quality of services (Smalley & Warren, 2014). In addition, rural residents may experience barriers such as long distances to services, adverse weather conditions, affordability of services, and a lack of insurance coverage (Smalley & Warren, 2014). Rural women may also feel reluctant to seek out mental health services for fear of loss of anonymity and the stigma attached to seeking mental health services in rural areas (Snell-Rood et al., 2017). Approximately 40% of rural residents with mental health issues opt to seek treatment from primary care physicians (PCPs), as these professionals may represent the only health care providers in the area (Snell-Rood et al., 2017). However, these professionals often have limited expertise in diagnosing and treating mental health issues (Hill et al., 2016).

Currently, the Council for Accreditation of Counseling and Related Educational Programs (CACREP; 2015) does not specify rurality or other cultural identities when referencing cultural competence within required curriculum. This omission may contribute to minimal specialized training, in addition to the limited research for mental health counselors to use as a guide for understanding the unique needs of rural women (Watson, 2019). Additionally, agencies have difficulty recruiting mental health counselors because of isolation from colleagues and supervisors, lower salaries, limited social and cultural opportunities, and few training opportunities specific to rural mental health (Fifield & Oliver, 2016).

Addressing Mental Health Needs of Rural Women

Given the limited research about rural women and their unique mental health needs, rural counselors are left with few evidence-based practices to utilize when working with this population (Imig, 2014). Historically, counseling researchers have equated “mentally healthy adults” with “mentally healthy adult males,” resulting in literature that is focused on best practices more appropriate for men (Broverman et al., 1970), and potentially upholding sex-role stereotypes within the fields of psychology, social work, medicine, and mental health counseling (APA, 2018; Schwarz, 2017). More recent researchers have demonstrated the efficacy of gender-specific counseling approaches (Enns, 2017). However, the approaches often do not consider the additional barriers to services that rural women may face, such as long distances to services, limited availability of mental health professionals, and the stigma of seeking services in a rural area (Hill et al., 2016).

In this transcendental phenomenological study, we sought to explore the lived experiences of licensed professional counselors (LPCs) who work with rural women in terms of their perceptions of rural Midwestern women’s mental health, and the academic training they received to prepare them for working with rural women. The study sought to answer the following research questions: (a) What are the lived experiences of LPCs who work with rural women?; (b) What are the challenges and benefits of working with rural women?; (c) How are mental health services perceived by those working with rural women?; and (d) What training, if any, did the participants receive that was specific to work with rural women?

Method

Qualitative research, by its very nature, validates individuals who may be disempowered (Morrow, 2007; Ponterotto, 2010). Phenomenology is a qualitative method that helps researchers describe the common meaning of participants’ lived experiences specific to a particular phenomenon (Creswell & Poth, 2018). In this study, the phenomenon was the lived experiences of LPCs who worked with rural women. Transcendental phenomenology (Moustakas, 1994) provided a framework for the study that began with epoché, a process of bracketing the researchers’ experiences and biases, and the collection of participant stories (Creswell & Poth, 2018). For this study, postpositivist elements of transcendental phenomenology (e.g., bracketing and data analysis) were utilized to reduce researcher biases (Moustakas, 1994). Specifically, we viewed bracketing as essential because participants might not share the feminist viewpoint of the researchers. The infusion of feminism into the study came from a constructivist/interpretivist standpoint as I (i.e., first author and lead researcher) believed—based on literature—the stories of rural women were not being heard and, thus, designed the study to help illuminate the experiences, mental health needs, and resiliency of rural women (Morrow, 2007).

Participants

For this study, participants were recruited using criterion and snowball sampling. Criterion sampling involved selecting individuals on the basis of their shared experiences and their abilities to articulate those experiences (Heppner et al., 2016). Snowball sampling allowed for selecting participants who previously had a demonstrated interest in this area of research based on their connection to other participants. Criteria for participation included a degree from a CACREP-accredited counseling program, licensure within their jurisdiction, current practice, and clinical work that included rural women. To recruit participants, we collected names and emails from a Midwestern state counseling association; however, this method produced only two responses. We therefore utilized snowball sampling by asking participants to refer us to others who met our eligibility criteria (Creswell & Poth, 2018). We determined the number of LPCs needed to describe the phenomenon by achieving saturation of the data collected (Heppner et al., 2016). This saturation was reflected by eventual redundancy in participant responses.

Following approval from the appropriate IRB, an invitation to participate was emailed to potential participants and included a link to a demographic form and informed consent for those who met the criteria and wished to participate. Rural areas were defined as those geographic areas containing counties with populations of less than 50,000, a definition that did not include population density but was appropriate for the Midwestern areas included in the study (Smalley & Warren, 2014). Twelve mental health counselors met the eligibility criteria for participation and enrolled in the study.

All participants had graduated from a CACREP-accredited counseling program, were licensed to practice within their jurisdiction, were currently practicing privately or in an agency, and had a clinical caseload that included rural women. The designation of LPC was used throughout the study and included all levels of licensure within the various jurisdictions. All of the LPCs reported working with a wide variety of mental health issues; three of the LPCs had addiction counseling credentials. Eleven participants self-identified as female and one self-identified as non-binary. Eleven participants self-identified as Caucasian, and one self-identified as Native American. Years of experience working as a mental health professional ranged from 4 years to 27 years, with an average of approximately 12 years. All participants reported working with both urban and rural clients, and one participant listed a reservation as the primary location for her work. LPCs’ clients included adult rural women from the upper Midwest. The rural women were single or married with children, working or unemployed, Caucasian or Native American. In addition, all the participants expressed a connection to rural areas, either through personal experience of growing up in a rural area or through connections with extended family. Each participant chose a pseudonym that is referred to throughout the manuscript.

Data Collection

We collected data through individual semi-structured interviews and participant artifacts. The semi-structured interview format allowed for more collaboration and interaction between interviewer and interviewee (Creswell & Poth, 2018). In this way, the interview format aligned with a feminist research approach and helped eliminate a power differential between researcher and participant (Heppner et al., 2016). There were 12 interview questions aimed at exploring participants’ work with rural women, participants’ perceptions of the unique mental health needs of rural women, the influence of participants’ rural heritage on their work with rural women, challenges and benefits of participants’ work with rural women, and participants’ training specific to work with rural women (see Appendix for all 12 interview questions). As lead researcher, I conducted all 12 interviews in order to maximize consistency in employing the interview protocol while allowing participants to elaborate on responses. Interviews ranged from 30 to 45 minutes. All research documents, such as informed consents, demographic questionnaires, and transcriptions, were securely stored on a password-protected device.

Participants were invited to share artifacts that represented their work with rural women. Artifacts could include personal letters, poems, artwork, and photos (Heppner et al., 2016). The artifacts in this study provided an opportunity for broader expression of the counselors’ experiences as well as understanding their connection to rural life. Seven artifacts were pictures of objects or individuals that inspired participants’ work with rural women, two were stories about experiences of rural women, and one was an original poem entitled “Rural Woman.”

Data Analysis

Brown and Gilligan’s (1992) research of young women and relationships utilized a Listener’s Guide for analyzing data. This guide is feminist and relational and allows researchers to pay attention to unheard voices. The Listening Guide is considered a psychological method that reflects the “social and cultural frameworks that affect what can and cannot be spoken or heard” (Gilligan & Eddy, 2017, p. 76). The method included three successive “listenings”—one for plot, one for “I” statements, and one for the individual in relationship to others (Brown & Gilligan, 1992). Throughout the listening process, I looked for and highlighted significant statements the participants made during the interview process that reflected the experiences of the phenomenon. I organized information via a phenomenological template under the heading “Essence of the Phenomenon” and included personal bracketing (epoché), significant statements, meaning units, and textural and structural descriptions (Creswell & Poth, 2018). Although a transcription service was utilized to transcribe the interviews, I read through the transcripts several times and coded data into categories or themes, which emerged organically from the transcripts. An independent peer reviewer then examined the transcriptions and helped to develop the codes and themes. We developed clusters of meaning from the significant statements into themes, followed by a textural and structural description that encompassed the significant statements and related themes. The rich and thick descriptions became the essence of the phenomenon enhanced by continual review of the interview tapes, journal notes, artifacts, and other data collected (Morrow, 2005).

Epoché

The epoché section was written from my perspective as the primary researcher and first author. I was responsible for designing the study, collecting and analyzing data, and writing the manuscript. My co-authors served as consultants in designing the study and helped to write and edit the manuscript. As the primary researcher, I sought to see the lived experiences of participants from a perspective that was free from my assumptions (Creswell & Poth, 2018). I grew up in a Midwestern rural area, steeped in traditional gender roles, while witnessing significant change for all women in expectations and opportunities. During the process of the study, it became apparent that my perceptions of rural women as stay-at-home farmwives had changed to reflect a population more diverse in ethnicity, family structure, and socioeconomic status; however, the traditional patriarchal expectations had not changed. My work as a mental health professional shaped my desire to explore other LPCs’ perceptions of their work with rural women. Prior to the data analysis, I bracketed my personal and professional rural experiences, including my experiences of power differentials within rural areas.

Trustworthiness

To promote trustworthiness, I utilized self-reflective journaling, member checks, the achievement of data saturation, independent peer review, and an external audit. I kept a journal and made notes throughout the data collection process to facilitate an awareness of biases and/or assumptions that emerged during the process (Heppner et al., 2016; Morrow, 2005). I also conducted member checks, asking all participants to review and provide feedback via email on descriptions or themes (Creswell & Poth, 2018; Morrow, 2005). Frequently, participants would elaborate on themes by adding clarification to their responses to the interview questions. The “prolonged interaction” (Ponterotto, 2010, p. 583) with participants was significant for developing an egalitarian and unbiased relationship between researcher and participant. This strategy was congruent with feminist theory because it acknowledged the subjectivity of the researcher within the study and facilitated a collaborative relationship between researcher and participant (Morrow, 2007).

Coding the data into categories or themes helped arrange the large amount of data that was collected. The process was made easier by taking notes, or “memoing,” when reading through the information. The peer reviewer evaluated potential researcher bias by checking the coding against all transcripts, serving as a “mirror” that reflected my responses to the research process (Morrow, 2005, p. 254). Next, we discussed possible themes that emerged from the data (Heppner et al., 2016). I also utilized an external auditor to aid in establishing confirmability of the results rather than objectivity (Morrow, 2005). The auditor examined the entire process and determined whether the data supported my interpretations (Creswell & Poth, 2018). Both individuals had participated in phenomenological research and were not authors of this article.

Results

Analysis of the interview transcripts, the artifacts, and the journal reflections resulted in eight themes, organized into two categories. I further categorized each theme as (a) textural, the LPC’s subjective experience with rural women; or (b) structural, the context of the experience. According to Moustakas (1994), the textural themes represent phenomenological reduction, a way of understanding that includes an external and internal experience; the structural themes represent imaginative variation, the context of the experience. One of the themes, counselor experience, fit the description of both textural and structural. The categories represented two distinct dimensions of the phenomenon: (a) LPCs’ perceptions of their work with rural women, and (b) LPCs’ perceptions of rural women and issues related to mental health.

Dimension 1: LPCs’ Perceptions of Their Work With Rural Women

Five textural themes emerged from the coding process; I took the names of three of these verbatim from the interviews. The textural themes included 20 codes that represented the subjective experiences of LPCs’ work with rural women. The participants’ pseudonyms were inserted into the direct quotes included in theme descriptions. Artifacts offered by participants were also included.

Bootstraps

Rooted in the familiar saying of “pull yourself up by your bootstraps,” this theme included codes of resilient, stoic, self-sufficient, and independent. According to LPCs’ perceptions of rural women, bootstraps described an acceptance of the current conditions of rural life and a reliance on past experiences for guidance. Many of the LPCs believed that rural women came to counseling with a skill set that, as Nancy said, “can teach us and others about how to be resilient.” Fave commented that working with rural women also required patience:

It’s this sense of “I can do this.” There are more demands with farming, and rural women still believe they should be able to do it all. When they come into counseling it can be difficult because they have worked hard to sort of protect this thing and keep it close to them because they’re pretty sure they can figure it out themselves.

Courtney shared a story about a ranch woman who was grieving the loss of her husband and was struggling with family issues. The client remarked in one session, “Today I decided it was time to put on my red cowboy boots.” For Courtney, this represented her client’s resiliency and stoicism: “I’ve got this, and I’ve got my red boots on to prove it.”

Trailblazer

Trailblazer included pioneer, open-minded, resourceful, educated, and empowered; these words described LPCs’ perceptions of rural women’s abilities to move past accepting the realities of rural living and work toward change for improving themselves, their families, and their communities. According to the LPCs, this theme is distinct from bootstraps in that it is future-oriented rather than past-oriented. Elsie first referred to trailblazer when she told a story about a client who began recycling in the early 1980s: “She had bins and bins of recycling because she said, ‘I’m gonna leave this planet in a different shape than I found it.’ Rural women very much can be trailblazers.” The LPCs’ perceptions represented a new perspective that reflected resourceful change-makers, educated and empowered to challenge the status quo.

As one of her artifacts, Courtney offered a story about one woman’s determination to make Christmas special even though there were no resources for gifts and decorations. The woman found a large tumbleweed, covered it with lights and decorations, and declared it beautiful. Courtney said, “She was not just making do, but making things better.”

Challenges of Rural Women

LPCs observed multiple challenges for rural women including isolation, poverty/financial insecurity, role overload, grief, and generational trauma. Layla talked about the complex grief that was experienced by Native American women. She commented that “the death of a family member can mean losing someone from three or four generations. There is grief from loss of jobs, moving from the reservation, and loss of culture.” LPCs cited role overload as one of the most common experiences among rural women. Many rural women worked full-time jobs in addition to caring for family members while contributing to the farm/ranch operation. Jean observed that rural women “are responsible for everyone’s emotions in the family, sometimes leaving them isolated within the family.” LPCs believed that the isolation contributed to vulnerability. Rural women faced domestic violence, anxiety, depression, and addictions, exacerbated by having no one to talk with and long distances to services. Jean noted that resistance to change was perpetuated by the fear and control inherent in domestic abuse for many of her clients and led to complacency in reporting. The challenges of rural women described by participants defined the issues that LPCs faced when working in rural areas and increased their awareness of the critical needs of rural women.

Protective Factors

Protective factors included a sense of identity and the strong support systems of families and community that gave rural women “a lot of people that you can draw upon to help you through hard times,” according to Nancy. Her clients valued the easy access to nature and the opportunity to “immerse yourself in something bigger than yourself. It’s a way to build resilience and find meaning and joy spending time outside.” Layla found a strong sense of identity evident in rural Native women as central to the ability to teach their children cultural beliefs—a protective factor for future generations.

Nancy shared a picture of a family moving their 100-year-old home to a new location as her artifact. Her description of the house and rural heritage symbolized part of what she believed was important for rural women—the connection to family and heritage along with a sense of purpose in maintaining family culture. She said, “It’s a good way to pass down the family stories and even the family culture.”

Counselor Experience

Counselor experience (textural) included the reasons why participants chose to become LPCs. These included the motivations that sustained their work and advice for new counselors. Assumptions about diversity, a sense of purpose, listening, and connections to resources encapsulated this theme.

Layla became a counselor because she wanted “to give back to my Native people.” Nancy believed that the work with rural women helped her build a rural counselor identity. Woods’ early experience with rural women felt profound because of the chaos she observed in the lives of her clients, many of them impoverished single mothers struggling to survive. That experience gave her a sense of purpose in her work; as she said, “These women are burned into my head.”

When asked about advice for new counselors who anticipate working with rural women, participants offered the following brief statements:

“Don’t make assumptions.” (Courtney)

“Ask to be taught.” (Marie)

“Hear their story without filtering through your own personal lens.” (Nancy)

“There is a difference in working in rural areas—a conservative mind-set, practicality—and you need to meet people where they are.” (Kay)

“Listen more than you talk.” (Suzie)

“Have respect for their culture.” (Layla)

Dimension 2: LPCs’ Perceptions of Rural Women and Issues Related to Mental Health

Three structural themes represented what Moustakas (1994) termed imaginative variation, the acknowledgment of the context of multiple perspectives. The themes were derived from nine codes that provided a vital aspect of further describing the phenomenon. The theme descriptions included participants’ quotes and artifacts.

Perceptions of Rural Heritage

This theme represented LPCs’ view of rural life, including traditional values, heritage, and expectations/perfectionism. According to participants, many of the rural women embraced the traditional values of their rural heritage, and the roles of rural life; this theme honors that perspective. Fave talked about the expectations that rural women often have of themselves: “It’s a perfectionist perspective, meaning they can do it all.” Even in light of the increased demands on rural women’s time and energy, Marie found that rural women were often hesitant to seek outside professional mental health counseling, choosing instead to rely on family and community.

Barriers to Mental Health Services

The barriers included codes of lack of resources, stigma, and invisibility. All LPCs felt concerned about the lack of resources for rural women. Suzie talked about the dearth of women’s shelters on the reservation and resources for women who are victims of domestic violence. Suzie said, “They often stay because there are no resources for them to leave, and they can’t afford it.” Woods noted the lack of daycare providers and the fact that many rural women cannot afford these services and depend on family members for childcare. According to several LPCs, rural women do not prioritize their mental health needs, possibly because of the many demands on them.

Kay and Marie practiced in an urban area but saw many rural women who chose to travel long distances for mental health services because it gave them a sense of anonymity. Kay said, “They know if their car is parked at the counselor’s office, it won’t be recognized by everyone in town.” Rural women also feared exposing family secrets if they disclosed something to a counselor who lived in the same area.

Poignantly, LPCs acknowledged the invisibility and minimization of rural women’s mental health needs. The following comments by participants exemplified the rural woman’s experiences of being unnoticed or dismissed. Elsie stated, “Even if rural women are speaking, they don’t have the platform like urban women do, and they feel like nobody gets this life.” Kay stated, “Everything is fine, everything’s great and we’re not going to talk about the fact that Grandma is crying all the time and wearing sunglasses.”

The statements of the participants provided powerful examples of the ramifications of the silencing imposed on rural women through traditional or cultural norms. The stigma of accessing mental health services created a loss of connection between the rural women who needed the services and their community. In addition, rural women often felt selfish in seeking services just for themselves. The consensus among LPCs was that rural women suffer to a greater extent than other rural populations because their needs are minimized or not recognized. Elsie remarked that rural women do not often see their stories in mainstream media, leading them to believe “I’m living this experience that nobody else lives.”

The description of the artifact contributed for this theme may further elucidate the invisibility of rural women. Woods’ artifact was a picture of two locally designed sculptures of women. Woods said, “They are so rooted and earthy.” One sculpture had no arms or legs and, for Woods, that “speaks to the limited access to needed supports and the lack of voice.”

Counselor Experience

Counselor experience (structural) described how LPCs provide mental health services to rural women and included connection to rural life, distances and dual relationships, and lack of academic training/postgraduate training. Although not all the participants grew up in rural areas, many had rural ties through extended family. Marie’s upbringing on a ranch influenced her understanding of rural women: “There is a more intense work ethic; women are very strong and independent and hardworking.”

The LPCs seemed to feel a strong sense of purpose in their work; some of them chose to become counselors and returned to their home communities to work. They discovered that the connections of shared experiences fostered trust in the counseling relationship and process. Most felt that they were helping to make positive change. Although all participants believed the connection to a rural heritage was critical in their work with rural women, some LPCs did not live and work in the same location, saying it helped to reduce the possibility of multiple relationships. Nancy commuted almost an hour to her work “because you really want to have the counseling relationship be through your therapeutic lens and not through the community lens.”

None of the participants recalled receiving academic training specific to rural areas; however, all participants agreed on the need for academic training focused on rural areas and rural women. Elsie believed that textbooks should “include women’s voices and rural voices.” Jean expressed her concern that “We don’t necessarily address rural women or what they need from the communities around them or even what their typical experience is. I think that’s a disservice to our counseling students.”

Two artifacts aligned with this theme: Marie’s picture of a young girl, dressed in overalls, pitching hay, and Mae’s great-grandmother’s writing desk (see Figure 1). Marie’s artifact exemplified the family’s connection to rural life and the physical strength of rural women that she observed in her work. Mae now uses the writing desk in her practice and feels it gives her a strong connection to her rural heritage.

Figure 1

Mae’s Great-Grandmother’s Writing Desk

Note. Mae presented this picture of her great-grandma’s writing desk when asked to provide an artifact that demonstrated her work with rural women.

Discussion

LPCs described rural women as strong, independent, resourceful, and resilient. However, this image of rural women was not corroborated within the research literature. An APA report on the behavioral health care needs of rural women (Mulder et al., 2000) did not mention resiliency as a coping strategy; however, in 2006, the report’s lead author recognized the need for additional research about resiliency in rural women, saying it would offer “significant potential benefit to rural women” (Mulder & Lambert, 2006, p. 15). In the present study, LPCs’ perceptions of rural women as resilient called attention to the innate strengths of rural women that developed out of necessity, cultivated by connections with family, community, and earth.

Rural heritage represented a dichotomy of rural tradition. From a positive perspective, participants believed the traditional roles of rural women provided a sense of identity and belonging. From a negative perspective, the traditional patriarchy evident in many rural areas dictated social and cultural norms, leaving rural women with the expectation that they should be able to “do it all.” Both perspectives defined a critical aspect of LPCs’ understanding of rural women. Even though many of the rural women whom participants described worked full-time to contribute to household income and health insurance (in addition to caretaker responsibilities), they faced gender inequities in income, employment, and educational opportunities (Watson, 2019). In addition, rural women have had little political power to effect needed policy changes for better access to care (Van Montfoort & Glasser, 2020).

LPCs highlighted multiple challenges that rural women experience: isolation, poverty, grief, role overload, and generational trauma. Barriers to obtaining services included stigma of mental health issues, loss of anonymity, a lack of resources, invisibility, and minimization of mental health issues. The general population also faces barriers of accessibility, acceptability, and availability of counseling services (Smalley & Warren, 2014); however, there were fewer references to the mental health barriers and challenges specific to rural women (Van Montfoort & Glasser, 2020). This is surprising given that the population of rural women exceeds that of any other population group in rural areas (Bennett et al., 2013). Rural women experience higher risks of depression, domestic violence, and poverty (Snell-Rood et al., 2019). The mental health services available in rural areas, often described as “loosely organized, of uneven quality, and low in resources” (Snell-Rood et al., 2019, p. 63), compound the challenges for rural women.

As evident in the themes of assumptions and diversity, rural women represent a unique population who deserve mental health services that reflect their specific needs. Rural communities and rural women are more diverse than once believed. LPCs’ observations are corroborated by research that acknowledged differences among rural women in socioeconomic status, family structure, age, sexual identity, ethnicity, education, and geographical location (Barefoot et al., 2015). In addition, there remains a misconception that the mental health needs of urban and rural women are the same; in fact, much of the literature about women and mental health is based on an urban context (Weaver & Gjesfjeld, 2014). The findings of the current study support the lack of recognition of the context of rural women’s issues and their status as an invisible population (Bender, 2016). Two LPCs’ observations of the isolation felt by rural women reinforced previous research of the invisibility of rural women. Elsie said, “Rural women don’t see their story a lot,” and Fave shared that “a lot of the women I work with don’t feel like they’re heard.”

None of the participants recalled academic training or postgraduate opportunities specific to work in rural areas or with rural women. Even though rural areas represent the largest population subgroup in the United States (Smalley & Warren, 2014), this study suggests that new counselors may not feel prepared to meet the needs of this underserved population. The shortage of mental health professionals working in rural areas and the lack of counselors who have training specific to rural mental health care suggest a need for rural-based training that might include an elective course in rural mental health and rural internships (Fifield & Oliver, 2016).

Implications

The recognition of the challenges and benefits of working with rural women may validate rural LPCs’ experiences, promote their professional identity as rural counselors, and potentially decrease the isolation felt when working in rural areas. Protective factors, including connections to family, community, and nature, may be critical for building resiliency in both rural women and rural LPCs. The increasing diversity of rural women is often contrary to the traditional stereotype of a stay-at-home farmwife (Carpenter-Song & Snell-Rood, 2017); diverse rural women may face unique barriers to accessing culturally relevant mental health services. In addition, many rural women experience role overload from working full-time and caring for families while contributing to the farm/ranch operation. Counselors should avoid interacting with rural women clients in ways that limit their identities based on stereotypes and work to make their services accessible for all women.

The study results also have implications for counselor educators. Rural-based counselors in this study did not report being taught how to work with rural women. A review of the 2016 CACREP programs found few gender-based counseling courses and none that addressed rural mental health. Programs could offer electives on counseling in rural areas, incorporate the context of gender and rural mental health into current curricula, and encourage rural internships. Collaborating with other rural health professionals may provide more informed approaches to working in rural areas. Rural residents may see their PCPs for mental health–related treatment, as PCPs may be the only health care provider in rural areas (Snell-Rood et al., 2017). Lloyd-Hazlett et al. (2020) suggested creating additional training for LPCs who choose to work in settings offering integrated care. Incorporating LPCs who have the appropriate training and skills into rural medical settings may offer mental health services in a familiar clinical context and one that does not broadcast engagement in mental health care. The collaboration may also provide more awareness of the mental health needs of rural women.

Limitations

The study has several limitations. Although I took measures to reduce any personal bias as a non-traditional rural woman, I do not believe it is possible to eliminate all biases. Many of the participants talked about empowering rural women and working toward making their clients’ voices heard, both tenets of feminist theory (Evans et al., 2005); however, participants rarely used the language of feminism. Several of the participants related personal stories of their connections with rurality and, often, their stories of rural women were from decades ago. Their stories may not have represented the current generation of rural women. Another limitation relates to the demographics of LPCs because a majority of participants self-identified as Caucasian and female and represented rural areas in the Midwest. LPCs working in other areas of the United States may encounter different demographics of rural women, mental health challenges specific to region, and unique intersections of their clients’ identities. Finally, the experiences of rural women were heard through LPCs and not from rural women clients themselves.

Directions for Future Research

This study included a sample of rural LPCs who were primarily Caucasian females from the Midwestern United States; future researchers may seek professional perspectives from participants who represent a blend of races, ethnicities, gender identities, and geographical locations. Research with rural women as participants themselves is also an important opportunity. Based on findings from this study, future researchers might also explore training needs related to work with rural women and rural populations. Studying counselor educators who teach in counseling programs based in rural areas could also offer unique insights. This may reveal information about ways educators currently infuse rural culture and work with rural women into the curriculum. Future researchers may study counselors, health care providers, and rural women to find ways to integrate health care services in rural areas, provide better access to services, and reduce the stigma often associated with mental health. Finally, additional studies about working with rural PCPs may highlight issues (e.g., intimate partner violence) that could benefit from early screening of symptoms.

Conclusion

Gilligan offers these words: “To have something to say is to be a person. But speaking depends on listening and being heard; it is an intensely relational act” (1982/1993, p. xvi). As indicated in our findings, rural women are too often invisible and unheard. This study represents a first step in amplifying the voices of rural women regarding their specific mental health needs. The experiences of the LPCs in this study have illuminated ways to connect with rural women, listen to their stories, and validate unique aspects of their cultural identities that seem to be well illustrated in one participant’s poem:

Rural Women

Resilient; stubborn; motivated

frightened; broken; courageous

Struggling; down-trodden; strong

Relentless in self-expectation

Armed with determination.

A common thread unites us

The heart gently calls, and the

soul asks only—please—listen to me.

Conflict of Interest and Funding Disclosure

The authors reported no conflict of interest or funding contributions for the development of this manuscript.

References

American Psychological Association. (2018). APA guidelines for psychological practice with girls and women. http://www.apa.org/about/policy/psychological-practice-girls-women.pdf

Barefoot, K. N., Rickard, A., Smalley, K. B., & Warren, J. C. (2015). Rural lesbians: Unique challenges and implications for mental health providers. Journal of Rural Mental Health, 39(1), 22–33. https://doi.org/10.1037/rmh0000014

Bender, A. K. (2016). Health care experiences of rural women experiencing intimate partner violence and substance abuse. Journal of Social Work Practice in the Addictions, 16(1–2), 202–221. https://doi.org/10.1080/1533256X.2015.1124783

Bennett, K. J., Lopes, J. E., Jr., Spencer, K., & van Hecke, S. (2013). Rural women’s health. National Rural Health Association Policy Brief. https://www.ruralhealthweb.org/getattachment/Advocate/Policy-Documents/RuralWomensHealth-(1).pdf.aspx

Broverman, I. K., Broverman, D. M., Clarkson, F. E., Rosenkrantz, P. S., & Vogel, S. R. (1970). Sex-role stereotypes and clinical judgments of mental health. Journal of Consulting and Clinical Psychology, 34(1), 1–7. https://doi.org/10.1037/h0028797

Brown, L. M., & Gilligan, C. (1992). Meeting at the crossroads: Women’s psychology and girls’ development. Harvard University Press.

Carlton, E., & Simmons, L. (2011). Health decision-making among rural women: Physician access and prescription adherence. Journal of Rural and Remote Health Research, Education, Practice and Policy, 11, 1–10.

Carpenter-Song, E., & Snell-Rood, C. (2017). The changing context of rural America: A call to examine the impact of social change on mental health and mental health care. Psychiatric Services, 68(5), 503–506. https://doi.org/10.1176/appi.ps.201600024

Council for Accreditation of Counseling and Related Educational Programs. (2015). 2016 CACREP standards. http://www.cacrep.org/for-programs/2016-cacrep-standards

Creswell, J. W., & Poth, C. N. (2018). Qualitative inquiry & research design: Choosing among five approaches (4th ed.). SAGE.

Doudna, K. D., Reina, A. S., & Greder, K. A. (2015). Longitudinal associations among food insecurity, depressive symptoms, and parenting. Journal of Rural Mental Health, 39(3–4), 178–187. https://doi.org/10.1037/rmh0000036

Enns, C. Z. (2017). Contemporary adaptations of traditional approaches to counseling women. In M. Kopala & M. Keitel (Eds.), Handbook of counseling women (2nd ed., pp. 51–62). SAGE.

Evans, K. M., Kincade, E. A., Marbley, A. F., & Seem, S. R. (2005). Feminism and feminist therapy: Lessons from the past and hopes for the future. Journal of Counseling & Development, 83(3), 269–277. https://doi.org/10.1002/j.1556-6678.2005.tb00342.x

Fifield, A. O., & Oliver, K. J. (2016). Enhancing the perceived competence and training of rural mental health practitioners. Journal of Rural Mental Health, 40(1), 77–83. https://doi.org/10.1037/rmh0000040

Gilligan, C. (1993). In a different voice: Psychological theory and women’s development. Harvard University Press. (Original work published 1982)

Gilligan, C., & Eddy, J. (2017). Listening as a path to psychological discovery: An introduction to the Listening Guide. Perspectives on Medical Education, 6, 76–81. https://doi.org/10.1007/s40037-017-0335-3

Heppner, P. P., Wampold, B. E., Owen, J., Thompson, M. N., & Wang, K. T. (2016). Research design in counseling (4th ed.). Cengage.

Hill, S. K., Cantrell, P., Edwards, J., & Dalton, W. (2016). Factors influencing mental health screening and treatment among women in a rural south central Appalachian primary care clinic. Journal of Rural Health, 32(1), 82–91. https://doi.org/10.1111/jrh.12134

Imig, A. (2014). Small but mighty: Perspectives of rural mental health counselors. The Professional Counselor, 4(4), 404–412. https://doi.org/10.15241/aii.4.4.404

Lloyd-Hazlett, J., Knight, C., Ogbeide, S., Trepal, H., & Blessing, N. (2020). Strengthening the behavioral health workforce: Spotlight on PITCH. The Professional Counselor, 10(3), 306–317. https://doi.org/10.15241/jlh.10.3.306

Morrow, S. L. (2005). Quality and trustworthiness in qualitative research in counseling psychology. Journal of Counseling Psychology, 52(2), 250–260. https://doi.org/10.1037/0022-0167.52.2.250

Morrow, S. L. (2007). Qualitative research in counseling psychology: Conceptual foundations. The Counseling Psychologist, 35(2), 209–235. https://doi.org/10.1177/0011000006286990

Moustakas, C. (1994). Phenomenological research methods. SAGE.

Mulder, P. L., & Lambert, W. (2006). Behavioral health of rural women: Challenges and stressors. In R. T. Coward, L. A. Davis, C. H. Gold, H. Smiciklas-Wright, L. E. Thorndyke, & F. W. Vondracek (Eds.), Rural women’s health: Mental, behavioral, and physical issues (pp. 15–30). Springer.

Mulder, P. L., Shellenberger, S., Streiegel, R., Jumper-Thurman, P., Danda, C. E., Kenkel, M. B., Constantine, M. G., Sears, S. F., Kalodner, M., & Hager, A. (2000). The behavioral healthcare needs of rural women. http://www.apa.org/practice/programs/rural/rural-women.pdf

Ponterotto, J. G. (2010). Qualitative research in multicultural psychology: Philosophical underpinnings, popular approaches, and ethical considerations. Cultural Diversity and Ethnic Minority Psychology, 16(4), 581–589. https://doi.org/10.1037/a0012051

Radunovich, H. L., Smith, S. R., Ontai, L., Hunter, C., & Cannella, R. (2017). The role of partner support in the physical and mental health of poor, rural mothers. Journal of Rural Mental Health, 41(4), 237–247. https://doi.org/10.1037/rmh0000077

Schwarz, J. (2017). Counseling women and girls: Introduction to empowerment feminist therapy. In J. E. Schwarz (Ed.), Counseling women across the life span: Empowerment, advocacy, and intervention (pp. 1–20). Springer.

Smalley, K. B., & Warren, J. C. (2014). Mental health in rural areas. In J. C. Warren & K. B. Smalley (Eds.), Rural public health: Best practices and preventive models (pp. 85–93). Springer.

Snell-Rood, C., Feltner, F., & Schoenberg, N. (2019). What role can community health workers play in connecting rural women with depression to the “de facto” mental health care system? Community Mental Health Journal, 55, 63–73. https://doi.org/10.1007/s10597-017-0221-9

Snell-Rood, C., Hauenstein, E., Leukefeld, C., Feltner, F., Marcum, A., & Schoenberg, N. (2017). Mental health treatment seeking patterns and preferences of Appalachian women with depression. American Journal of Orthopsychiatry, 87(3), 233–241. https://doi.org/10.1037/ort0000193

U.S. Census Bureau. (2010). 2010 census urban and rural classification and urban area criteria. http://census.gov/programs-surveys/geography/guidance/geo-areas/urban-rural/2010-urban-rural.html

U.S. Department of Agriculture. (2015). Rural America at a glance. https://www.ers.usda.gov/webdocs/publications/44015/55581_eib145.pdf?v=751.6

Van Montfoort, A., & Glasser, M. (2020). Rural women’s mental health: Status and need for services. Journal of Depression and Anxiety, 9(3), 1–7.

Watson, D. M. (2019). Counselor knows best: A grounded theory approach to understanding how working class, rural women experience the mental health counseling process. Journal of Rural Mental Health, 43(4), 150–163. https://doi.org/10.1037/rmh0000120

Weaver, A., & Gjesfjeld, C. (2014). Barriers to preventive services use for rural women in the southeastern United States. Social Work Research, 38(4), 225–234. https://doi.org/10.1093/swr/svu023

Weeks, L. E., Macquarrie, C., Begley, L., Gill, C., & Leblanc, K. D. (2016). Strengthening resources for midlife and older rural women who experience intimate partner violence. Journal of Women & Aging, 28(1), 46–57. https://doi.org/10.1080/08952841.2014.950500

Wong, A. (2017). Intersectionality: Understanding power, privilege, and the intersecting identities of women. In J. E. Schwarz (Ed.), Counseling women across the life span: Empowerment, advocacy, and intervention (pp. 39–56). Springer.

Appendix

Twelve Interview Questions

- Tell me about what comes to mind when you think about working with rural women.

- Tell me about where you grew up and how that has influenced your work with rural women.

- Tell me about how you began your work with rural women.

- What have you learned about rural women through your work with them?

- What are the unique mental health needs of rural women that you have seen in your work?

- Tell me about some of the benefits and rewards, if any, you have experienced working with rural women.

- Tell me about some of the challenges, if any, you have experienced working with rural women.

- How have your experiences working with rural women changed you as a mental health counselor?

- Tell me about any academic/classroom experiences in your graduate program that involved the mental health issues of rural women (e.g., class discussions, special projects, conversations with colleagues, internship experiences).

- Tell me about any training experiences post-graduation that have involved the mental health issues of rural women (e.g., workshops, conference presentations, webinars, conversations with colleagues).

- What would you like other counselors to know about working with rural women?

- Please describe how the artifact that you have chosen relates to your work with rural women.

Lisbeth A. Leagjeld, PhD, NCC, LCPC, LPC-MH, is a program liaison and faculty member at South Dakota State University – Rapid City. Phillip L. Waalkes, PhD, NCC, ACS, is an assistant professor and doctoral program coordinator at the University of Missouri – St. Louis. Maribeth F. Jorgensen, PhD, NCC, LPC, LMHC, LIMHP, is an assistant professor at Central Washington University. Correspondence may be addressed to Lisbeth A. Leagjeld, 4300 Cheyenne Blvd., Rapid City, SD 57709, Lisbeth.leagjeld@sdstate.edu.

Feb 26, 2021 | Volume 11 - Issue 1

David E. Jones, Jennifer S. Park, Katie Gamby, Taylor M. Bigelow, Tesfaye B. Mersha, Alonzo T. Folger

Epigenetics is the study of modifications to gene expression without an alteration to the DNA sequence. Currently there is limited translation of epigenetics to the counseling profession. The purpose of this article is to inform counseling practitioners and counselor educators about the potential role epigenetics plays in mental health. Current mental health epigenetic research supports that adverse psychosocial experiences are associated with mental health disorders such as schizophrenia, anxiety, depression, and addiction. There are also positive epigenetic associations with counseling interventions, including cognitive behavioral therapy, mindfulness, diet, and exercise. These mental health epigenetic findings have implications for the counseling profession such as engaging in early life span health prevention and wellness, attending to micro and macro environmental influences during assessment and treatment, collaborating with other health professionals in epigenetic research, and incorporating epigenetic findings into counselor education curricula that meet the standards of the Council for Accreditation of Counseling and Related Educational Programs (CACREP).

Keywords: epigenetics, mental health, counseling, prevention and wellness, counselor education

Epigenetics, defined as the study of chemical changes at the cellular level that alter gene expression but do not alter the genetic code (T.-Y. Zhang & Meaney, 2010), has emerging significance for the profession of counseling. Historically, people who studied abnormal behavior focused on determining whether the cause of poor mental health outcomes was either “nature or nurture” (i.e., either genetics or environmental factors). What we now understand is that both nature and nurture, or the interaction between the individual and their environment (e.g., neglect, trauma, substance abuse, diet, social support, exercise), can modify gene expression positively or negatively (Cohen et al., 2017; Suderman et al., 2014).

In the concept of nature and nurture, there is evidence that psychosocial experiences can change the landscape of epigenetic chemical tags across the genome. This change in landscape influences mental health concerns, such as addiction, anxiety, and depression, that are addressed by counseling practitioners (Lester et al., 2016; Provençal & Binder, 2015; Szyf et al., 2016). Because the field of epigenetics is evolving and there is limited attention to epigenetics in the counseling profession, our purpose is to inform counseling practitioners and educators about the role epigenetics may play in clinical mental health counseling.

Though many counselors and counselor educators may have taken a biology class that covered genetics sometime during their professional education, we provide pedagogical scaffolding from genetics to epigenetics. Care was taken to ensure accessibility of information for readers across this continuum of genetics knowledge. Much of what we offer below on genetics is putative knowledge, as we desire to establish a foundation for the reader in genetics so they may be able to have a greater understanding of epigenetics and a clearer comprehension of the implications we offer leading to application in counseling. We suggest readers review Brooker (2017) for more detailed information on genetics. We will present an overview of genetics and epigenetics, an examination of mental health epigenetics, and implications for the counseling profession.

Genetics

Genetics is the study of heredity (Brooker, 2017) and the cellular process by which parents pass on biological information via genes. The child inherits genetic coding from both parents. One can think of these parental genes as a recipe book for molecular operations such as the development of proteins, structure of neurons, and other functions across the human body. This total collection of the combination of genes in the human body is called the genome or genotype. The presentation of observable human traits (e.g., eye color, height, blood type) is called the phenotype. Phenotypes can be seen in our clinical work through behavior (e.g., self-injury, aggression, depression, anxiety, inattentiveness).

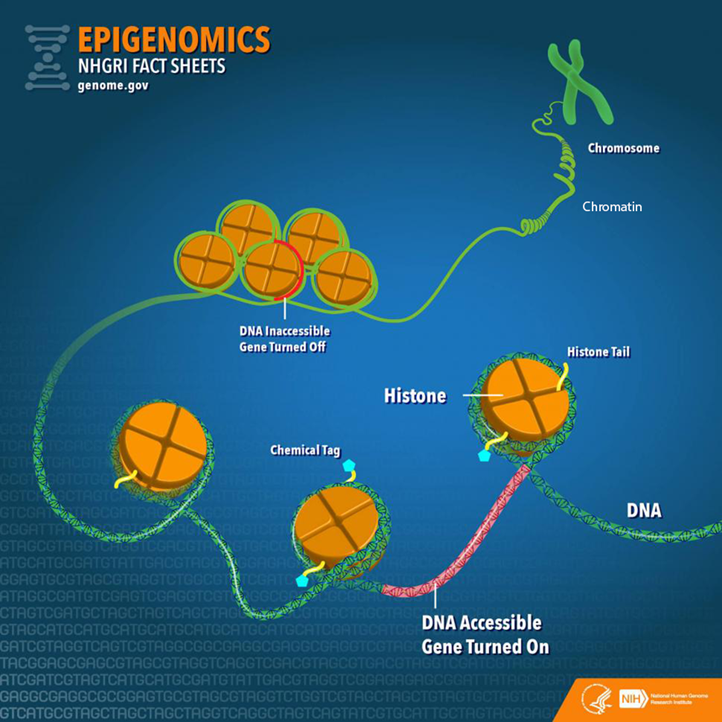

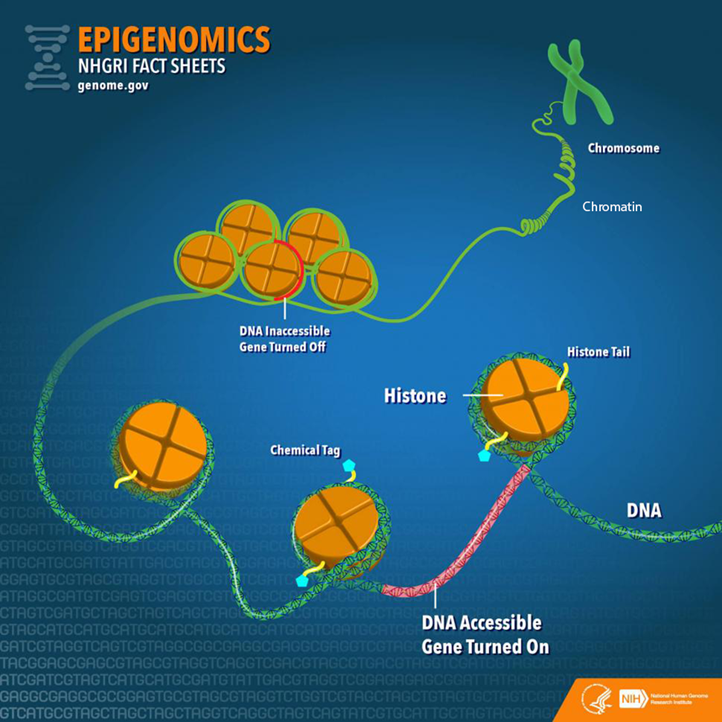

Before going further, it is important to establish a fundamental understanding of genetics by examining the varied molecular components and their relationships (Figure 1). Deoxyribonucleic acid (DNA) is a long-strand molecule that takes the famous double helix or ladder configuration. DNA is made up of four chemical bases called adenine (A), guanine (G), cytosine (C), and thymine (T). These form base pairs (A with T and C with G), creating a nucleic acid. The DNA is also wrapped around a specialized protein called a histone. The collection of DNA wrapped around multiple histones is called the chromatin. This wrapping process is essential for the DNA to fit within the cell nucleus. Finally, as this chromatin coils and condenses further, it forms a structure called a chromosome. Within every human cell nucleus, there are 23 chromosomes from each parent, totaling 46 chromosomes.

Figure 1

Gene Structure and Epigenetics

From “Epigenomics Fact Sheet,” by National Human Genome Research Institute, 2020 (https://www.genome.gov/about-genomics/fact-sheets/Epigenomics-Fact-Sheet). In the public domain.

Beyond the chromosomes, chromatin, histones, DNA, and genes, there is another key component in genetics: ribonucleic acid (RNA). RNA can be a cellular messenger that carries instructions from a DNA sequence (specific genes) to other parts of the cell (i.e., messenger RNA [mRNA]). RNA can come in several other forms as well, including transfer RNA (tRNA), microRNA (miRNA), and non-coding RNA (ncRNA). In the sections below, we elaborate on mRNA and tRNA and their impact on the genetic processes. Later in the epigenetics section, we provide fuller details on miRNA and ncRNA.

Besides the aforementioned biological aspects, it is important to understand that a child inherits genes from both parents, but they are not exactly the same genes (i.e., alternative forms of the same gene may have differing expression). Different versions of the same gene are called alleles. Variation in an allele is one reason why we see phenotypic variation between our clients (e.g., height, weight, eye color), and this variation can contribute to mental disease susceptibility. Although there are many potential causes of poor mental health, family history is often one of the strongest risk factors because family members most closely represent the unique genetic and environmental interactions that an individual may experience. We also see this as a function of intergenerational epigenetic effects, which are covered later in this paper.

Transcription and Translation

Now that we have provided a foundation of the genetic components, we move toward the primary two-stage process of gene expression: transcription and translation (Brooker, 2017). The first step in the process of gene expression is called transcription. Transcription occurs when a sequence of DNA is copied using RNA polymerase (the suffix “-ase” denotes an enzyme) to make mRNA for protein synthesis. We can liken transcription to the process of someone taking down information from a client’s voicemail message. In this visualization, DNA is the caller, the person writing down the message is the RNA polymerase, and the actual written message is the mRNA.

A particular section of a gene, called a promoter region, is bound by the RNA polymerase (Brooker, 2017). The RNA polymerase unzips the double-stranded DNA helix into two strands. One of the strands, called the template, is where the RNA polymerase reads the DNA code and pairs each base with its RNA complement (A with U, T with A, G with C, and C with G; RNA uses uracil [U] in place of thymine) to build mRNA. Other modifications must also occur in eukaryotic cells, such as splicing. In short, noncoding sections of the RNA transcript, called introns, are removed by the process of splicing, and the remaining coding sections, called exons, are joined back together.
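For readers who find a worked example helpful, the base-pairing and splicing steps can be sketched in a few lines of Python. This is a deliberately simplified illustration, not a biological model: it ignores strand direction and the enzymes involved, and the template sequence, the function names `transcribe` and `splice`, and the intron position are all hypothetical. Note that RNA uses uracil (U) where DNA uses thymine (T).

```python
# Simplified sketch of transcription and splicing (illustrative only).
# DNA template base -> complementary RNA base (RNA uses U instead of T).
COMPLEMENT = {"A": "U", "T": "A", "G": "C", "C": "G"}


def transcribe(template_strand: str) -> str:
    """Build the RNA complement of a DNA template strand, base by base."""
    return "".join(COMPLEMENT[base] for base in template_strand.upper())


def splice(pre_mrna: str, introns: list[tuple[int, int]]) -> str:
    """Remove intron spans given as (start, end) indices and join the exons."""
    exons = []
    position = 0
    for start, end in sorted(introns):
        exons.append(pre_mrna[position:start])  # keep the exon before this intron
        position = end                          # skip over the intron
    exons.append(pre_mrna[position:])           # keep the final exon
    return "".join(exons)


template = "TACGGCATT"                 # hypothetical DNA template strand
pre_mrna = transcribe(template)        # "AUGCCGUAA"
mrna = splice(pre_mrna, [(3, 6)])      # hypothetical intron at positions 3-5
print(pre_mrna, mrna)
```

In the voicemail analogy, `transcribe` plays the role of the person writing down the message, and `splice` corresponds to trimming the parts of the message that are not needed before passing it on.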

Now that the mRNA has been created by the process of transcription, the next step is for the mRNA to build a protein necessary for the main functions of the body, in a process known as translation (Brooker, 2017). Here, translation is the process in which tRNA decodes or translates the mRNA into a protein in a mobile cellular factory called the ribosome. It is translating the language of a DNA sequence (gene) into the language of a protein. To do this, the tRNA uses a translation device called an anticodon. This anticodon pairs with a complementary three-base sequence on the mRNA called a codon. A codon is a trinucleotide sequence of DNA or RNA that corresponds to a specific amino acid, or building block of a protein. This process then continues to translate and connect many amino acids together until a polypeptide (a long chain of amino acids) is created. Later, these polypeptides join to form proteins. Depending on the type of cell, the protein may function in a variety of ways. For example, the neuron has several proteins for its function, and different proteins are used for memory, learning, and neuroplasticity.
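The codon-by-codon reading described above can likewise be sketched as a simple lookup. The example below is a minimal illustration under stated assumptions: the table contains only a handful of the 64 codons in the standard genetic code, the mRNA string is hypothetical, and the function name `translate` is ours. It reads the message three bases at a time and stops at a stop codon.

```python
# Minimal sketch of translation (illustrative only).
# A small subset of the standard genetic code; None marks a stop codon.
CODON_TABLE = {
    "AUG": "Met",  # methionine, also the usual start codon
    "CCG": "Pro",  # proline
    "GGC": "Gly",  # glycine
    "UUU": "Phe",  # phenylalanine
    "UAA": None,
    "UAG": None,
    "UGA": None,
}


def translate(mrna: str) -> list[str]:
    """Read mRNA three bases at a time and return the amino acid chain."""
    polypeptide = []
    for i in range(0, len(mrna) - 2, 3):
        codon = mrna[i:i + 3]
        amino_acid = CODON_TABLE[codon]  # raises KeyError for codons not in this subset
        if amino_acid is None:           # stop codon: the chain is complete
            break
        polypeptide.append(amino_acid)
    return polypeptide


print(translate("AUGCCGGGCUAA"))  # hypothetical mRNA sequence
```

The dictionary lookup stands in for the matching of a tRNA anticodon to its codon; the returned list corresponds to the growing polypeptide chain.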

Epigenetics

There is a wealth of research conducted on genetics, yet the understanding of epigenetics is more limited when focusing on mental health (Huang et al., 2017). Though the term epigenetics has been around since the 1940s, the “science” of epigenetics is in its youth. Epigenetic research in humans has grown in the last 10 years and continues to expand rapidly (Januar et al., 2015). The key concept for counselors to remember about epigenetics is that epigenetics supports the idea of coaction. Factors present in the client’s external environment (e.g., stress from caregiver neglect, foods consumed, drug intake like cigarettes) influence the expression of their genes (transcription and translation) and thus cell activity and related behavioral phenotypes. In the sections below, we will dive deeper into the understanding of epigenetic mechanisms and define key terms including epigenome, chromatin, and chemical modifications.

To start, the more formal definition of epigenetics is the differentiation of gene expression via chemical modifications upon the epigenome that do not alter the genetic code (i.e., the DNA sequence; Szyf et al., 2007). The epigenome, which is composed of chromatin (the combination of DNA and protein forming the chromosomes) and modification of DNA by chemical mechanisms (e.g., DNA methylation, histone modification), programs the process of gene expression (Szyf et al., 2007). The epigenome differs from the genome in that the chemical actions or modifications are on the outside of the genome (i.e., the DNA) or “upon” the genome. Specifically, epigenetic processes act “upon” the genome, which may open or close the chromatin to various degrees to govern access for reading DNA sequences (Figure 1). When the chromatin is opened, transcription and translation can take place; however, when the chromatin is closed, gene expression is silenced (Szyf et al., 2007).

It is important for counselors to conceptualize their client’s psychosocial environment in conjunction with the observed behavioral phenotypes, in that the client’s psychosocial environment may have partially mediated epigenetic expression (Januar et al., 2015). For example, with schizophrenia, a client’s adverse environment (e.g., early childhood trauma) influences the epigenome, or gene expression, which may contribute up to 60% of this disorder’s development (Gejman et al., 2011). Other adverse environmental influences have been associated with the development of schizophrenia, including complications during the client’s prenatal development and birth, the place and season of the client’s birth, abuse, and parental loss (Benros et al., 2011). As we highlight below, epigenetic mechanisms (e.g., DNA methylation) may mediate between these environmental influences and genes, with outcomes like schizophrenia (Cariaga-Martinez & Alelú-Paz, 2018; Tsankova et al., 2007).

Epigenetic Mechanisms

There are a variety of chemical mechanisms or tags that change the chromatin structure (either opening for expression or closing to inhibit expression). Some of the most investigated mechanisms for changes in chromatin structure are DNA methylation, histone modification, and microRNA (Benoit & Turecki, 2010; Maze & Nestler, 2011).

DNA Methylation. Methylation is the most studied epigenetic modification (Nestler et al., 2016). It occurs when a methyl group binds to a cytosine base (C) of DNA to form 5-methylcytosine. A methyl group consists of three hydrogen atoms bonded to a carbon atom, written as CH3. Most often, the methyl group is attached to a C followed by a G, called a CpG site. These methylation changes are carried out by specific enzymes called DNA methyltransferases, which add the methyl group to the C base at the CpG site.
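
As a rough illustration of what a CpG site is, the short Python sketch below scans a DNA string for every C that is immediately followed by a G and then marks those cytosines as methylated. The function names and the lowercase `m` notation for 5-methylcytosine are our own illustrative assumptions and not a standard bioinformatics format; real methylation depends on enzymes and cellular context rather than on sequence alone.

```python
def find_cpg_sites(dna):
    """Return the 0-based positions of each cytosine that is followed by a guanine."""
    dna = dna.upper()
    return [i for i in range(len(dna) - 1) if dna[i:i + 2] == "CG"]


def methylate(dna, positions):
    """Return a copy of the sequence with the cytosine at each position shown as 'm'.

    The 'm' symbol is a hypothetical shorthand for 5-methylcytosine.
    """
    bases = list(dna.upper())
    for pos in positions:
        if bases[pos] != "C":
            raise ValueError(f"Position {pos} is not a cytosine")
        bases[pos] = "m"
    return "".join(bases)


sequence = "ATCGGCGTACG"
sites = find_cpg_sites(sequence)
print(sites)
print(methylate(sequence, sites))
```

The underlying DNA letters are unchanged apart from the added mark, which parallels the point that epigenetic modifications sit “upon” the genome without altering the genetic code.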

Methylation was initially considered irreversible, but recent research has shown that although DNA methylation is more stable than other chemical modifications like histone modification, it is nonetheless reversible (Nestler et al., 2016). This evidence of DNA methylation adaptability is important, conceivably supporting counseling efficacy across the life span. If methylation is indeed reversible beyond 0 to 5 years of age, counseling efforts hold promise to influence mental health outcomes across the life span.

Beyond noted stability, DNA methylation is also important in that it is tissue-specific, meaning it assists in cell differentiation; it may regulate gene expression up or down and is influenced by different environmental exposures (Monk et al., 2012). For example, DNA methylation represses specific areas of a neuron’s genes, thus “turning off” their function. This stabilizes the cell by preventing any tissue-specific cell differentiation and inhibits the neuron from changing into another cell type (Szyf et al., 2016), such as becoming a lung cell later in development.

When looking at up- or downregulation, Oberlander et al. (2008) provided an example from a study using mice. When examining attachment style in mice, they found that lower-quality mothering of offspring increased the risk of anxiety, in part because of methylation at the glucocorticoid receptor (GR) gene and fewer GR proteins produced by the hippocampus. This change may lead to lifelong silencing or downregulation, with an increased risk of anxiety for the mouse over its life span. Stevens et al. (2018) also established a link between diet and epigenetic mechanisms such as DNA methylation. They found an epigenetic connection between poor dietary intake and an increased risk of behavioral problems and poor mental health outcomes such as autism. The authors also remarked that further investigation is required for a clearer picture of this link and potential effects.

Histone Modification. Another process that has been extensively researched is post-translational histone modification, or changes in the histone after the translation process. The most understood histone modifications are acetylation, methylation, and phosphorylation (Nestler et al., 2016). Acetylation, the most common post-translational modification, occurs by adding an acetyl group to the histone tail, such as the amino acid lysine. The enzymes responsible for histone acetylation are histone acetyltransferases or HATs (Haggarty & Tsai, 2011). Conversely, histone deacetylases (HDACs) are enzymes that remove acetyl groups (Saavedra et al., 2016). The acetylation process promotes gene expression (Nestler et al., 2016).

Histone methyltransferases (HMTs) add methyl groups to histones, thereby reducing gene expression. Histone demethylases (HDMs) remove methyl groups to increase gene activity. Phosphorylation can increase or decrease gene expression. Overall, there are more than 50 known histone modifications (Nestler et al., 2016).

From a counseling perspective, it is important to note that histone modification is flexible. Unlike DNA methylation, which is more stable over a lifetime, histone modifications are more transient. To illustrate, if an acetyl group is added to a histone, it may loosen the binding between the DNA and histone, increasing transcription and thereby allowing gene expression across the life span (Nestler et al., 2016). Such acetylation processes have been observed in studies of maternal neglect of offspring (early in the life span) and of mindfulness practices in adult clients (Chaix et al., 2020; Devlin et al., 2010). Yet, although histone modification can be changed across the life span (Nestler et al., 2016), it is still important for counselors to recognize the importance of early counseling interventions because of how highly active epigenetic mechanisms (e.g., DNA methylation) are in children 0 to 5 years of age.

MicroRNA. Beyond histone modification, another known mechanism is microRNA (miRNA), which is the least understood and most recently investigated epigenetic mechanism when compared to DNA methylation and histone modification (Saavedra et al., 2016). miRNA is one type of non-coding RNA (ncRNA), or RNA that is not translated into proteins. Around 98% of the genome does not code for proteins, which supports the hypothesis that ncRNAs play a significant role in gene expression. For example, humans and chimpanzees share 98.8% of the same DNA code. However, epigenetics and specifically ncRNA contribute to the wide phenotypic variation between the species (Zheng & Xiao, 2016). Further, Zheng and Xiao (2016) estimated that miRNA regulates up to 60% of gene expression.

miRNA has also been found to suppress and activate gene expression at the levels of transcription and translation (Saavedra et al., 2016). miRNAs affect gene expression by directly influencing mRNA. Specifically, the miRNA may attach to mRNA and “block” the mRNA from creating proteins, or it may directly degrade mRNA, which decreases the surplus of mRNA in the cell. If the miRNA binds partially with the mRNA, then it inhibits protein production; but if it binds completely, the mRNA is marked for destruction. Once the mRNA is identified for destruction, other proteins and enzymes are attracted to the mRNA, and they degrade and eliminate it (Zheng & Xiao, 2016). Moreover, when compared to DNA methylation, which may be isolated to a single gene sequence, miRNA can target hundreds of genes (Lewis et al., 2005). Researchers have discovered that miRNA may mediate anxiety-like symptoms (Cohen et al., 2017).
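
The distinction between partial and complete binding can be made concrete with a small Python sketch. It is a deliberately simplified model built on our own assumptions: the miRNA and its target site are the same length, only standard A–U and G–C pairs count, and the cutoff separating “partial” binding from no binding (`PARTIAL_BINDING_THRESHOLD`) is a hypothetical value chosen for illustration, not an empirically derived one.

```python
# Standard RNA base pairs (G-U "wobble" pairing is ignored in this sketch).
PAIRS = {("A", "U"), ("U", "A"), ("G", "C"), ("C", "G")}

# Hypothetical cutoff for partial binding; chosen only for illustration.
PARTIAL_BINDING_THRESHOLD = 0.5


def paired_fraction(mirna, target_site):
    """Return the fraction of miRNA bases that pair with the mRNA target site.

    The two strands bind in opposite directions, so the target is reversed first.
    """
    if not mirna or len(mirna) != len(target_site):
        raise ValueError("miRNA and target site must be non-empty and equal in length")
    antiparallel_target = target_site[::-1]
    paired = sum((m, t) in PAIRS for m, t in zip(mirna, antiparallel_target))
    return paired / len(mirna)


def mirna_effect(mirna, target_site):
    """Classify the outcome as described in the text: degrade, block, or no effect."""
    fraction = paired_fraction(mirna, target_site)
    if fraction == 1.0:
        return "mRNA marked for degradation"
    if fraction >= PARTIAL_BINDING_THRESHOLD:
        return "translation blocked"
    return "no effect"


print(mirna_effect("UAGC", "GCUA"))  # every base pairs
print(mirna_effect("UAGC", "GCUU"))  # three of four bases pair
print(mirna_effect("AAAA", "AAAA"))  # no bases pair
```

Either outcome lowers the amount of protein produced from the targeted mRNA, which is how a single miRNA can dampen the expression of many genes at once.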

Human Development and Epigenetics

Over the life of an individual, there are critical or sensitive periods in which epigenetic modifications are more heavily influenced by environmental factors (Mulligan, 2016). Early life (ages 0 to 5 years) appears to be one of the most critical time periods when epigenetics is more active. An example of this is the Dutch Famine of 1944–45, also known as the Dutch Hunger Winter (Champagne, 2010; Szyf, 2009). The Nazis occupied the Netherlands and restricted food to the country, bringing about a famine. The individual daily caloric intake estimate varied between 400 and 1800 calories at the climax of the famine. Most notably, women who gave birth during this time experienced the impact of low maternal caloric intake, which impacted their child and the child’s health outcomes into adulthood. One discovery was that male children had a higher risk of adulthood obesity if their famine exposure occurred early in gestation than male children whose famine exposure occurred late in gestation. Findings suggested that fetuses who experienced restricted caloric intake during the development of their autonomic nervous system may have an increased risk of heart disease in adulthood. The findings of epigenetic mechanisms at work between mother and child during a famine are striking enough, yet epigenetic researchers have also discovered that epigenetic tags carry across generations, a phenomenon called genomic imprinting (Arnaud, 2010; Yehuda et al., 2016; T.-Y. Zhang & Meaney, 2010).

Genomic imprinting can be defined as the passing on of certain epigenetic modifications to the fetus by parents (Arnaud, 2010). It is allele-specific, and approximately half of the imprinting an offspring receives is from the mother. The imprinting mechanism marks certain areas, or loci, of offspring’s genes as active or repressed. For instance, the loci may exhibit increased or decreased methylation.

An imprinting example is evident in the IGF-2 (insulin-like growth factor II) gene and those fetuses exposed to the Dutch Hunger Winter (Heijmans et al., 2008). Sixty years after the famine, a decrease in DNA methylation on IGF-2 was found in adults with fetal exposure during the famine compared to their older siblings. Researchers also found these intergenerational imprinting effects associated with the grandchildren of women who were pregnant during the Dutch Hunger Winter. Similar imprinting is also apparent in Holocaust survivors (Yehuda et al., 2016) and children born to mothers who experienced PTSD from the World Trade Center collapse of 9/11 (Yehuda et al., 2005). These imprinting mechanisms are important for counselors to understand in that we see the interplay between the client and the environment across generations. The client becomes the embodiment of their environment at the cellular level. This is no longer the dichotomous “nature vs. nurture” debate but the passing on of biological effects from one generation to another through the interplay of nature and nurture.

Epigenetics and Mental Health Disorders

Now we turn our focus to the influence of epigenetics on the profession of counseling. What we do know is that epigenetic mechanisms (e.g., DNA methylation, histone modifications, miRNA) are associated with various mental health disorders. It is hypothesized that epigenetics contributes to the development of mental disorders after exposure to environmental stressors, such as traumatic life events, but it may also have positive effects based on salutary environments (Szyf, 2009; Yehuda et al., 2005). We will review only those mental health epigenetic findings that have significant implications relative to clinical disorders such as stress, anxiety, childhood maltreatment, depression, schizophrenia, and addiction. We will also offer epigenetic outcomes associated with treatment, including cognitive behavioral therapy (CBT; Roberts et al., 2015), meditation (Chaix et al., 2020), and antidepressants (Lüscher & Möhler, 2019).

Stress and Anxiety

Stress, especially during early life stages, causes long-term effects for neuronal pathways and gene expression (Lester et al., 2016; Palmisano & Pandey, 2017; Perroud et al., 2011; Roberts et al., 2015; Szyf, 2009; T.-Y. Zhang & Meaney, 2010). Currently, research supports the mediating effects of stress on epigenetics through DNA methylation, especially within the gestational environment (Lester & Marsit, 2018). DNA methylation has been associated with upregulation of the hypothalamic-pituitary-adrenal (HPA) axis, increasing anxiety symptoms (McGowan et al., 2009; Oberlander et al., 2008; Romens et al., 2015; Shimada-Sugimoto et al., 2015; Tsankova et al., 2007). DNA methylation has also been linked with increased levels of cortisol for newborns of depressed mothers. This points to an increased HPA stress response in the newborn (Oberlander et al., 2008). Ouellet-Morin et al. (2013) also looked at DNA methylation and stress. They conducted a longitudinal twin study on the effect of bullying on the serotonin transporter gene (SERT) for monozygotic twins and found increased levels of SERT DNA methylation in victims compared to their non-bullied monozygotic co-twin. Finally, Roberts et al. (2015) examined the effect of CBT on DNA methylation for children with severe anxiety, specifically testing changes in the FKBP5 gene. Although the results were not statistically significant, they may be clinically significant. Research participants with a higher DNA methylation on the FKBP5 gene had poorer response to CBT treatment.