Convergent and Divergent Validity of the Student Engagement in School Success Skills Survey

Elizabeth Villares, Kimberly Colvin, John Carey, Linda Webb, Greg Brigman, Karen Harrington

This study examines the convergent validity and divergent validity of the Student Engagement in School Success Skills (SESSS) survey. The SESSS is easy to administer (it takes fewer than 15 minutes to complete) and is used in schools to provide educators with useful information about students’ use of skills and strategies related to school success. A total of 4,342 fifth graders completed the SESSS; the Motivated Strategies for Learning Questionnaire (MSLQ) Cognitive Strategy Use, Self-Regulation, Self-Efficacy and Test Anxiety subscales; and the Self-Efficacy for Self-Regulated Learning (SESRL). The three subscales of the SESSS (Self-Direction of Learning, Support of Classmates’ Learning and Self-Regulation of Arousal) correlated highly with the MSLQ Cognitive Strategy Use and Self-Regulation subscales, moderately correlated with the Self-Efficacy subscale and the SESRL, and did not correlate with the MSLQ Test Anxiety subscale. Future research is needed before the SESSS subscales can be used as discriminable dimensions.

Keywords: school success, convergent validity, divergent validity, Student Engagement in School Success Skills survey, educators

For more than a decade, researchers have placed increased emphasis on evidence-based practice and a programmatic approach to school counseling (Carey, 2004; Green & Keys, 2001; Gysbers, 2004; Lapan, 2005; Myrick, 2003; Paisley & Hayes, 2003; Whiston, 2002, 2011). This emphasis from the school counseling profession reflects national initiatives. In 2001, the Institute of Education Sciences, the research arm of the U.S. Department of Education, was established to determine, through rigorous and relevant research, what interventions are effective and ineffective for improving student achievement and education outcomes. The What Works Clearinghouse (WWC), an initiative of the Institute of Education Sciences, was created in 2002 to identify studies that provide credible and reliable evidence of the effectiveness of education interventions. The purpose of WWC is to inform researchers, educators and policymakers of interventions designed to improve student outcomes.

The American School Counselor Association’s (ASCA, 2005) response to emerging national policy and initiatives included a call for school counselor-led interventions that contribute to increased student achievement as part of a comprehensive school counseling program. The need for more research to identify evidence-based interventions tying school counselors to improved student academic performance also surfaced in a school counseling Delphi study, which identified the most pressing research questions in the profession (Dimmitt, Carey, McGannon, & Henningson, 2005). The top priority cited by this Delphi study was the need to determine which school counseling interventions resulted in the greatest student achievement gains. In addition, five major reviews of school counseling research all discussed the need for more research to strengthen the link between school counselor interventions and student achievement (Brown & Trusty, 2005; Dimmitt, Carey, & Hatch, 2007; Whiston & Quinby, 2009; Whiston & Sexton, 1998; Whiston, Tai, Rahardja, & Eder, 2011). However, researchers continue to report limitations in school counseling outcome research. Among these limitations are conclusions drawn from studies that rely on nonstandardized outcome assessments. For instance, in a review of school counseling studies, Brown and Trusty (2005) concluded that school counseling research has been limited by the lack of valid and reliable instruments that measure the skills, strategies and personal attributes associated with academic and social/relationship success. More recently, Whiston et al. (2011) completed a meta-analytic examination of school counseling interventions and also identified the predominance of nonstandardized outcome assessments in school counseling research as a significant limitation.

These limitations continue to hinder the school counseling profession, given its goal of establishing evidence-based practices that link school counselor interventions to improved student outcomes. The current WWC Procedures and Standards Handbook (WWC, 2011) describes the procedures used to review studies that claim a particular intervention is effective in improving student outcomes. The handbook lists nine reasons why a study under review would fail to meet WWC standards for rigorous research; one of these reasons is a failure to use reliable and valid outcome measures.

While a few valid instruments have recently been developed to measure school counseling outcomes (Scarborough, 2005; Sink & Spencer, 2007; Whiston & Aricak, 2008), they do not measure student changes in knowledge and skills related to academic achievement. The Student Engagement in School Success Skills survey (SESSS; Carey, Brigman, Webb, Villares, & Harrington, 2013) was developed to measure student use of the skills and strategies that (a) were identified as most critical for long-term school success and (b) could be taught by school counselors through classroom guidance within the scope of the ASCA National Model. Continuing to evaluate the psychometric properties of the SESSS is important because school counselors have typically had no standardized way to measure these types of outcomes and tie them directly to their interventions. Previous studies of self-report measures of student metacognition indicate that it is feasible to develop such a measure for elementary-level students (Sperling, Howard, Miller, & Murphy, 2002; Yildiz, Akpinar, Tatar, & Ergin, 2009). The foundational concepts, skills and strategies of metacognition, as well as the social skills and self-management skills taught in the Student Success Skills (SSS) program, are developmentally appropriate for grades 4–10. The SESSS questions were developed to parallel the key strategies and skills taught in the SSS program. The instrument was tested for readability and is appropriate for grade 4 and above.

The SESSS is a self-report measure of students’ use of key skills and strategies that have been identified consistently over several decades as critically important to student success in school, as noted in large reviews of educational research literature (Hattie, Biggs, & Purdie, 1996; Masten & Coatsworth, 1998; Wang, Haertel, & Walberg, 1994a). Three skill sets have emerged from this research literature as common threads in contributing to student academic success and social competence: (a) cognitive and metacognitive skills such as goal setting, progress monitoring and memory skills; (b) social skills such as interpersonal skills, social problem solving, listening and teamwork skills; and (c) self-management skills such as managing attention, motivation and anger (Villares, Frain, Brigman, Webb, & Peluso, 2012). Additional research supporting these skills and strategies continues to build a coherent body of evidence that is useful in distinguishing successful students from students at risk of academic failure (Durlak, Weissberg, Dymnicki, Taylor, & Schellinger, 2011; Greenberg et al., 2003; Marzano, Pickering, & Pollock, 2001; Zins, Weissberg, Wang, & Walberg, 2004).

Linking school counseling programs and interventions to improved student outcomes has become increasingly important (Carey et al., 2013). One way for school counselors to demonstrate the impact of classroom guidance and small group counseling on achievement is to measure the impact of their interventions on intermediate variables associated with achievement. These intermediate variables include the cognitive, social and self-management skills and strategies described above. However, few instruments measure these fundamental learning skills and strategies.

The present article explores the convergent and divergent validity of the SESSS (Carey et al., 2013). The article builds upon previous research describing the SESSS item development and an exploratory factor analysis (Carey et al., 2013), as well as a recently completed confirmatory factor analysis (Brigman et al., 2014). The current findings contribute to establishing the SESSS as a valid instrument for measuring the impact of school counselor-led interventions on intermediate variables associated with improved student achievement.

Method

The SESSS data were collected within the context of a multiyear, large-scale, randomized controlled trial funded through the U.S. Department of Education’s Institute of Education Sciences. The purpose of the grant was to investigate the effectiveness of the SSS program (Brigman & Webb, 2010) with fifth graders from two large school districts (Webb, Brigman, Carey, & Villares, 2011). To guard against researcher bias, the authors hired data collectors to administer the SESSS, the Motivated Strategies for Learning Questionnaire (MSLQ) and the Self-Efficacy for Self-Regulated Learning (SESRL) scale, and they standardized the training and data collection process. The authors selected these particular surveys because each reflects, in a different way, factors known to be related to effective learning and because together they provide a range of measures, some theoretically related to the SESSS and some not.

Procedures

During the 2011–2012 academic year, graduate students enrolled in master’s-level Counselor Education programs at two universities were hired and trained in a one-day workshop to administer the SESSS, MSLQ and SESRL and to handle data collection materials. At the training, each data collector was assigned to five of the 60 schools across the two school districts. After obtaining approval from the university institutional review board and the school districts, research team members notified parents of fifth-grade students about the study via district call-home systems and sent home a letter explaining the study, its risks and benefits, its voluntary nature and how to decline participation. One month later, data collectors entered their assigned schools and participating classrooms to administer the study instruments. Before administering the instruments, each data collector read the student assent aloud. Students who gave their assent were instructed to place a precoded generic label at the top of their instrument; the data collector then read aloud the directions, each item and the possible response choices on the SESSS, MSLQ and SESRL. Following the Survey Data Collection Manual, each data collector distributed and collected the instruments and, after leaving the school building, returned all completed instruments to a district project coordinator. The data collector also noted any student absences and/or irregularities and confirmed that all procedures had been followed.

The district project coordinators were responsible for verifying that all materials were returned and secured in a locked cabinet until they were ready to be shipped to a partner university for data analysis. The coordinators gathered demographic information from the district databases and matched it to the participating fifth-grade students and the precoded instrument labels through a generic coding system (district #1–2, school #1–30, classroom #1–6, student #1–25). The coordinators then saved the demographic information in a password-protected and encrypted Excel spreadsheet on an external device and shipped it to a partner university for data analysis.
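The matching step relies only on the generic code ranges listed above. The article does not say how a code was printed on the label, so the following Python sketch assumes a hypothetical hyphen-separated format (for example, `1-07-3-12`) and uses hypothetical names (`StudentCode`, `match_demographics`). It illustrates how such labels could be validated and joined to demographic records; it is not the procedure the district coordinators actually used.

```python
from dataclasses import dataclass

# Code ranges from the generic coding system described in the text.
RANGES = {"district": (1, 2), "school": (1, 30), "classroom": (1, 6), "student": (1, 25)}


@dataclass(frozen=True)
class StudentCode:
    """Hypothetical in-memory form of one precoded instrument label."""
    district: int
    school: int
    classroom: int
    student: int

    def __post_init__(self):
        # Reject codes outside the documented ranges.
        for name, (low, high) in RANGES.items():
            value = getattr(self, name)
            if not low <= value <= high:
                raise ValueError(f"{name} {value} is outside {low}-{high}")

    def label(self) -> str:
        # Assumed printed format (hypothetical): district-school-classroom-student.
        return f"{self.district}-{self.school:02d}-{self.classroom}-{self.student:02d}"

    @classmethod
    def parse(cls, label: str) -> "StudentCode":
        parts = label.split("-")
        if len(parts) != 4:
            raise ValueError(f"malformed label: {label!r}")
        return cls(*(int(part) for part in parts))


def match_demographics(survey_labels, demographics):
    """Join survey labels to demographic records keyed by StudentCode.

    Returns (matched, unmatched): matched maps each label to its record,
    unmatched lists labels that have no demographic record.
    """
    matched, unmatched = {}, []
    for label in survey_labels:
        record = demographics.get(StudentCode.parse(label))
        if record is None:
            unmatched.append(label)
        else:
            matched[label] = record
    return matched, unmatched


if __name__ == "__main__":
    records = {StudentCode(1, 7, 3, 12): {"gender": "Female", "ell": False}}
    print(match_demographics(["1-07-3-12", "2-30-6-25"], records))
```

Validating the ranges when a label is parsed means that a mistyped label (for example, school 31) raises an error before the join instead of silently ending up unmatched.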

Participants

A total of 4,342 fifth-grade students in two large school districts completed the SESSS. The overall sample had the following demographic profile: (a) gender = 2,150 (49.52%) female and 2,192 (50.48%) male; (b) ethnicity = 149 (3.43%) Asian, 1,502 (34.59%) Black, 865 (19.92%) Hispanic, 18 (.42%) Native American, 125 (2.88%) Multiracial, 1,682 (38.74%) White, and 1 (.02%) no response; (c) socioeconomic status = 1,999 (46.04%) noneconomically disadvantaged and 2,343 (53.96%) economically disadvantaged; (d) disability = 3,677 (84.68%) nondisabled and 665 (15.32%) disabled; (e) 504 status = 4,155 (95.70%) non-504 and 187 (4.3%) 504; and (f) English language learners (ELL) = 3,999 (92.1%) non-ELL and 343 (7.9%) ELL. Demographic information for fifth-grade students in each school district is reported in Table 1.

Table 1

Fifth-Grade Student Participant Demographics by School District

| Demographic characteristic | Category | District 1 (n = 2,162) | District 2 (n = 2,180) |
|---|---|---|---|
| Gender | Female | 1,080 (49.90%) | 1,070 (49.10%) |
| | Male | 1,082 (50.10%) | 1,110 (50.90%) |
| Ethnicity | Asian | 89 (4.12%) | 60 (2.75%) |
| | Black | 899 (41.58%) | 603 (27.66%) |
| | Hispanic | 165 (7.63%) | 700 (32.11%) |
| | Native American | 7 (0.32%) | 11 (0.50%) |
| | Multiracial | 64 (2.95%) | 61 (2.80%) |
| | White | 938 (43.40%) | 744 (34.13%) |
| | No response | – | 1 (0.05%) |
| SES | Non-economically disadvantaged | 1,118 (51.71%) | 881 (40.41%) |
| | Economically disadvantaged | 1,044 (48.29%) | 1,299 (59.59%) |
| Disability | Nondisabled | 1,847 (85.43%) | 1,830 (83.94%) |
| | Disabled | 315 (14.57%) | 350 (16.06%) |
| 504 status | Non-504 | 2,108 (97.50%) | 2,047 (93.90%) |
| | 504 | 54 (2.50%) | 133 (6.10%) |
| English language learners | Non-ELL | 1,968 (91.03%) | 2,031 (93.17%) |
| | ELL | 194 (8.97%) | 149 (6.83%) |

Note. n = number of participating fifth-grade students in the district; SES = socioeconomic status; ELL = English language learners.
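The overall counts and percentages reported under Participants can be reproduced from the district columns of Table 1. The Python sketch below transcribes a subset of the table (gender, SES, disability and ELL status), checks that each characteristic's categories add up to the district n, and expresses the combined counts as percentages of all participants. The data layout and the function name `combine_districts` are illustrative only.

```python
# District counts transcribed from Table 1: (District 1, District 2).
DISTRICT_N = (2162, 2180)
TABLE_1 = {
    "Gender": {"Female": (1080, 1070), "Male": (1082, 1110)},
    "SES": {"Non-economically disadvantaged": (1118, 881),
            "Economically disadvantaged": (1044, 1299)},
    "Disability": {"Nondisabled": (1847, 1830), "Disabled": (315, 350)},
    "ELL": {"Non-ELL": (1968, 2031), "ELL": (194, 149)},
}


def combine_districts(table, district_n):
    """Return (total n, {characteristic: {category: (count, percent)}})."""
    total_n = sum(district_n)
    combined = {}
    for characteristic, categories in table.items():
        # The categories of each characteristic should cover every student in each district.
        for d, n in enumerate(district_n):
            column_sum = sum(counts[d] for counts in categories.values())
            if column_sum != n:
                raise ValueError(f"{characteristic}: District {d + 1} sums to {column_sum}, not {n}")
        combined[characteristic] = {
            category: (sum(counts), round(100 * sum(counts) / total_n, 2))
            for category, counts in categories.items()
        }
    return total_n, combined


if __name__ == "__main__":
    total, combined = combine_districts(TABLE_1, DISTRICT_N)
    print(f"N = {total}")
    for characteristic, categories in combined.items():
        for category, (count, pct) in categories.items():
            print(f"{characteristic:<10} {category:<32} {count:>5,} ({pct:.2f}%)")
```

Running the sketch gives, for example, 2,150 female students (49.52%) and 343 ELL students (7.90%), in line with the Participants paragraph.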

Instruments

Student Engagement in School Success Skills. The SESSS (Carey et al., 2013) was developed to measure the extent to which students use the specific strategies that researchers have shown to be related to enhanced academic achievement (Hattie et al., 1996; Masten & Coatsworth, 1998; Wang et al., 1994b). Survey items were written to assess students’ cognitive and metacognitive skills (e.g., goal setting, progress monitoring, memory skills), social skills (e.g., communication skills, social problem solving, listening, teamwork skills) and self-management skills (e.g., managing attention, motivation, anger). After the initial pool of items was developed, the items were reviewed by an expert panel of elementary educators and school counselors and subjected to a readability analysis using the Lexile Framework for Reading system (MetaMetrics, 2012). At each stage of review, minor changes were made to improve the clarity of several items.

Twenty-seven self-report items (plus six additional items used to control for response set) were assembled into a scale with the following directions: “Below is a list of things that some students do to help themselves do better in school. No one does all these things. No one does any of these things all the time. Please think back over the last two weeks and indicate how often you did each of these things in the last two weeks. Please follow along as each statement is read and circle the answer that indicates what you really did. Please do your best to be as accurate as possible. There are no right or wrong answers. We will not share your answers with your parents or teachers. We will not grade your answers.”

The response format included four options that reflected frequency of strategy use in the last two weeks: “I didn’t do this at all,” “I did this once,” “I did this two times” and “I did this three or more times.” This response format was not conducive to writing clear negatively worded items; therefore, six additional items were developed to help control for response set. Three of these additional items reflected strategies that elementary students were unlikely to use (e.g., searching the Internet for additional math problems to complete). Three items reflected strategies that elementary students were likely to use (e.g., asking a friend when homework was due), but they were not covered in the SSS program.

Based on a previous administration of the SESSS to 262 students in grades 4 through 8, Carey et al. (2013) reported an overall coefficient alpha of .91 for the 27-item scale, with coefficient alphas for individual grades ranging from .87 (fifth grade) to .95 (seventh grade). All items correlated well with the total scale (item-total correlations ranged from .34 to .63). Total scale scores were approximately normally distributed (M = 65.83, SD = 15.44).
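Coefficient alpha for a k-item scale is α = k/(k - 1) × (1 - Σσ²ᵢ/σ²ₜ), where σ²ᵢ is the variance of item i and σ²ₜ is the variance of the total score. The Python sketch below computes alpha and corrected item-total correlations, in which each item is correlated with the sum of the remaining items, for complete response data. The article does not say whether its item-total correlations were corrected, so that choice is an assumption. The toy data and function names are illustrative only.

```python
import math
from statistics import variance


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def cronbach_alpha(rows):
    """Coefficient alpha; rows are respondents, each a list of k item scores."""
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*rows))                  # one tuple of scores per item
    totals = [sum(row) for row in rows]       # total score per respondent
    item_var_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


def corrected_item_total(rows):
    """Correlate each item with the total of the remaining items."""
    totals = [sum(row) for row in rows]
    return [
        pearson(item, [t - v for t, v in zip(totals, item)])
        for item in zip(*rows)
    ]


if __name__ == "__main__":
    toy = [[1, 2], [2, 1], [3, 3], [4, 4]]    # four respondents, two items
    print(round(cronbach_alpha(toy), 3), corrected_item_total(toy))
```

Because alpha rises with the number of items, a short subscale would be expected to show a lower alpha than a long one even when the average inter-item correlation is similar.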

In addition, in an exploratory factor analysis of SESSS scores from 402 students in grades 4 through 6, Carey et al. (2013) found that a four-factor solution provided the best model of scale dimensionality, considering both the cleanness of the factor structure and the interpretability of the factors. These four factors reflected students’ Self-Management of Learning, Application of Learning Strategies, Support of Classmates’ Learning and Self-Regulation of Arousal. Self-Management of Learning and Application of Learning Strategies related closely to the categories of cognitive skills, metacognitive skills and the intentional self-regulation of cognitive processes. Support of Classmates’ Learning related closely to social skills that support classroom learning. Self-Regulation of Arousal related to the self-management of arousal and emotion that can interfere with effective learning. Although empirical study is required to determine how the SESSS factors actually relate to specific, previously established constructs, it is encouraging that the factor structure identified in this research corresponded with previous research.

In a confirmatory factor analysis study using SESSS scores from a diverse sample of almost 4,000 fifth-grade students, Brigman et al. (2014) found that although a four-factor model fit the data well, the Self-Management of Learning and Application of Learning Strategies factors correlated so highly (r = .90) as to be indistinguishable. The items associated with these two factors were combined, and the resulting three-factor model fit the data better. Brigman et al. (2014) suggested that the SESSS is best thought of as having three underlying factors: Self-Direction of Learning (which combines the original Self-Management of Learning and Application of Learning Strategies factors), Support of Classmates’ Learning and Self-Regulation of Arousal.

Based on factor loadings, Brigman et al. (2014) created three SESSS subscales. The Self-Direction of Learning subscale (19 items) reflects the students’ intentional use of cognitive and metacognitive strategies to promote their own learning. Typical items include the following: “After I failed to reach a goal, I told myself to try a new strategy and not to doubt my ability,” and “I tried to keep myself motivated by imagining what it would be like to achieve an important goal.” The Support of Classmates’ Learning subscale (six items) reflects the students’ intentional use of strategies to help classmates learn effectively. Typical items include the following: “I tried to help a classmate learn how to do something that was difficult for them to do,” and “I tried to encourage a classmate who was having a hard time doing something.” Finally, the Self-Regulation of Arousal subscale (three items) reflects students’ intentional use of strategies to control disabling anxiety and cope with stress. Typical items include the following: “I focused on slowing my breathing so I would feel less stressed,” and “I imagined being in a calm place in order to feel less stressed.”

Motivated Strategies for Learning Questionnaire. The MSLQ is a 55-item, student self-report instrument with five subscales that measure different aspects of students’ motivation, emotion, effort and strategy use (Pintrich & DeGroot, 1990). The different subscales of the MSLQ are designed to be used singly or in combination to fit the needs of a researcher (Duncan & McKeachie, 2005). Items were adapted from various instruments used to assess student motivation, cognitive strategy use and metacognition (e.g., Eccles, 1983; Harter, 1981; Weinstein, Schulte, & Palmer, 1987). The present study used the following four subscales of the MSLQ: Cognitive Strategy Use, Self-Regulation, Self-Efficacy and Test Anxiety.

The Cognitive Strategy Use subscale is composed of 13 items that reflect the use of different types of cognitive strategies (e.g., rehearsal, elaboration, organizational strategies) to support learning. Typical items include the following: “When I read material for class, I say the words over and over to myself to help me remember,” “When I study, I put important ideas into my own words” and “I outline the chapters in my book to help me study.” Pintrich and DeGroot (1990) reported that the Cognitive Strategy Use subscale is reliable (Cronbach’s alpha = .83).

The Self-Regulation subscale includes nine items that reflect metacognitive and effort management strategies that support learning. Typical items include the following: “I ask myself questions to make sure I know the material I have been studying” and “Even when study materials are boring I keep working until I finish.” Pintrich and DeGroot (1990) reported that the Self-Regulation subscale is reliable (Cronbach’s alpha = .74).

The Self-Efficacy subscale is composed of nine items that reflect students’ ratings of their level of confidence in their ability to do well in classroom work. Typical items include the following: “Compared with others in this class, I think I’m a good student” and “I know that I will be able to learn the material for this class.” Pintrich and DeGroot (1990) reported that the Self-Efficacy subscale is reliable (Cronbach’s alpha = .89).

The Test Anxiety subscale includes four items that reflect students’ ratings of their experience of disabling levels of anxiety associated with classroom tests and examinations. Typical items include the following: “I am so nervous during a test that I cannot remember facts I have learned” and “I worry a great deal about tests.” Pintrich and DeGroot (1990) reported that the Test Anxiety subscale is reliable (Cronbach’s alpha = .75).

Factor analyses indicated that these MSLQ subscales are related to different latent factors. Scores on the Cognitive Strategy Use subscale have been demonstrated to be related to grades on quizzes and examinations, grades on essays and reports, and overall class grades. Scores on the Self-Regulation subscale have been shown to be related to the above measures plus student performance on classroom seatwork assignments. Scores on the Self-Efficacy subscale have been demonstrated to be related to students’ grades on quizzes and examinations, grades for classroom seatwork assignments, grades on essays and reports, and overall class grades. Scores on the Test Anxiety subscale proved to be associated with lower levels of performance on classroom examinations and quizzes, as well as course grades (Pintrich & DeGroot, 1990).

Duncan and McKeachie (2005) reviewed the extensive research on the psychometric properties and research uses of the MSLQ. They concluded that the subscales are reliable, measure their target constructs and have been successfully used in numerous studies to measure student change after educational interventions targeting these constructs.

Self-Efficacy for Self-Regulated Learning scale. The SESRL was designed to measure students’ confidence in their abilities to perform self-regulatory strategies. It is a seven-item self-report instrument based on the Children’s Self-Efficacy Scale (Bandura, 2006; Usher & Pajares, 2008). Items (e.g., “How well can you motivate yourself to do schoolwork?”) reflect students’ judgments about their abilities to perform self-regulation strategies identified by teachers as being frequently used by students (Pajares & Valiante, 1999). The scale has been used successfully with older elementary students in a self-read format and with fourth graders in a read aloud administration format (Usher & Pajares, 2006). Cronbach’s alpha estimates of reliability have ranged between .78 and .84 (Britner & Pajares, 2006; Pajares & Valiante, 2002; Usher & Pajares, 2008). Factor analysis has suggested that the scale is unidimensional. Concurrent validity studies have indicated that the scale is related to measures of self-efficacy, task orientation and achievement (Usher & Pajares, 2006).

Data Analysis

In the initial analysis of the three SESSS subscales, the authors handled missing survey responses with mean imputation, replacing each missing response with the overall mean for that item. For each of the 33 SESSS items, only 8.3%–9.1% of the responses were missing. Mean imputation is appropriate when less than 10% of the data are missing and the data can be considered missing at random (Longford, 2005). In the current study, students with missing survey data had an average SESSS score equal to that of students with a complete response set, supporting the assumption that the data were missing at random. Coefficient alpha, used as a measure of reliability, was calculated for each subscale before missing values were replaced.
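The missing-data steps described above can be sketched in Python as follows, with `None` marking a missing response. The sketch computes per-item missing rates, replaces each missing response with that item's observed mean, and compares students with and without missing responses. Several details are assumptions made for illustration, because the article does not describe its software or how incomplete records were scored: scoring a partially completed survey by the mean of the answered items, the default 10% guard, and all function names.

```python
def missing_rates(rows):
    """Proportion of missing (None) responses for each item."""
    k = len(rows[0])
    return [sum(row[j] is None for row in rows) / len(rows) for j in range(k)]


def item_means(rows):
    """Mean of the observed responses for each item."""
    means = []
    for j in range(len(rows[0])):
        observed = [row[j] for row in rows if row[j] is not None]
        means.append(sum(observed) / len(observed))
    return means


def mean_impute(rows, max_rate=0.10):
    """Replace each missing response with its item mean.

    Refuses to impute if any item exceeds max_rate missing, mirroring the
    under-10% condition cited in the text.
    """
    worst = max(missing_rates(rows))
    if worst > max_rate:
        raise ValueError(f"an item is {worst:.1%} missing; mean imputation not advised")
    means = item_means(rows)
    return [[means[j] if v is None else v for j, v in enumerate(row)] for row in rows]


def compare_missing_vs_complete(rows):
    """Average per-student score (mean of answered items), complete vs. partial records."""
    def person_mean(row):
        answered = [v for v in row if v is not None]
        return sum(answered) / len(answered)

    complete = [person_mean(r) for r in rows if None not in r]
    partial = [person_mean(r) for r in rows
               if None in r and any(v is not None for v in r)]
    return sum(complete) / len(complete), sum(partial) / len(partial)


if __name__ == "__main__":
    data = [[1, 2, 3], [3, None, 1], [2, 2, None], [None, 4, 4], [2, 3, 3]]
    print(missing_rates(data))
    print(compare_missing_vs_complete(data))
    print(mean_impute(data, max_rate=0.25)[1])
```

A similar average score in the two groups is consistent with data missing at random, but such a comparison cannot by itself establish it.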

Both convergent and discriminant evidence are needed in the validation process (Campbell & Fiske, 1959; Messick, 1993). Messick (1993) argued that although convergent evidence is important, it can mask certain problems. For example, if none of the tests of a construct measures a particular facet of that construct, the tests could still all correlate highly with one another. Likewise, if all tests of a construct share some particular form of construct-irrelevant variance, they may correlate even more strongly because of that shared variance. Because of these possible shortcomings of convergent evidence, discriminant evidence is needed to show that the test is not correlated with another construct that could account for misleading convergent evidence.

To evaluate the validity of the three SESSS subscales, the authors examined the correlations between each subscale and five other measures: four subscales of the MSLQ (Self-Efficacy, Cognitive Strategy Use, Self-Regulation and Test Anxiety) and the SESRL. Specifically, the authors considered the strength and direction of these correlations.

Results

Descriptive statistics and reliability estimates for the instruments used in this study are presented in Table 2. Coefficient alphas for the three SESSS subscales (Self-Direction of Learning, Support of Classmates’ Learning and Self-Regulation of Arousal) were 0.89, 0.79 and 0.68, respectively, and 0.90 for the SESSS as a whole. These results indicate good internal consistency (i.e., the items within each scale tend to measure a common construct).

All correlations between pairs of scales appear in Table 3. Because of the large sample size in this study, statistical significance alone could be misleading, so the authors based their interpretations on the magnitude and direction of the correlations. A correlation coefficient is itself an effect size reflecting the degree of association between two variables (Ellis, 2010). The correlations among the three SESSS subscales ranged from .47 to .70, which suggests that the subscales measured related but discriminable dimensions of students’ success skill use. In assessing the convergent validity of these three subscales, it is helpful to focus first on the scales that correlated most highly with them. The three SESSS subscales followed the same pattern with respect to strength of correlation: all three correlated most highly with the Cognitive Strategy Use and Self-Regulation subscales of the MSLQ. These two subscales measure students’ reported use of cognitive and metacognitive strategies associated with effort management and effective learning.
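The point about sample size can be made concrete. For a Pearson correlation r computed from n cases, the test statistic is t = r·√(n - 2) / √(1 - r²). The Python sketch below applies that formula with n = 4,342 and reports r² as the proportion of shared variance. To keep the sketch dependency-free, it approximates the t distribution with the normal distribution, a simplifying assumption that is reasonable when the degrees of freedom run into the thousands. The function names are illustrative.

```python
import math

N = 4342  # students who completed the SESSS


def r_to_t(r, n):
    """t statistic for testing H0: rho = 0."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


def two_sided_p(t):
    """Two-sided p-value from a normal approximation to t (large df)."""
    return math.erfc(abs(t) / math.sqrt(2))


def critical_r(z, n):
    """Smallest |r| whose t statistic reaches z (solves t = z for r)."""
    return z / math.sqrt(n - 2 + z * z)


if __name__ == "__main__":
    z_01 = 2.5758  # two-sided critical value for p < .01
    print(f"|r| needed for p < .01 with n = {N}: {critical_r(z_01, N):.3f}")
    for r in (0.54, 0.12, 0.08, -0.02):  # values taken from Table 3
        t = r_to_t(r, N)
        print(f"r = {r:+.2f}  r^2 = {r * r:.4f}  t = {t:6.2f}  p = {two_sided_p(t):.2g}")
```

With n = 4,342, any |r| of about .039 or larger is significant at p < .01. The r = .08 between Self-Regulation of Arousal and Test Anxiety (t ≈ 5.3, under 1% shared variance) is therefore statistically significant yet trivial in magnitude, whereas r = -.02 (t ≈ -1.3) is not significant.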

Table 2

Descriptive Statistics and Reliability Estimates for the Study Scales

| Instrument | Scale | M | SD | Alpha |
|---|---|---|---|---|
| SESSS | Self-Direction of Learning | 48.6 | 10.88 | 0.89 |
| | Support of Classmates’ Learning | 16.6 | 4.28 | 0.79 |
| | Self-Regulation of Arousal | 7.9 | 2.60 | 0.68 |
| MSLQ | Self-Efficacy | 28.3 | 4.41 | 0.83 |
| | Cognitive Strategy Use | 48.6 | 8.31 | 0.82 |
| | Self-Regulation | 31.2 | 5.08 | 0.75 |
| | Test Anxiety | 8.8 | 4.03 | 0.79 |
| SESRL | Self-Efficacy for Self-Regulated Learning | 31.2 | 6.10 | 0.83 |

Note. SESSS = Student Engagement in School Success Skills; MSLQ = Motivated Strategies for Learning Questionnaire; SESRL = Self-Efficacy for Self-Regulated Learning.

Table 3

Correlations Between Scales

| Instrument | Scale | SDL | SCL | SRA | SE | CSU | SR | TA | SESRL |
|---|---|---|---|---|---|---|---|---|---|
| SESSS | SDL | – | 0.70* | 0.58* | 0.28* | 0.54* | 0.53* | -0.02 | 0.44* |
| | SCL | | – | 0.47* | 0.25* | 0.39* | 0.39* | -0.02 | 0.35* |
| | SRA | | | – | 0.12* | 0.32* | 0.30* | 0.08* | 0.24* |
| MSLQ | SE | | | | – | 0.61* | 0.54* | -0.38* | 0.67* |
| | CSU | | | | | – | 0.70* | -0.20* | 0.68* |
| | SR | | | | | | – | -0.25* | 0.70* |
| | TA | | | | | | | – | -0.35* |
| SESRL | SESRL | | | | | | | | – |

Note. SESSS = Student Engagement in School Success Skills survey; MSLQ = Motivated Strategies for Learning Questionnaire; SESRL = Self-Efficacy for Self-Regulated Learning scale; SDL = Self-Direction of Learning; SCL = Support of Classmates’ Learning; SRA = Self-Regulation of Arousal; SE = Self-Efficacy; CSU = Cognitive Strategy Use; SR = Self-Regulation; TA = Test Anxiety.

*p < .01.

The three SESSS subscales correlated next most strongly with the SESRL, which measures students’ beliefs in their capability to use common, effective self-regulated learning strategies. Again, all three SESSS subscales followed the same pattern, showing a considerable drop in correlation with the MSLQ Self-Efficacy subscale, which measures students’ reports of general academic self-efficacy. Although smaller, the correlations with the Self-Efficacy subscale (which ranged from .12 to .28) were still meaningfully different from 0. With every comparison measure except Test Anxiety, the Self-Direction of Learning subscale had the strongest correlation, followed by the Support of Classmates’ Learning subscale and then the Self-Regulation of Arousal subscale. This pattern is evidence that the three SESSS subscales, the Cognitive Strategy Use and Self-Regulation subscales of the MSLQ, and the SESRL all measure a common dimension of an underlying construct, probably relating to students’ intentional use of strategies to promote effective learning.
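The pattern claims in this paragraph can be checked directly against Table 3. The Python sketch below transcribes each SESSS subscale's correlations with the comparison measures and ranks them in two directions: the measures within each subscale, and the subscales for each measure. The dictionary layout and function names are illustrative. Tied correlations keep their table order because Python's sort is stable.

```python
# Correlations of each SESSS subscale with the comparison measures (Table 3).
TABLE_3 = {
    "SDL": {"SE": 0.28, "CSU": 0.54, "SR": 0.53, "TA": -0.02, "SESRL": 0.44},
    "SCL": {"SE": 0.25, "CSU": 0.39, "SR": 0.39, "TA": -0.02, "SESRL": 0.35},
    "SRA": {"SE": 0.12, "CSU": 0.32, "SR": 0.30, "TA": 0.08, "SESRL": 0.24},
}


def rank_measures(correlations):
    """Comparison measures ordered from strongest to weakest correlation."""
    # sorted() is stable even with reverse=True, so ties keep table order.
    return sorted(correlations, key=correlations.get, reverse=True)


def rank_subscales(table, measure):
    """SESSS subscales ordered by their correlation with one measure."""
    return sorted(table, key=lambda subscale: table[subscale][measure], reverse=True)


if __name__ == "__main__":
    for subscale, correlations in TABLE_3.items():
        print(subscale, rank_measures(correlations))
    for measure in ("CSU", "SR", "SESRL", "SE", "TA"):
        print(measure, rank_subscales(TABLE_3, measure))
```

Every subscale ranks the measures identically (CSU, SR, SESRL, SE, TA). For every measure except Test Anxiety, the subscale order is SDL, SCL, SRA, which is the shared pattern described above.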

The Self-Regulation of Arousal subscale was the only one of the three SESSS subscales to correlate significantly with the MSLQ Test Anxiety subscale. Conceptually, students’ ability to self-regulate arousal should be related to their reported level of test anxiety. However, the relationship was weak (r = 0.08), and the observed correlation was positive, opposite in direction to the expected negative relationship, so this finding should not be given undue weight. The results of this study offer little to no evidence that the three SESSS subscales measure the same construct as the MSLQ Test Anxiety subscale. This weak or nonexistent relationship is discriminant evidence: none of the SESSS subscales correlated strongly with the MSLQ Test Anxiety subscale. By contrast, the three other MSLQ subscales and the SESRL all showed moderate negative correlations with the MSLQ Test Anxiety subscale. These differing patterns of correlation with MSLQ Test Anxiety suggest that the SESSS subscales capture a dimension different from the common dimension of the underlying construct measured by the MSLQ subscales (including the Test Anxiety subscale) and the SESRL.

Discussion

The observed pattern of results is useful in determining the validity of inferences that can currently be made from SESSS scores. Based on prior factor analytic studies (Brigman et al., 2014; Carey et al., 2013), the present authors attempted to create three SESSS subscales from the items that load most strongly on each of three underlying factors. These three SESSS subscales showed good internal consistency and moderate intercorrelations, suggesting that they most probably measure related but discriminable dimensions of students’ success skill use. However, the three SESSS subscales showed essentially the same pattern of correlation with the comparison scales. The pattern of results also suggests that there is little or no overlap between the construct measured by the three SESSS subscales and the construct measured by the Test Anxiety subscale of the MSLQ.
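Internal consistency of subscales like these is commonly summarized with Cronbach’s alpha, α = k/(k − 1) · (1 − Σσ²ᵢ/σ²ₜ), where k is the number of items, σ²ᵢ is the variance of item i, and σ²ₜ is the variance of the subscale total. This passage does not restate which coefficient or software the authors used, so the following Python sketch is illustrative only; the responses and the 1–4 response scale are hypothetical and do not come from the SESSS data.

```python
from statistics import pvariance

def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha for one subscale.

    `items` holds one list per item; each inner list gives that item's
    responses, one per student, in the same student order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Each student's subscale total (zip(*items) yields one tuple per student).
    totals = [sum(student) for student in zip(*items)]
    item_variance_sum = sum(pvariance(item) for item in items)
    # Population variances are used throughout; the n/(n - 1) correction
    # would cancel in the ratio, so sample variances give the same alpha.
    return (k / (k - 1)) * (1 - item_variance_sum / pvariance(totals))

# Hypothetical responses: 3 items x 5 students on a 1-4 scale.
items = [
    [1, 2, 3, 3, 4],
    [2, 2, 3, 4, 4],
    [1, 3, 3, 3, 4],
]
print(f"alpha = {cronbach_alpha(items):.3f}")
```

Alpha rises as items covary more strongly relative to their individual variances, which is why it is read as an index of how consistently a set of items measures one dimension.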

Unfortunately, these results do not shed much light on any differences among the SESSS subscales. Each subscale showed essentially the same pattern of correlations with the comparison scales, even where differences would have been expected. For example, the authors expected the SESSS Self-Regulation of Arousal subscale to correlate substantially and negatively with the MSLQ Test Anxiety subscale, since students who are better able to regulate their levels of emotional arousal would be expected to experience less test anxiety.

These results suggest that the SESSS as a whole is a valid measure of students’ intentional use of strategies to promote academic success. Although prior factor analytic studies (Brigman et al., 2014; Carey et al., 2013) have suggested that the SESSS has three related dimensions, inferences based on the three corresponding SESSS subscales are not warranted. Instead, until evidence shows that these three subscales measure discriminable dimensions of success skill use, the SESSS should be used as a unitary measure in research and practice. Future research is needed to determine whether the subscales can support separate interpretation.

Conclusion

Researchers have thoroughly documented the need for school counselors to demonstrate their impact on student achievement (Brown & Trusty, 2005; Dimmitt et al., 2007; Whiston & Quinby, 2009; Whiston & Sexton, 1998; Whiston et al., 2011). School counselor-led interventions that provide evidence of improving student performance remain at the top of national initiatives and research agendas (ASCA, 2005; Dimmitt et al., 2005). However, few standardized outcome assessments specifically tied to school counselor interventions are available to evaluate changes in student knowledge, skills and attitudes related to academic achievement.

The SESSS is easy to administer (it takes fewer than 15 minutes to complete), and educators use it in schools to gain valuable information about students’ use of skills and strategies related to school success. Results on the SESSS may be used to improve the implementation of school counselor-led interventions and the reinforcement of specific skills in school and home settings. Current findings indicate that SESSS results should be interpreted as a whole rather than by subscale. SESSS results can be used to monitor student progress and to identify gaps in learning, as well as factors affecting student behavior.

The SESSS may be used as a screening tool to identify students in need of school counseling interventions and to evaluate student growth in the academic and behavioral domains. A review of SESSS student data may reveal gaps between student groups and identify the need for additional educational opportunities, as well as lead to decisions about future goals of the school counseling program and discussions with administration and staff about program improvement (Carey et al., 2013). Finally, SESSS student data can be used to demonstrate how school counselors can impact student academic and personal/social development related to classroom learning and achievement. SESSS results can be shared with various stakeholders through a variety of report formats (e.g., Web sites, handouts, newsletters), publications, or presentations at the local, regional or national level to document the school counselor’s ability to affect the student outcomes of greatest concern to parents, administrators and other staff (Carey et al., 2013).

There is one limitation of the study worth noting. Although the sample for the current analysis is large and diverse, all participants came from a single grade level (fifth grade) and two public school districts. Future analyses should include students from various elementary and secondary settings and grade levels.

Future research on the psychometric properties of the SESSS should include studies that address (a) the reliability and intercorrelations of the assessments corresponding to the three SESSS subscales and (b) the predictive validity that establishes the relationships between SESSS subscales and measures of academic success (e.g., achievement test scores, grades, teacher ratings). These additional studies are necessary to firmly establish the utility of the SESSS as a reliable and valid measure of student success skills.

Conflict of Interest and Funding Disclosure

The authors reported no conflict of interest or funding contributions for the development of this manuscript.

References

American School Counselor Association. (2005). The ASCA national model: A framework for school counseling programs (2nd ed.). Alexandria, VA: Author.

Bandura, A. (2006). Guide for constructing self-efficacy scales. In F. Pajares & T. Urdan (Eds.), Self-efficacy beliefs of adolescents (pp. 307–337). Greenwich, CT: Information Age.

Brigman, G., & Webb, L. (2010). Student Success Skills: Classroom manual (3rd ed.). Boca Raton, FL: Atlantic Education Consultants.

Brigman, G., Wells, C., Webb, L., Villares, E., Carey, J. C., & Harrington, K. (2014). Psychometric properties and confirmatory factor analysis of the Student Engagement in School Success Skills. Measurement and Evaluation in Counseling and Development. Advance online publication. doi:10.1177/0748175614544545

Britner, S. L., & Pajares, F. (2006). Sources of science self-efficacy beliefs of middle school students. Journal of Research in Science Teaching, 43, 485–499.

Brown, D., & Trusty, J. (2005). School counselors, comprehensive school counseling programs, and academic achievement: Are school counselors promising more than they can deliver? Professional School Counseling, 9, 1–8.

Campbell, D. T., & Fiske, D. W. (1959). Convergent and discriminant validation by the multitrait-multimethod matrix. Psychological Bulletin, 56, 81–105.

Carey, J. C. (2004). Does implementing a research-based school counseling curriculum enhance student achievement? Amherst, MA: Center for School Counseling Outcome Research. Retrieved from http://www.umass.edu/schoolcounseling/uploads/ResearchBrief2.3.pdf

Carey, J., Brigman, G., Webb, L., Villares, E., & Harrington, K. (2013). Development of an instrument to measure student use of academic success skills: An exploratory factor analysis. Measurement and Evaluation in Counseling and Development, 47, 171–180. doi:10.1177/0748175613505622

Dimmitt, C., Carey, J. C., & Hatch, T. (2007). Evidence-based school counseling: Making a difference with data-driven practices. Thousand Oaks, CA: Corwin Press.

Dimmitt, C., Carey, J. C., McGannon, W., & Henningson, I. (2005). Identifying a school counseling research agenda: A Delphi study. Counselor Education and Supervision, 44, 214–228. doi:10.1002/j.1556-6978.2005.tb01748.x

Duncan, T. G., & McKeachie, W. J. (2005). The making of the Motivated Strategies for Learning Questionnaire. Educational Psychologist, 40, 117–128.

Durlak, J. A., Weissberg, R., Dymnicki, A. B., Taylor, R. D., & Schellinger, K. B. (2011). The impact of enhancing students’ social and emotional learning: A meta-analysis of school-based universal interventions. Child Development, 82, 405–432. doi:10.1111/j.1467-8624.2010.01564.x

Eccles, J. (1983). Expectancies, values and academic behaviors. In J. T. Spence (Ed.), Achievement and achievement motives: Psychological and sociological approaches (pp. 75–146). San Francisco, CA: W.H. Freeman.

Ellis, P. D. (2010). The essential guide to effect sizes: Statistical power, meta-analysis, and the interpretation of research results. Leiden, Netherlands: Cambridge University Press.

Green, A., & Keys, S. (2001). Expanding the developmental school-counseling paradigm: Meeting the needs of the 21st century student. Professional School Counseling, 5, 84–95.

Greenberg, M. T., Weissberg, R. P., O’Brien, M. U., Zins, J. E., Fredericks, L., Resnik, H., & Elias, M. J. (2003). Enhancing school-based prevention and youth development through coordinated social, emotional, and academic learning. American Psychologist. Special Issue: Prevention that Works for Children and Youth, 58, 466–474.

Gysbers, N. C. (2004). Comprehensive guidance and counseling programs: The evolution of accountability. Professional School Counseling, 8, 1–14.

Harter, S. (1981). A new self-report scale of intrinsic versus extrinsic orientation in the classroom: Motivational and informational components. Developmental Psychology, 17, 300–312.

Hattie, J., Biggs, J., & Purdie, N. (1996). Effects of learning skills interventions on student learning: A meta-analysis. Review of Educational Research, 66(2), 99–136. doi:10.3102/00346543066002099

Lapan, R. (2005). Evaluating school counseling programs. In C. A. Sink (Ed.), Contemporary school counseling: Theory, research, and practice (pp. 257–293). Boston, MA: Houghton Mifflin.

Longford, N. T. (2005). Missing data and small-area estimation: Modern analytical equipment for the survey statistician. New York, NY: Springer.

Marzano, R. J., Pickering, D., & Pollock, J. E. (2001). Classroom instruction that works: Research-based strategies for increasing student achievement. Alexandria, VA: Association for Supervision and Curriculum Development.

Masten, A. S., & Coatsworth, J. D. (1998). The development of competence in favorable and unfavorable environments: Lessons from research on successful children. American Psychologist, 53, 205–220.

Messick, S. (1993). Validity. In R. L. Linn (Ed.), Educational measurement (3rd ed., pp. 13–100). Phoenix, AZ: American Council on Education/Oryx.

MetaMetrics, Inc. (2012). The Lexile® framework for reading. Retrieved from http://www.lexile.com

Myrick, R. D. (2003). Accountability: Counselors count. Professional School Counseling, 6, 174–179.

Paisley, P. O., & Hayes, R. L. (2003). School counseling in the academic domain: Transformations in preparation and practice. Professional School Counseling, 6, 198–204.

Pajares, F., & Valiante, G. (1999). Grade level and gender differences in the writing self-beliefs of middle school students. Contemporary Educational Psychology, 24, 390–405. doi:10.1006/ceps.1998.0995

Pajares, F., & Valiante, G. (2002). Students’ self-efficacy in their self-regulated learning strategies: A developmental perspective. Psychologia, 45, 211–221.

Pintrich, P. R., & De Groot, E. V. (1990). Motivational and self-regulated learning components of classroom academic performance. Journal of Educational Psychology, 82, 33–40.

Scarborough, J. L. (2005). The School Counselor Activity Rating Scale: An instrument for gathering process data. Professional School Counseling, 8, 274–283.

Sink, C. A., & Spencer, L. R. (2007). Teacher version of the My Class Inventory–Short Form: An accountability tool for elementary school counselors. Professional School Counseling, 11, 129–139.

Sperling, R. A., Howard, B. C., Miller, L. A., & Murphy, C. (2002). Measures of children’s knowledge and regulation of cognition. Contemporary Educational Psychology, 27, 51–79. doi:10.1006/ceps.2001.1091

Usher, E. L., & Pajares, F. (2006). Sources of academic and self-regulatory efficacy beliefs of entering middle school students. Contemporary Educational Psychology, 31, 125–141. doi:10.1016/j.cedpsych.2005.03.002

Usher, E. L., & Pajares, F. (2008). Self-efficacy for self-regulated learning: A validation study. Educational and Psychological Measurement, 68, 443–463. doi:10.1177/0013164407308475

Villares, E., Frain, M., Brigman, G., Webb, L., & Peluso, P. (2012). The impact of Student Success Skills on standardized test scores: A meta-analysis. Counseling Outcome Research and Evaluation, 3, 3–16. doi:10.1177/2150137811434041

Wang, M. C., Haertel, G. D., & Walberg, H. J. (1994a). What helps students learn? Educational Leadership, 51(4), 74–79.

Wang, M. C., Haertel, G. D., & Walberg, H. J. (1994b). Educational resilience in inner cities. In M. C. Wang & E. W. Gordon (Eds.), Educational resilience in inner-city America: Challenges and prospects (pp. 45–72). Mahwah, NJ: Erlbaum.

Webb, L., Brigman, G., Carey, J., & Villares, E. (2011). A randomized controlled trial of Student Success Skills: A program to improve academic achievement for all students (Education Research Grant 84.305A). Institute of Education Sciences, U.S. Department of Education. Unpublished.

Weinstein, C. E., Schulte, A. C., & Palmer, D. R. (1987). LASSI: Learning and study strategies inventory. Clearwater, FL: H & H.

What Works Clearinghouse. (2011). Procedures and standards handbook, version 2.1. Washington, DC: Author.

Whiston, S. C. (2002). Response to the past, present, and future of school counseling: Raising some issues. Professional School Counseling, 5, 148–155.

Whiston, S. C. (2011). Vocational counseling and interventions: An exploration of future “big” questions. Journal of Career Assessment, 19, 287–295. doi:10.1177/1069072710395535

Whiston, S. C., & Aricak, O. T. (2008). Development and initial investigation of the School Counseling Program Evaluation Scale. Professional School Counseling, 11, 253–261.

Whiston, S. C., & Quinby, R. F. (2009). Review of school counseling outcome research. Psychology in the Schools, 46, 267–272. doi:10.1002/pits.20372

Whiston, S. C., & Sexton, T. L. (1998). A review of school counseling outcome research: Implications for practice. Journal of Counseling & Development, 76, 412–426. doi:10.1002/j.1556-6676.1998.tb02700.x

Whiston, S. C., Tai, W. L., Rahardja, D., & Eder, K. (2011). School counseling outcome: A meta-analytic examination of interventions. Journal of Counseling & Development, 89, 37–55.

Yildiz, E., Akpinar, E., Tatar, N., & Ergin, O. (2009). Exploratory and confirmatory factor analysis of the metacognition scale for primary school students. Educational Sciences: Theory & Practice, 9, 1591–1604.

Zins, J. E., Weissberg, R. P., Wang, M. C., & Walberg, H. J. (Eds.). (2004). Building academic success on social and emotional learning: What does the research say? New York, NY: Teachers College Press.

Elizabeth Villares is an associate professor at Florida Atlantic University. Kimberly Colvin is an assistant professor at the University at Albany, SUNY. John Carey is a professor at the University of Massachusetts-Amherst. Linda Webb is a senior research associate at Florida State University. Greg Brigman, NCC, is a professor at Florida Atlantic University. Karen Harrington is assistant director at the Ronald H. Fredrickson Center for School Counseling Research and Evaluation at the University of Massachusetts-Amherst. Correspondence can be addressed to Elizabeth Villares, 5353 Parkside Drive, EC 202H, Jupiter, FL 33458, evillare@fau.edu.

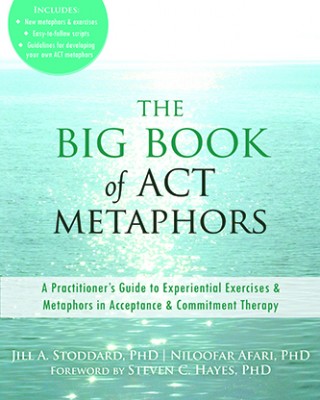

Acceptance and commitment therapy (ACT) has received significant research attention over the past decade and has also been used frequently to treat individuals presenting with a variety of clinical concerns ranging from chronic pain and diabetes management to severe depression and substance abuse. In The Big Book of ACT Metaphors: A Practitioner’s Guide to Experiential Exercises and Metaphors in Acceptance and Commitment Therapy, Jill A. Stoddard and Niloofar Afari provide a comprehensive A–Z resource guide for practitioners, trainees and others in the counseling profession to use when working with clients. Additionally, novel metaphors, new experiential exercises and detailed scripts were collected from the entire ACT community to make this a collaborative endeavor and to provide a “one-stop” shop for all.