Development of a Logic Model to Guide Evaluations of the ASCA National Model for School Counseling Programs

Ian Martin, John Carey

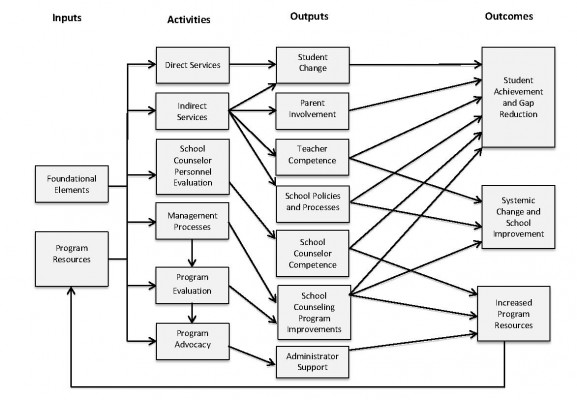

A logic model was developed based on an analysis of the 2012 American School Counselor Association (ASCA) National Model in order to provide direction for program evaluation initiatives. The logic model identified three outcomes (increased student achievement/gap reduction, increased school counseling program resources, and systemic change and school improvement), seven outputs (student change, parent involvement, teacher competence, school policies and processes, competence of the school counselors, improvements in the school counseling program, and administrator support), six major clusters of activities (direct services, indirect services, school counselor personnel evaluation, program management processes, program evaluation processes and program advocacy) and two inputs (foundational elements and program resources). The identification of these logic model components and linkages among these components was used to identify a number of necessary and important evaluation studies of the ASCA National Model.

Keywords: ASCA National Model, school counseling, logic model, program evaluation, evaluation studies

Since its initial publication in 2003, The ASCA National Model: A Framework for School Counseling Programs has had a dramatic impact on the practice of school counseling (American School Counselor Association [ASCA], 2003). Many states have revised their model of school counseling to make it consistent with this model (Martin, Carey, & DeCoster, 2009), and many schools across the country have implemented the ASCA National Model. The ASCA Web site, for example, currently lists over 400 schools from 33 states that have won a Recognized ASCA Model Program (RAMP) award since 2003 as recognition for exemplary implementation of the model (ASCA, 2013).

While the ASCA National Model has had a profound impact on the practice of school counseling, very few studies have been published that evaluate the model itself. Evaluation is necessary to determine if the implementation of the model results in the model’s anticipated benefits and to determine how the model can be improved. The key studies typically cited (see ASCA, 2005) as supporting the effectiveness of the ASCA National Model (e.g., Lapan, Gysbers, & Petroski, 2001; Lapan, Gysbers, & Sun, 1997) were actually conducted before the model was developed and were designed as evaluations of Comprehensive Developmental Guidance, which is an important precursor and component of the ASCA National Model, but not the model itself.

Two recent statewide evaluations of school counseling programs focused on the relationships between the level of implementation of the ASCA National Model and student outcomes. In a statewide evaluation of school counseling programs in Nebraska, Carey, Harrington, Martin, and Hoffman (2012) found that the extent to which a school counseling program had a well-implemented, differentiated delivery system consistent with practices advocated by the ASCA National Model was associated with lower suspension rates, lower discipline incident rates, higher attendance rates, higher math proficiency and higher reading proficiency. These results suggest that model implementation is associated with increased student engagement, fewer disciplinary problems and higher student achievement. In a similar statewide evaluation study in Utah, Carey, Harrington, Martin, and Stevens (2012) found that the extent to which the school counseling program had a programmatic orientation, similar to that advocated in the ASCA National Model, was associated with both higher average ACT scores and a higher number of students taking the ACT. This suggests that model implementation is associated with both increased achievement and a broadening of student interest in college. While these studies suggest that benefits to students are associated with the implementation of the ASCA National Model, additional evaluations are necessary that use stronger (e.g., quasi-experimental and longitudinal) designs and investigate specific components of the model in order to determine their effectiveness or how they can be improved.

There are several possible reasons why the ASCA National Model has not been evaluated extensively. The school counseling field as a whole has struggled with general evaluation issues. For example, questions have been raised regarding the effectiveness of practitioner training in evaluation (Astramovich, Coker, & Hoskins, 2005; Heppner, Kivlighan, & Wampold, 1999; Sexton, Whiston, Bleuer, & Walz, 1997; Trevisan, 2000); practitioners have cited lack of time, evaluation resources and administrative support as major barriers to evaluation (Loesch, 2001; Lusky & Hayes, 2001); and some practitioners have feared that poor evaluation results may negatively impact their program credibility (Isaacs, 2003; Schmidt, 1995). Another contributing factor is that while the importance of evaluation is stressed in the literature, few actual examples of program evaluations and program evaluation results have been published (Astramovich & Coker, 2007; Martin & Carey, 2012; Martin et al., 2009; Trevisan, 2002).

In addition, there are several features of the ASCA National Model that make evaluations difficult. First, the model is complex, containing many components grouped into four interrelated, functional subsystems referred to as the foundation, delivery system, management system and accountability system. Second, ASCA created the National Model by combining elements of existing models that were developed by different individuals and groups. For example, the principal influences of the model (ASCA, 2012) are cited as Gysbers and Henderson (2000), Johnson and Johnson (2001) and Myrick (2003). Furthermore, principles and concepts derived from important movements such as the Transforming School Counseling Initiative (Martin, 2002) and evidence-based school counseling (Dimmitt, Carey, & Hatch, 2007) also were incorporated into the model during its development. While these preexisting models and movements share some common features, they differ in important ways. Elements of these approaches were combined and incorporated into the ASCA National Model without a full integration of their philosophical and theoretical perspectives and principles. Consequently, the ASCA National Model does not reflect a single cohesive approach to program organization and management. Instead, it reflects a collection of presumably effective principles and practices that have been applied in school counseling programs. Third, instruments for measuring important aspects of model implementation are lacking (Clemens, Carey, & Harrington, 2010). Fourth, the theory of action of the ASCA National Model has not been fully explicated, so it is difficult to determine what specific benefits are intended to result from the implementation of specific elements of the model. For example, it is not entirely clear how changing the performance evaluation of counselors is related to the desired benefits of the model.

In this article, the authors present the results of their work in developing a logic model for the ASCA National Model. Logic modeling is a systematic approach to enabling high-quality program evaluation through processes designed to result in pictorial representations of the theory of action of a program (Frechtling, 2007). Logic modeling surfaces and summarizes the explicit and implicit logic of how a program operates to produce its desired benefits and results. By applying logic modeling to an analysis of the ASCA National Model, the authors intended to fully explicate the relationships between structures and activities advocated by the model and their anticipated benefits so that these relationships can be tested in future evaluations of the model.

The purpose of this study, therefore, was to develop a useful logic model that describes the workings of the ASCA National Model in order to promote its evaluation. More specifically, the purpose was to mine the logic elements, program outcomes and implicit (unstated) assumptions about the relationships between program elements and outcomes. In developing this logic model, the authors followed the processes suggested by the W. K. Kellogg Foundation (2004) and Frechtling (2007). Several different frameworks exist for logic models, but the authors elected to use Frechtling’s framework because it focuses specifically on promoting evaluation of an existing program (as opposed to other possible uses such as program planning). This framework identifies the relationships among program inputs, activities, outputs and outcomes. Inputs refer to the resources needed to deliver the program as intended. Activities refer to the actual program components that are expected to be related to a desired outcome. Outputs refer to the immediate products or results of activities that can be observed as evidence that the activity was actually completed. Outcomes refer to the desired benefits of the program that are expected to occur as a consequence of program activities. The authors’ logic model development was guided by four questions:

1. What are the essential desired outcomes of the ASCA National Model?
2. What are the essential activities of the ASCA National Model and how do these activities relate to its outputs?
3. What are the essential outputs of the ASCA National Model and how do these outputs relate to its desired outcomes?
4. What are the essential inputs of the ASCA National Model and how do these inputs relate to its activities?

Methods

All analyses in this study were based on the latest edition of the ASCA National Model (ASCA, 2012). In these analyses, every attempt was made to base inferences on the actual language of the model. In some instances (for example, when it was unclear which outputs were expected to be related to a given activity) the professional literature about the ASCA National Model was consulted.

Because the authors intended to develop a logic model from an existing program blueprint (rather than designing a new program), they began, according to recommended procedures (W. K. Kellogg Foundation, 2004), by first identifying outcomes and then working backward to identify activities, then outputs associated with activities and finally, inputs.

Identification of Outcomes

The authors independently reviewed the ASCA National Model (2012) and identified all elements in the model. The two authors’ lists of elements (e.g., vision statement, annual agreement with school leaders, indirect service delivery and curriculum results reports) were merged to create a common list of elements. The authors then independently created a series of if-then statements for each element of the model that traced the logical connections explicitly stated in the model (or in rare instances, stated in the professional literature about the model) between the element and a program outcome. In this way, both the desired outcomes of the ASCA National Model and the desired logical linkages between elements and outcomes were identified.

During this process, some ASCA National Model elements were included in the same logic sequence because they were causally related to each other. For example, both the vision statement and the mission statement were included in the same logic sequence because a strong vision statement was described as a necessary prerequisite for the development of a strong mission statement. Some ASCA National Model elements also were included in more than one logical sequence when it was clear that two different outcomes were intended to occur related to the same element. For example, it was evident that closing-the-gap reports were intended to result in intervention improvements, leading to better student outcomes and also to apprising key stakeholders of school counseling program results, in order to increase support and resources for the program.
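The if-then chaining described above can be illustrated with a minimal Python sketch. It is not the authors' procedure: the `Step` type and the `terminal_outcome` function are hypothetical names introduced here, and the statement wording is paraphrased from the management system example in Table 1. The sketch only checks that each consequent feeds the next antecedent and reports the outcome on which the sequence terminates.

```python
from typing import List, Tuple

# One if-then statement: (antecedent, consequent). Hypothetical representation.
Step = Tuple[str, str]


def terminal_outcome(sequence: List[Step]) -> str:
    """Return the final consequent after checking that each step feeds the next."""
    if not sequence:
        raise ValueError("empty logic sequence")
    for (_, consequent), (antecedent, _) in zip(sequence, sequence[1:]):
        if consequent != antecedent:
            raise ValueError(
                f"broken chain: {consequent!r} does not lead to {antecedent!r}"
            )
    return sequence[-1][1]


# Paraphrase of the management system sequence shown in Table 1.
management_sequence: List[Step] = [
    ("counselors create annual agreements with the school leader",
     "program goals align with school goals"),
    ("program goals align with school goals",
     "school leaders recognize the value of the program"),
    ("school leaders recognize the value of the program",
     "school leaders commit resources to the program"),
]

print(terminal_outcome(management_sequence))
```

An element that belongs to two sequences, such as the closing-the-gap report, would simply appear as the first antecedent in two separate lists.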

Identification of Activities

Frechtling (2007) noted that the choice of the right amount of complexity in portraying the activities in a logic model is a critically important factor in a model’s utility. If activities are portrayed in their most differentiated form, the model can be too complex to be useful. If activities are portrayed in their most compact form, the model can lack enough detail to guide evaluation. Therefore, in the present study, the authors decided to construct several different logic models with different sets of activities that ranged from including all the previously identified ASCA National Model elements as activities to including only the four sections of the ASCA National Model (i.e., foundation, management system, delivery system and accountability system) as activities. As neither of the two extreme options proved to be feasible, the authors began clustering ASCA National Model elements and developed six activities, each of which represented a cluster of program elements.

Identification of Outputs Related to Activities

Outputs are the observable immediate products or deliverables of the logic model’s inputs and activities (Frechtling, 2007). After the authors identified an appropriate level for representing model activities, they generated the same level of program outputs. They accomplished this by reexamining the logic sequences, clustering the products of identified activities and then creating general output categories from the clustered products. For example, the activity known as direct services contained several ASCA National Model products, such as the curriculum results report, the small-group results report and the closing-the-gap results report (among others), and the resulting output was finally categorized as student change. Ultimately, seven logic model outputs were identified through this process to help describe the outputs created by ASCA National Model activities.

Identifying the Connections Between Outputs and Outcomes

Creating connections between model outputs and outcomes was accomplished by linking the original logic sequences to determine how the ASCA National Model would conceive of outputs as being linked to outcomes. Returning to the above example, the output known as student change, which included such products as results reports, was connected to the outcome known as student achievement and gap reduction in several logic sequences. At the conclusion of this process, each output had straightforward links to one or multiple proposed model outcomes. Not only was this process useful in identifying links between outputs and outcomes, but it also functioned as an opportunity to test the output categories for conceptual clarity.

Identification of Inputs and Connections Between Inputs and Activities

The authors reviewed the ASCA National Model to determine which inputs were necessary to include in the logic model. They identified two essential types of inputs: foundational elements (conceptual underpinnings described in the foundation section of the ASCA National Model) and program resources (described throughout the ASCA National Model). The authors determined that these two types of inputs were necessary for the effective operation of all six activities.

Identifying Other Connections Within the Logic Model

After the inputs, activities, outputs, outcomes and the connections between these levels were mapped, the authors again reviewed the logic sequences and the ASCA National Model to determine if any additional linkages needed to be included in the logic model (see Frechtling, 2007). They evaluated the need for within-level linkages (e.g., between two activities) and feedback loops (i.e., where a subsequent component influences the nature of preceding components). The authors determined that two within-level and one recursive linkage were needed.

Results

Outcomes

A total of 65 logic sequences were identified for the ASCA National Model sections: foundation (n = 7), management system (n = 30), delivery system (n = 7) and accountability system (n = 21). Table 1 contains sample logic sequences.

Table 1

Examples of Logic Sequences Relating ASCA National Model Elements to Outcomes

| National Model Section | Logic Sequence |
| --- | --- |
| Foundation | a. If counselors go through the process of creating a set of shared beliefs, then they will establish a level of mutual understanding. b. If counselors establish a level of mutual understanding, then they will be more successful in developing a shared vision for the program. c. If counselors develop a shared vision for the program, then they can develop an effective vision statement. d. If counselors create a vision statement, then they will have the clarity of purpose that is needed to develop a mission statement. e. If counselors create a mission statement, then the program will be more focused. f. If the program is better focused, counselors will create a set of program goals, which will enable counselors to specify how the attainment of the goals should be measured. g. If counselors specify how the attainment of goals should be measured, then effective program evaluation will be conducted. h. If effective program evaluation is conducted, then the program will be continuously improved. i. If the program will be continuously improved, then improved student achievement will result. |
| Management System | a. If school counselors create annual agreements with the leader in charge of the school, then the goals and activities of the counseling program will be more aligned with the goals of the school. b. If the goals and activities of the counseling program are more aligned with the goals of the school, then school leaders will recognize the value of the school counseling program. c. If school leaders recognize the value of the school counseling program, then they will commit resources to support the program. |
| Delivery System | a. If school counselors engage in indirect services (e.g., consultation and advocacy), then school policies and processes will improve. b. If school policies and processes improve, then teachers will develop more competency, and systemic change and school improvement will occur. |
| Accountability System | a. If counselors complete curriculum results reports, then they will have the information they need to demonstrate the effectiveness of developmental and preventative curricular activities. b. If counselors have the information they need to demonstrate the effectiveness of developmental and preventative curricular activities, then they can communicate their impact to school leaders. c. If school leaders are aware of the impact of developmental and preventative curricular activities, then they will recognize their value. d. If school leaders recognize the value of developmental and preventative curricular activities, then they will commit resources to support them. |

Forty of these logic sequences terminated with an outcome related to increased student achievement or (relatedly) to a reduction in the achievement gap. Twenty-two sequences terminated with an outcome related to an increase in program resources. Only three sequences terminated with an outcome related to systemic change in the school. From this analysis, the authors concluded that the primary desired outcomes of the ASCA National Model are increased student achievement/gap reduction and increased school counseling program resources. They also concluded that systemic change and school improvement is another desired outcome of the ASCA National Model.
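As a quick arithmetic check on the tallies reported above, the following Python sketch confirms that the counts by model section and the counts by terminal outcome both sum to the same 65 sequences and then computes each outcome's share. The counts come from the text; the dictionary and function names are illustrative only.

```python
# Counts of logic sequences as reported in the Results section.
sequences_by_section = {
    "foundation": 7,
    "management system": 30,
    "delivery system": 7,
    "accountability system": 21,
}
sequences_by_outcome = {
    "student achievement/gap reduction": 40,
    "increased program resources": 22,
    "systemic change and school improvement": 3,
}


def shares(counts: dict) -> dict:
    """Return each category's proportion of the total count."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


total = sum(sequences_by_section.values())
# Both breakdowns should describe the same set of sequences.
assert total == sum(sequences_by_outcome.values())

outcome_shares = shares(sequences_by_outcome)
for outcome, share in outcome_shares.items():
    print(f"{outcome}: {sequences_by_outcome[outcome]}/{total} ({share:.1%})")
```

The proportions make the basis for the primary and secondary designation explicit: roughly three in five sequences end in student achievement, about a third end in program resources, and only a small remainder end in systemic change.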

Activities

Based on a clustering of ASCA National Model elements identified previously, six activities were developed for the logic model. These activities included the following: direct services, indirect services, school counselor personnel evaluation, program management processes, program evaluation processes and program advocacy processes. Each of these activities represents a cluster of elements within the ASCA National Model. For example, the activity known as direct services includes the school counseling core curriculum, individual student planning and responsive services. Consequently, the direct services activity represents the spectrum of services that would be delivered to students in an ASCA National Model school counseling program.

Activities Related to Outputs

Based on the clustering of the ASCA National Model products or deliverables around the related logic model activities, seven outputs were identified. These outputs included the following: student change, parent involvement, teacher competence, school policies and processes, school counselor competence, school counseling program improvements, and administrator support. The outputs represent all of the ASCA National Model products generated by model activities and help to collect evidence and determine to what degree an activity was successfully accomplished. In essence, for evaluation purposes, these outputs represent the intermediate outcomes (Dimmitt et al., 2007) of an ASCA National Model program. Activities should result in measurable changes in outputs, which in turn should result in measurable changes in outcomes. For example, the output known as student change reflects student changes such as increased academic motivation, increased problem-solving skills, enhanced emotional regulation and better interpersonal problem-solving skills; these changes lead to the longer-term outcome of student achievement and gap reduction.

Connections Between Outputs and Outcomes

Connecting the seven ASCA National Model outputs to its outcomes strengthens the logic model by identifying the hypothesized relationships between the more immediate changes that result from school counseling program activities (i.e., outputs) and the more distal changes that result from the operation of the program (i.e., outcomes). As described earlier, two primary outcomes (student achievement and gap reduction and increased program resources) and one secondary outcome (systemic change and school improvement) were identified within the ASCA National Model. Three of the seven outputs (student change, parent involvement and administrator support) were connected to only one outcome. Three other outputs (teacher competence, school policies and processes, and school counselor competence) were connected to two outcomes. One output (school counseling program improvements) was connected to all three outcomes. Interpreting these linkages is useful in understanding the implicit theory of change of the ASCA National Model and consequently in designing appropriate evaluation studies. The authors’ logic model, for example, indicates that student changes (related to both direct and indirect services of an ASCA National Model program) are expected to result in measurable increases in student achievement and a reduction in the achievement gap.

It also is helpful to scan backward in the logic model to identify how changes in outcomes are expected to occur. For example, student achievement and gap reduction is linked to six model outputs (student change, parent involvement, teacher competence, school policies and processes, school counselor competence, and school counseling program improvements). Student achievement and gap reduction is multiply determined and is the major focus of the ASCA National Model. Increased program resources are connected to three model outputs (school counselor competence, school counseling program improvements and administrator support). Systemic change and school improvement also can be connected to three outputs (teacher competence, school policies and processes, and school counseling program improvements).
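Scanning backward from outcomes to outputs amounts to inverting the output-to-outcome mapping. The Python sketch below encodes the linkages exactly as listed in the preceding paragraph and derives, for each outcome, the outputs that feed it. The constant and function names are hypothetical and chosen only for this illustration.

```python
ACHIEVEMENT = "student achievement and gap reduction"
RESOURCES = "increased program resources"
SYSTEMIC = "systemic change and school improvement"

# Output -> outcomes, transcribed from the linkages listed in the text.
OUTPUT_TO_OUTCOMES = {
    "student change": {ACHIEVEMENT},
    "parent involvement": {ACHIEVEMENT},
    "teacher competence": {ACHIEVEMENT, SYSTEMIC},
    "school policies and processes": {ACHIEVEMENT, SYSTEMIC},
    "school counselor competence": {ACHIEVEMENT, RESOURCES},
    "school counseling program improvements": {ACHIEVEMENT, RESOURCES, SYSTEMIC},
    "administrator support": {RESOURCES},
}


def outputs_by_outcome(mapping: dict) -> dict:
    """Invert an output -> outcomes mapping into outcome -> outputs."""
    inverted: dict = {}
    for output, outcomes in mapping.items():
        for outcome in outcomes:
            inverted.setdefault(outcome, set()).add(output)
    return inverted


by_outcome = outputs_by_outcome(OUTPUT_TO_OUTCOMES)
for outcome in (ACHIEVEMENT, RESOURCES, SYSTEMIC):
    print(f"{outcome}: {len(by_outcome[outcome])} outputs")

# Outputs grouped by how many outcomes they are connected to.
by_degree: dict = {}
for output, outcomes in OUTPUT_TO_OUTCOMES.items():
    by_degree.setdefault(len(outcomes), []).append(output)
```

Grouping outputs by the number of outcomes they touch gives a simple consistency check on the forward description of the same linkages.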

Inputs and Connections Between Inputs and Activities

Based on an analysis of the ASCA National Model, two inputs were identified for inclusion in the logic model: foundational elements (which include the elements in the ASCA National Model’s foundation section considered important for program planning and operation) and program resources (which include elements essential for effective program implementation such as counselor caseload, counselor expertise, counselor professional development support, counselor time-use and program budget). Both of these inputs were identified as being important in the delivery of all six activities.

Additional Connections Within the Logic Model

Based on a final review of the logical sequences and another review of the ASCA National Model, three additional linkages were added to the authors’ logic model. The first linkage was a unidirectional arrow leading from management processes to program evaluation in the activities column. This arrow was intended to represent the tight connection between management processes and evaluation activities that is evident in the ASCA National Model. Relatedly, a unidirectional arrow leading from the school counseling program evaluation activity to the program advocacy activity was added. This arrow was intended to represent the many instances of the ASCA National Model suggesting that program evaluation activities should be used to generate essential information for program advocacy. The final additional link was a recursive arrow leading from the increased program resources outcome to the program resources input. This linkage was intended to represent the ASCA National Model’s concept that investment of additional resources resulting from successful implementation and operation of an ASCA National Model program will result in even higher levels of program effectiveness and eventually even better outcomes.

The Logic Model

Figure 1 contains the final logic model for the ASCA National Model for School Counseling Programs. Logic models portray the implicit theory of change underlying a program and consequently facilitate the evaluation of the program (Frechtling, 2007). Overall, the theory of change for an ASCA National Model program could be described as follows: If school counselors use the foundational elements of the ASCA National Model and have sufficient program resources, they will be able to develop and implement a comprehensive program characterized by activities related to direct services, indirect services, school counselor personnel evaluation, management processes, program evaluation and (relatedly) program advocacy. If these activities are put in place, several outputs will be observed, including the following: student changes in academic behavior, increased parent involvement, increases in teacher competence in working with students, better school policies and processes, increased competence of the school counselors themselves, demonstrable improvements in the school counseling program, and increased administrator support for the school counseling program. If these outputs occur, then the following outcomes should result: increased student achievement and a related reduction in the achievement gap, notable systemic improvement in the school in which the program is being implemented, and increased program support and resources. If these additional resources are reinvested in the school counseling program, the effectiveness of the program will increase.

Figure 1. Logic Model for ASCA National Model for School Counseling Programs
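For readers who want to work with the logic model programmatically, the following Python sketch represents it as a directed graph. This is an interpretation, not a reproduction of Figure 1: the input-to-activity, output-to-outcome, within-level and recursive links follow the text, while the activity-to-output links are assumptions inferred from the article's examples and sample evaluation questions. All identifiers are hypothetical.

```python
from collections import deque

INPUTS = ["foundational elements", "program resources"]
OUTCOMES = ["student achievement and gap reduction",
            "increased program resources",
            "systemic change and school improvement"]

# Assumed activity -> output links (inferred, not read off Figure 1).
ACTIVITY_TO_OUTPUTS = {
    "direct services": ["student change"],
    "indirect services": ["student change", "parent involvement",
                          "teacher competence", "school policies and processes"],
    "school counselor personnel evaluation": ["school counselor competence"],
    "program management processes": ["school counseling program improvements"],
    "program evaluation processes": ["school counseling program improvements"],
    "program advocacy": ["administrator support"],
}
# Output -> outcome links as listed in the Results section.
OUTPUT_TO_OUTCOMES = {
    "student change": [OUTCOMES[0]],
    "parent involvement": [OUTCOMES[0]],
    "teacher competence": [OUTCOMES[0], OUTCOMES[2]],
    "school policies and processes": [OUTCOMES[0], OUTCOMES[2]],
    "school counselor competence": [OUTCOMES[0], OUTCOMES[1]],
    "school counseling program improvements": OUTCOMES,
    "administrator support": [OUTCOMES[1]],
}
EXTRA_LINKS = [
    ("program management processes", "program evaluation processes"),  # within-level
    ("program evaluation processes", "program advocacy"),              # within-level
    ("increased program resources", "program resources"),              # recursive
]


def build_graph() -> dict:
    """Assemble an adjacency mapping of node -> set of successor nodes."""
    graph: dict = {}

    def link(src: str, dst: str) -> None:
        graph.setdefault(src, set()).add(dst)
        graph.setdefault(dst, set())

    for source in INPUTS:  # both inputs support all six activities
        for activity in ACTIVITY_TO_OUTPUTS:
            link(source, activity)
    for mapping in (ACTIVITY_TO_OUTPUTS, OUTPUT_TO_OUTCOMES):
        for src, targets in mapping.items():
            for dst in targets:
                link(src, dst)
    for src, dst in EXTRA_LINKS:
        link(src, dst)
    return graph


def reachable(graph: dict, start: str) -> set:
    """Breadth-first search: nodes reachable from start via one or more links."""
    seen: set = set()
    queue = deque(graph[start])
    while queue:
        node = queue.popleft()
        if node not in seen:
            seen.add(node)
            queue.extend(graph[node])
    return seen


graph = build_graph()
for source in INPUTS:
    print(source, "reaches all outcomes:", set(OUTCOMES) <= reachable(graph, source))
print("feedback loop present:", "program resources" in reachable(graph, "program resources"))
```

A node that can reach itself signals a feedback loop, which is how the recursive arrow from increased program resources back to the program resources input shows up in this representation.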

Discussion

Logic models can be used for a number of purposes including the following: enhancing communication among program team members, managing the program, documenting how the program is intended to operate and developing an approach to evaluation and related evaluation questions (Frechtling, 2007). The present study was conducted in order to develop a logic model for ASCA National Model programs so that these programs could be more readily evaluated, and based on the results of these evaluations, the ASCA National Model could then be improved.

Evaluations can focus on the question of whether or not a program or components of a program actually result in intended changes. At the most global level, an evaluation can focus on discovering the extent to which the program as a whole achieves its desired outcomes. At a more detailed level, an evaluation can focus on discovering the extent to which the components (i.e., activities) of the program achieve their desired outputs (with the assumption that achievement of the outputs is a necessary precursor to achievement of the outcomes).

In both types of evaluations, it is important to use a design that allows some form of comparison. In the simplest case, it would be possible to compare outputs and outcomes before and after implementation of the ASCA National Model. In more complex cases, it would be possible to compare outputs and outcomes of programs that have implemented the ASCA National Model with programs that have not. In these cases, it is essential to control for the confounding effects of extraneous variables (e.g., the affluence of students in the school) by the use of matching or covariates. If the level of implementation of the ASCA National Model program as a whole can be measured, it is even possible to use multivariate correlation approaches to examine whether the level of implementation of the program is related to desired outcomes while simultaneously controlling statistically for potential confounding variables. These same correlational procedures can be used to examine the relationships between the more discrete activities of the program and their corresponding outputs.
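One simple way to examine a relationship while statistically controlling for a confounding variable is a first-order partial correlation. It is used here only as an illustrative stand-in for the multivariate approaches mentioned above, not as the method of any cited study. For implementation level $x$, outcome $y$ and covariate $z$, the partial correlation is $r_{xy \cdot z} = (r_{xy} - r_{xz} r_{yz}) / \sqrt{(1 - r_{xz}^2)(1 - r_{yz}^2)}$. The Python sketch below implements this formula; the variable names are hypothetical and the eight data points are made-up toy numbers that exist only to exercise the functions.

```python
from math import sqrt
from typing import Sequence


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / sqrt(var_a * var_b)


def partial_correlation(x: Sequence[float], y: Sequence[float],
                        z: Sequence[float]) -> float:
    """Correlation of x and y after removing the linear effect of z from both."""
    r_xy, r_xz, r_yz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# TOY DATA ONLY (not from any study): both variables largely track the covariate.
covariate = [0, 1, 2, 3, 4, 5, 6, 7]        # e.g., a school affluence index
implementation = [1, 0, 3, 2, 5, 4, 7, 6]   # hypothetical implementation score
outcome = [1, 2, 1, 2, 5, 6, 5, 6]          # hypothetical achievement measure

raw = pearson(implementation, outcome)
adjusted = partial_correlation(implementation, outcome, covariate)
print(f"raw r = {raw:.2f}, partial r controlling for covariate = {adjusted:.2f}")
```

In the toy data the raw correlation is strong while the partial correlation is close to zero, which is the pattern one would expect when an extraneous variable drives both implementation and outcome. A real evaluation would need several covariates and a regression framework rather than a single first-order adjustment.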

At the most global level, it is important to evaluate the extent to which the implementation of the ASCA National Model results in the following: increases in student achievement (and associated reductions in the achievement gap), measurable systemic change and school improvements, and increases in resources for the school counseling program. At present, there is some evidence that implementation of the ASCA National Model is related to achievement gains (Carey, Harrington, Martin, & Hoffman, 2012; Carey, Harrington, Martin, & Stevens, 2012). No evaluations to date have examined whether ASCA National Model implementation results in systemic change and school improvement or in an increase in program resources.

It also is important to evaluate the extent to which specific program activities achieve their desired outputs. Table 2 contains a list of sample evaluation questions for each activity. Within these questions, evaluation is focused on whether or not components of the program result in overall benefits. No evaluation study to date has evaluated the impact of ASCA National Model implementation on these factors.

Table 2

Sample Evaluation Questions for ASCA National Model Activities

| Activities | Evaluation Questions |
| --- | --- |
| Direct Services | Does organizing and delivering school counseling direct services in accordance with ASCA National Model principles result in an increase in important aspects of students’ school behavior that are related to academic achievement? |
| Indirect Services | Does organizing and delivering school counseling indirect services in accordance with ASCA National Model principles result in an increase in parent involvement? |
| | Does organizing and delivering school counseling indirect services in accordance with ASCA National Model principles result in an increase in teachers’ abilities to work effectively with students? |
| | Does organizing and delivering school counseling indirect services in accordance with ASCA National Model principles result in improvements in school policies and procedures that support student achievement? |
| School Counselor Personnel Evaluation | Does the implementation of personnel and processes recommended by the ASCA National Model result in increases in the professional competence of school counselors? |
| Management Processes | Does the implementation of the management processes recommended by the ASCA National Model result in demonstrable improvements in the school counseling program? |
| Program Evaluation | Does the implementation of program evaluation processes recommended by the ASCA National Model result in demonstrable improvements in the school counseling program? |
| Program Advocacy | Does the implementation of the program advocacy practices recommended by the ASCA National Model result in increases in administrator support for the program? |

In addition to examining program-related change, it is important to evaluate whether a basic assumption of the ASCA National Model bears out in reality. The major assumption is that school counselors who use the foundational elements of the ASCA National Model (e.g., vision statement, mission statement) and have access to typical levels of program resources can develop and implement all the activities associated with an ASCA National Model program (e.g., direct services, indirect services, school counselor personnel evaluation, management processes, program evaluation and program advocacy). Qualitative evaluations of the relationships between inputs and quality of the activities are necessary to determine what levels of inputs are necessary for full implementation. While full evaluation studies of this type have yet to be undertaken, Martin and Carey (2012) have recently reported the results of a two-state qualitative comparison of how statewide capacity-building activities to promote school counselors’ competence in evaluation were used to promote the widespread implementation of ASCA National Model school counseling programs. More studies of this type that focus on the relationships between a broader range of program inputs and school counselors’ ability to fully implement ASCA National Model program activities are needed.

Limitations and Future Directions

Constructing a logic model retrospectively is inherently challenging and complex. This is especially true when the program for which the logic model is being created was not initially developed with reference to an explicit, coherent theory of action. In the present study, the authors approached the work systematically and are confident that others following similar procedures would generate similar results. With that said, a limitation of this work is that the logic model was created based on the authors’ analyses of the written description of the ASCA National Model (2012) and literature surrounding the ASCA National Model. Engaging individuals who were involved in the development and implementation of the ASCA National Model in dialogue might have resulted in a richer logic model with even more utility in directing evaluation of the ASCA National Model. As a follow-up to the present study, the authors intend to continue this inquiry by asking key individuals involved with the ASCA National Model to evaluate the present logic model and to suggest revisions and extensions. Even given this limitation, the current study has potential immediate implications for improving practice that go beyond its role in providing focus and direction for ASCA National Model evaluation.

A potentially fertile testing ground for the implementation of the logic model is present within the RAMP Award process. As noted earlier, RAMP awards are given to exemplary schools that have successfully implemented the ASCA National Model. Currently, schools provide evidence (data) and create narratives regarding how they have successfully met RAMP criteria. Twelve independent rubrics are scored and totaled to determine whether a school receives a RAMP Award. At least two contributions of the logic model for improving the RAMP process seem feasible. First, practitioners can use the logic model to help construct narratives that better articulate how ASCA National Model activities and outputs relate to model outcomes. Second, the logic model may also help improve the RAMP process by highlighting clearer links between activities, outputs and outcomes. In future revisions of the RAMP process, more attention could be paid to the documentation of benefits achieved by the program in terms of both outputs (i.e., the immediate measurable positive consequences of program activities) and outcomes (i.e., the longer-term positive consequences of program operation). In this vein, the authors hope that the logic model developed in this study will help to improve the RAMP process for both practitioners and RAMP evaluators.

Retrospective logic models map a program as it is. In that sense, they are very useful in directing the evaluation of existing programs. Prospective logic models are used to design new programs. Using logic models in program design (or redesign) has some distinct advantages. “Logic models help identify the factors that will impact your program and enable you to anticipate the data and resources you will need to achieve success” (W. K. Kellogg Foundation, 2004, p. 65). When programs are planned with the use of a logic model, greater opportunities exist to explore foundational theories of change, to explore issues or problems addressed by the program, to surface community needs and assets related to the program, to consider desired program results, to identify influential program factors (e.g., barriers or supports), to consider program strategies (e.g., best practices), and to elucidate program assumptions (e.g., the beliefs behind how and why the strategies will work; W. K. Kellogg Foundation, 2004). The authors hope that logic modeling will be incorporated prospectively into the next revision process of the ASCA National Model. Basing future editions of the ASCA National Model on a logic model that comprehensively describes its theory of action should result in a more elegant ASCA National Model with a clearer articulation between its components and its desired results. Such a model would be easier to articulate, implement and evaluate. The authors hope that the development of a retrospective logic model in the present study will facilitate the prospective use of a logic model in subsequent ASCA National Model revisions. The present logic model provides a map of the current state of the ASCA National Model. It is a good starting point for reconsidering such questions as how the model should operate, whether the outcomes are the right outcomes, whether the activities are sufficient and comprehensive enough to lead to the desired outcomes, and whether the available program resources are sufficient to support implementation of program activities.

Conflict of Interest and Funding Disclosure

The authors reported no conflict of interest or funding contributions for the development of this manuscript.

References

American School Counselor Association. (2003). The ASCA national model: A framework for school counseling programs. Alexandria, VA: Author.

American School Counselor Association. (2005). The ASCA national model: A framework for school counseling programs (2nd ed.). Alexandria, VA: Author.

American School Counselor Association. (2012). The ASCA national model: A framework for school counseling programs (3rd ed.). Alexandria, VA: Author.

American School Counselor Association. (2013). Past RAMP recipients. Retrieved from http://www.ascanationalmodel.org/learn-about-ramp/past-ramp-recipients

Astramovich, R. L., & Coker, J. K. (2007). Program evaluation: The accountability bridge model for counselors. Journal of Counseling & Development, 85, 162–172. doi:10.1002/j.1556-6678.2007.tb00459.x

Astramovich, R. L., Coker, J. K., & Hoskins, W. J. (2005). Training school counselors in program evaluation. Professional School Counseling, 9, 49–54.

Carey, J., Harrington, K., Martin, I., & Hoffman, D. (2012). A statewide evaluation of the outcomes of ASCA National Model school counseling programs in rural and suburban Nebraska high schools. Professional School Counseling, 16, 100–107.

Carey, J. C., Harrington, K., Martin, I., & Stevens, D. (2012). A statewide evaluation of the outcomes of the implementation of ASCA National Model school counseling programs in high schools in Utah. Professional School Counseling, 16, 89–99.

Clemens, E. V., Carey, J. C., & Harrington, K. M. (2010). The school counseling program implementation survey: Initial instrument development and exploratory factor analysis. Professional School Counseling, 14, 125–134.

Dimmitt, C., Carey, J. C., & Hatch, T. A. (2007). Evidence-based school counseling: Making a difference with data-driven practices. New York, NY: Corwin Press.

Frechtling, J. A. (2007). Logic modeling methods in program evaluation. New York, NY: Wiley & Sons.

Gysbers, N. C., & Henderson, P. (2000). Developing and managing your school guidance program (3rd ed.). Alexandria, VA: American Counseling Association.

Heppner, P. P., Kivlighan, D. M., Jr., & Wampold, B. E. (1999). Research design in counseling (2nd ed.). Belmont, CA: Wadsworth.

Isaacs, M. L. (2003). Data-driven decision making: The engine of accountability. Professional School Counseling, 6, 288–295.

Johnson, C. D., & Johnson, S. K. (2001). Results-based student support programs: Leadership academy workbook. San Juan Capistrano, CA: Professional Update.

Lapan, R. T., Gysbers, N. C., & Petroski, G. F. (2001). Helping seventh graders be safe and successful: A statewide study of the impact of comprehensive guidance and counseling programs. Journal of Counseling & Development, 79, 320–330. doi:10.1002/j.1556-6676.2001.tb01977.x

Lapan, R. T., Gysbers, N. C., & Sun, Y. (1997). The impact of more fully implemented guidance programs on the school experiences of high school students: A statewide evaluation study. Journal of Counseling & Development, 75, 292–302. doi:10.1002/j.1556-6676.1997.tb02344.x

Loesch, L. C. (2001). Counseling program evaluation: Inside and outside the box. In D. C. Locke, J. E. Myers, & E. L. Herr (Eds.), The handbook of counseling (pp. 513–525). Thousand Oaks, CA: Sage.

Lusky, M. B., & Hayes, R. L. (2001). Collaborative consultation and program evaluation. Journal of Counseling & Development, 79, 26–38. doi:10.1002/j.1556-6676.2001.tb01940.x

Martin, I., & Carey, J. C. (2012). Evaluation capacity within state-level school counseling programs: A cross-case analysis. Professional School Counseling, 15, 132–143.

Martin, I., Carey, J. C., & DeCoster, K. (2009). A national study of the current status of state school counseling models. Professional School Counseling, 12, 378–386.

Martin, P. J. (2002). Transforming school counseling: A national perspective. Theory Into Practice, 41, 148–153. doi:10.1207/s15430421tip4103_2

Myrick, R. D. (2003). Developmental guidance and counseling: A practical approach (4th ed.). Minneapolis, MN: Educational Media Corporation.

Schmidt, J. J. (1995). Assessing school counseling programs through external interviews. School Counselor, 43, 114–123.

Sexton, T. L., Whiston, S. C., Bleuer, J. C., & Walz, G. R. (1997). Integrating outcome research into counseling practice and training. Alexandria, VA: American Counseling Association.

Trevisan, M. S. (2000). The status of program evaluation expectations in state school counselor certification requirements. American Journal of Evaluation, 21, 81–94. doi:10.1177/109821400002100107

Trevisan, M. S. (2002). Evaluation capacity in K-12 school counseling programs. American Journal of Evaluation, 23, 291–305. doi:10.1016/S1098-2140(02)00207-2

W. K. Kellogg Foundation. (2004). Logic model development guide. Battle Creek, MI: Author.

Ian Martin is an assistant professor at the University of San Diego. John Carey is a professor at the University of Massachusetts, Amherst, and the Director of the Ronald H. Fredrickson Center for School Counseling Outcome Research and Evaluation. Correspondence can be addressed to: Ian Martin, 5998 Alcala Park, San Diego, CA 92110, imartin@sandiego.edu.