Research Identity Development of Counselor Education Doctoral Students: A Grounded Theory

Dodie Limberg, Therese Newton, Kimberly Nelson, Casey A. Barrio Minton, John T. Super, Jonathan Ohrt

We present a grounded theory based on interviews with 11 counselor education doctoral students (CEDS) regarding their research identity development. Findings reflect the process-oriented nature of research identity development and the influence of program design, research content knowledge, experiential learning, and self-efficacy on this process. Based on our findings, we emphasize the importance of mentorship and of faculty conducting their own research as a way to model the research process. Additionally, our theory points to the need for increased funding so that CEDS can be immersed in the experiential learning process, and for research courses to be tailored to include topics specific to counselor education.

Keywords: grounded theory, research identity development, counselor education doctoral students, mentoring, experiential

Counselor educators’ professional identity consists of five primary roles: counseling, teaching, supervision, research, and leadership and advocacy (Council for Accreditation of Counseling and Related Educational Programs [CACREP], 2015). Counselor education doctoral programs are tasked with fostering an understanding of these roles in future counselor educators (CACREP, 2015). Transitions into the counselor educator role have been described as life-altering and associated with increased levels of stress, self-doubt, and uncertainty (Carlson et al., 2006; Dollarhide et al., 2013; Hughes & Kleist, 2005; Protivnak & Foss, 2009); however, little is known about specific processes and activities that assist programs to intentionally cultivate transitions into these identities.

Although distribution of faculty roles varies depending on the type of position and institution, most academic positions require some level of research or scholarly engagement. Still, only 20% of counselor educators are responsible for producing the majority of publications within counseling journals, and 19% of counselor educators have not published in the last 6 years (Lambie et al., 2014). Borders and colleagues (2014) found that the majority of application-based research courses in counselor education doctoral programs (e.g., qualitative methodology, quantitative methodology, sampling procedures) were taught by non-counseling faculty members, while counseling faculty members were more likely to teach conceptual or theoretical research courses. Further, participants reported that non-counseling faculty led application-based courses because there were no counseling faculty members who were well qualified to instruct such courses (Borders et al., 2014).

To assist counselor education doctoral students’ (CEDS) transition into the role of emerging scholar, Carlson et al. (2006) recommended that CEDS become active in scholarship as a supplement to required research coursework. Additionally, departmental culture, mentorship, and advisement have been shown to reduce rates of attrition and increase feelings of competency and confidence in CEDS (Carlson et al., 2006; Dollarhide et al., 2013; Protivnak & Foss, 2009). However, Borders et al. (2014) found that faculty from 38 different CACREP-accredited programs reported that just over half of the CEDS from these programs became engaged in research during their first year, with nearly 8% not becoming involved in research activity until their third year. Although these experiences assist CEDS to develop as doctoral students, it is unclear which of these activities are instrumental in cultivating a sound research identity (RI) of CEDS. Understanding how RI is cultivated throughout doctoral programs may provide ways to enhance research within the counseling profession. Understanding this developmental process will inform methods for improving how counselor educators prepare CEDS for their professional roles.

Research Identity

Research identity is an ambiguous term within the counseling literature, with definitions that broadly conceptualize the construct in terms of beliefs, attitudes, and efficacy related to scholarly research, along with a conceptualization of one’s own overall professional identity (Jorgensen & Duncan, 2015; Lamar & Helm, 2017; Ponterotto & Grieger, 1999; Reisetter et al., 2011). Ponterotto and Grieger (1999) described RI as how one views oneself as a scholar or researcher, noting that research worldview (i.e., the lens through which they view, approach, and manage the process of research) impacts how individuals conceptualize, conduct, and interpret results. This perception and interpretation of research as important to RI is critical to consider, as it is common practice for CEDS to enter doctoral studies with limited research experience. Additionally, many CEDS enter into training with a strong clinical identity (Dollarhide et al., 2013), but coupled with the void of research experience or exposure, CEDS may perceive research as disconnected and separate from counseling practice (Murray, 2009). Furthermore, universities vary in the support (e.g., graduate assistant, start-up funds, course release, internal grants) they provide faculty to conduct research.

The process of cultivating a strong RI may be assisted through wedding science and practice (Gelso et al., 2013) and aligning research instruction with values and theories often used in counseling practice (Reisetter et al., 2011). More specifically, Reisetter and colleagues (2011) found that cultivation of a strong RI was aided when CEDS were able to use traditional counseling skills such as openness, reflexive thinking, and attention to cognitive and affective features while working alongside research “participants” rather than conducting studies on research “subjects.” Counseling research is sometimes considered a practice limited to doctoral training and faculty roles, perhaps perpetuating the perception that counseling research and practice are separate and distinct phenomena (Murray, 2009). Mobley and Wester (2007) found that only 30% of practicing clinicians reported reading and integrating research into their work; therefore, early introduction to research may also aid in diminishing the research–practice gap within the counseling profession. The cultivation of a strong RI may begin through exposure to research and scholarly activity at the master’s level (Gibson et al., 2010). More recently, early introduction to research activity and counseling literature at the master’s level is supported within the 2016 CACREP Standards (2015), specifically the infusion of current literature into counseling courses (Standard 2.E.) and training in research and program evaluation (Standard 2.F.8.). Therefore, we may see a shift in the research–practice gap based on these included standards in years to come.

Jorgensen and Duncan (2015) used grounded theory to better understand how RI develops within master’s-level counseling students (n = 12) and clinicians (n = 5). The manner in which participants viewed research, whether as separate from their counselor identity or as fluidly woven throughout, influenced the development of a strong RI. Further, participants’ views and beliefs about research were directly influenced by external factors such as training program expectations, messages received from faculty and supervisors, and academic course requirements. Beginning the process of RI development during master’s-level training may support more advanced RI development for those who pursue doctoral training.

Through photo elicitation and individual interviews, Lamar and Helm (2017) sought to gain a deeper understanding of CEDS’ RI experiences. Their findings highlighted several facets of the internal processes associated with RI development, including inconsistency in research self-efficacy, integration of RI into existing identities, and finding methods of contributing to the greater good through research. The role of external support during the doctoral program was also a contributing factor to RI development, with multiple participants noting the importance of family and friend support in addition to faculty support. Although this study highlighted many facets of RI development, much of the discussion focused on CEDS’ internal processes, rather than the role of specific experiences within their doctoral programs.

Research Training Environment

Literature is emerging related to specific elements of counselor education doctoral programs that most effectively influence RI. Further, there is limited research examining individual characteristics of CEDS that may support the cultivation of a strong RI. One of the more extensively reviewed theories related to RI cultivation is the belief that the research training environment, specifically the program faculty, holds the most influence and power over the strength of a doctoral student’s RI (Gelso et al., 2013). Gelso et al. (2013) also hypothesized that the research training environment directly affects students’ research attitudes, self-efficacy, and eventual productivity. Additionally, Gelso et al. outlined factors in the research training environment that influence a strong RI, including (a) appropriate and positive faculty modeling of research behaviors and attitudes, (b) positive reinforcement of student scholarly activities, (c) emphasis on research as a social and interpersonal activity, and (d) emphasis on all studies as imperfect and flawed. Emphasis on research as a social and interpersonal activity consistently received the most powerful support in cultivating RI. This element of the research training environment may speak to the positive influence of working on research teams or in mentor and advising relationships (Gelso et al., 2013).

To date, there are limited studies that have addressed the specific doctoral program experiences and personal characteristics of CEDS that may lead to a strong and enduring RI. The purpose of this study was to: (a) gain a better understanding of CEDS’ RI development process during their doctoral program, and (b) identify specific experiences that influenced CEDS’ development as researchers. The research questions guiding the investigation were: 1) How do CEDS understand RI? and 2) How do CEDS develop as researchers during their doctoral program?

Method

We used grounded theory design for our study because of the limited empirical data about how CEDS develop an RI. Grounded theory provides researchers with a framework to generate a theory from the context of a phenomenon and offers a process to develop a model to be used as a theoretical foundation (Charmaz, 2014; Corbin & Strauss, 2008). Prior to starting our investigation, we received IRB approval for this study.

Research Team and Positionality

The core research team consisted of one Black female in the second year of her doctoral program, one White female in the first year of her doctoral program, and one White female in her third year as an assistant professor. A White male in his sixth year as an assistant professor participated as the internal auditor, and a White male in his third year as a clinical assistant professor participated as the external auditor. Both doctoral students had completed two courses that covered qualitative research design, and all three faculty members had experience utilizing grounded theory. Prior to beginning our work together, we discussed our beliefs and experiences related to RI development. All members of the research team were in training to be or were counselor educators and researchers, and we acknowledged this as part of our positionality. We all agreed that we value research as part of our roles as counselor educators, and we discussed our beliefs that the primary purpose of pursuing a doctoral degree is to gain skills as a researcher rather than an advanced counselor. We acknowledged the strengths that our varying levels of professional experiences provided to our work on this project, and we also recognized the power differential within the research team; thus, we added auditors to help ensure trustworthiness. All members of the core research team addressed their biases and judgments regarding participants’ experiences through bracketing and memoing to ensure that participants’ voices were heard with as much objectivity as possible (Hays & Wood, 2011). We recorded our biases and expectations in a meeting prior to data collection. Furthermore, we continued to discuss assumptions and biases in order to maintain awareness of the influence we may have on data analysis (Charmaz, 2014). 
Our assumptions included (a) the influence of length of time in a program, (b) the impact of mentoring, (c) how participants’ research interests would mirror their mentors’, (d) that beginning students may not be able to articulate or identify the difference between professional identity and RI, (e) that CEDS who want to pursue academia may identify more as researchers than in other roles (i.e., teaching, supervision), and (f) that coursework and previous experience would influence RI. Each step of the data analysis process provided us the opportunity to revisit our biases.

Participants and Procedure

Individuals who were currently enrolled in CACREP-accredited counselor education and supervision doctoral programs were eligible for participation in the study. We used purposive sampling (Glesne, 2011) to strategically contact eight doctoral program liaisons at CACREP-accredited doctoral programs via email to identify potential participants. The programs were selected to represent all regions and all levels of Carnegie classification. The liaisons all agreed to forward an email that included the purpose of the study and criteria for participation. A total of 11 CEDS responded to the email, met selection criteria, and participated in the study. We determined that 11 participants was an adequate sample size considering data saturation was reached during the data analysis process (Creswell, 2007). Participants represented eight different CACREP-accredited doctoral programs across six states. At the time of the interviews, three participants were in the first year of their program, five were in their second year, and three were in their third year. To prevent identification of participants, we report demographic data in aggregate form. The sample included eight women and three men who ranged in age from 26–36 years (M = 30.2). Six participants self-identified as White (non-Hispanic), three as multiracial, one as Latinx, and one as another identity not specified. All participants held a master’s degree in counseling; they entered their doctoral programs with 0–5 years of post-master’s clinical experience (M = 1.9). Eight participants indicated a desire to pursue a faculty position, two indicated a desire to pursue academia while also continuing clinical work, and one did not indicate a planned career path. Of those who indicated post-doctoral plans, seven participants expected to pursue a faculty role within a research-focused institution and three indicated a preference for a teaching-focused institution. 
All participants had attended and presented at a state or national conference within the past 3 years, with the number of presentations ranging from three to 44 (M = 11.7). Nine participants had submitted manuscripts to peer-reviewed journals and had at least one manuscript published or in press. Finally, four participants had received grant funding.

Data Collection

We collected data through a demographic questionnaire and semi-structured individual interviews. The demographic questionnaire consisted of nine questions focused on general demographic characteristics (i.e., gender, age, race, and education). Additionally, we asked questions focused on participants’ experiences as researchers (i.e., professional organization affiliations, service, conference presentations, publications, and grant experience). These questions were used to triangulate the data. The semi-structured interviews consisted of eight open-ended questions, which we developed from existing literature and asked in sequential order to promote consistency across participants (Heppner et al., 2016). Examples of questions included: 1) How would you describe your research identity? 2) Identify or talk about things that happened during your doctoral program that helped you think of yourself as a researcher, and 3) Can you talk about any experiences that have created doubts about adopting the identity of a researcher? The two doctoral students on the research team conducted the interviews via phone. Interviews lasted approximately 45–60 minutes and were audio recorded. After all interviews were conducted, a member of the research team transcribed the interviews.

Data Analysis and Trustworthiness

We followed grounded theory data analysis procedures outlined by Corbin and Strauss (2008). Prior to data analysis, we recorded biases, read through all of the data, and discussed the coding process to ensure consistency. We followed three steps of coding: 1) open coding, 2) axial coding, and 3) selective coding. Our first step of data analysis was open coding. We read through the data several times and then started to create tentative labels for chunks of data that summarized what we were reading. We recorded examples of participants’ words and established properties of each code. We then coded the first participant transcript line by line together so that we could check in with one another and compare our open codes. Then we individually coded the remaining participants’ transcripts and came back together as a group to discuss and memo. We developed a master list of 184 open codes.

Next, we moved from inductive to deductive analysis using axial coding to identify relationships among the open codes, which we then grouped into categories. Initially we created a list of 55 axial codes, but after examining the codes further, we made a team decision to collapse them into 19 axial codes that were represented as action-oriented tasks within our theory (see Table 1).

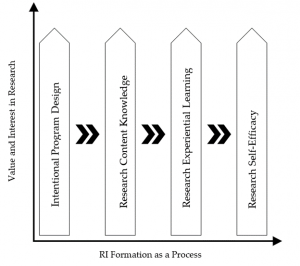

Last, we used selective coding to identify core variables that include all of the data. We found that two factors and four subfactors most accurately represent the data (see Figure 1). The auditor was involved in each step of coding and provided feedback throughout. To enhance trustworthiness and manage bias when collecting and analyzing the data, we applied several strategies: (a) we recorded memos about our ideas about the codes and their relationships (i.e., reflexivity; Morrow, 2005); (b) we used investigator triangulation (i.e., involving multiple investigators to analyze the data independently, then meeting together to discuss; Archibald, 2015); (c) we included an internal and external auditor to evaluate the data (Glesne, 2011; Hays & Wood, 2011); (d) we conducted member checking by sending participants their complete transcript and summary of the findings, including the visual (Creswell & Miller, 2000); and (e) we used multiple sources of data (i.e., survey questions on the demographic form; Creswell, 2007) to triangulate the data.

Table 1

List of Factors and Subfactors

| Factor or Subfactor | Action-Oriented Tasks |
| --- | --- |
| Factor 1: Research Identity Formation as a Process | unable to articulate what research identity is; linking research identity to their research interests or connecting it to their professional experiences; associating research identity with various methodologies; identifying as a researcher; understanding what a research faculty member does |
| Factor 2: Value and Interest in Research | desiring to conduct research; aspiring to maintain a degree of research in their future role; making a connection between research and practice and contributing to the counseling field |
| Subfactor 1: Intentional Program Design | implementing an intentional curriculum; developing a research culture (present and limited); active faculty mentoring and modeling of research |
| Subfactor 2: Research Content Knowledge | understanding research design; building awareness of the logistics of a research study; learning statistics |
| Subfactor 3: Research Experiential Learning | engaging in scholarly activities; conducting independent research; having a graduate research assistantship |
| Subfactor 4: Research Self-Efficacy | receiving external validation; receiving growth-oriented feedback (both negative and positive) |
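
As an illustrative aside, the grouping in Table 1 can be written out as a small data structure to confirm that the listed action-oriented tasks add up to the 19 axial codes reported in the data analysis. The sketch below is not part of the study's procedures, which were carried out qualitatively by the research team. The names `CODEBOOK` and `count_tasks` are hypothetical and were introduced only for this example.

```python
# Illustrative only: Table 1 transcribed as a mapping from each factor or
# subfactor to its action-oriented tasks. `CODEBOOK` and `count_tasks` are
# hypothetical names, not terms used by the study.
CODEBOOK = {
    "Factor 1: Research Identity Formation as a Process": [
        "unable to articulate what research identity is",
        "linking research identity to their research interests or "
        "connecting it to their professional experiences",
        "associating research identity with various methodologies",
        "identifying as a researcher",
        "understanding what a research faculty member does",
    ],
    "Factor 2: Value and Interest in Research": [
        "desiring to conduct research",
        "aspiring to maintain a degree of research in their future role",
        "making a connection between research and practice and "
        "contributing to the counseling field",
    ],
    "Subfactor 1: Intentional Program Design": [
        "implementing an intentional curriculum",
        "developing a research culture (present and limited)",
        "active faculty mentoring and modeling of research",
    ],
    "Subfactor 2: Research Content Knowledge": [
        "understanding research design",
        "building awareness of the logistics of a research study",
        "learning statistics",
    ],
    "Subfactor 3: Research Experiential Learning": [
        "engaging in scholarly activities",
        "conducting independent research",
        "having a graduate research assistantship",
    ],
    "Subfactor 4: Research Self-Efficacy": [
        "receiving external validation",
        "receiving growth-oriented feedback (both negative and positive)",
    ],
}


def count_tasks(codebook):
    """Return the total number of action-oriented tasks across all groups."""
    return sum(len(tasks) for tasks in codebook.values())


if __name__ == "__main__":
    # Print the number of tasks per group, then the overall total.
    for group, tasks in CODEBOOK.items():
        print(f"{group}: {len(tasks)} tasks")
    print("Total action-oriented tasks:", count_tasks(CODEBOOK))  # 19
```

The per-group counts (5, 3, 3, 3, 3, and 2) sum to 19, which matches the number of axial codes retained after the team collapsed the initial list of 55.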

Figure 1

Model of CEDS’ Research Identity Development

Results

Data analysis resulted in a grounded theory composed of two main factors that support the overall process of RI development among CEDS: (a) RI formation as a process and (b) value and interest in research. The first factor is the foundation of our theory because it describes RI development as an ongoing, formative process. The second main factor, value and interest in research, provides an interpersonal approach to RI development in which CEDS begin to embrace “researcher” as a part of who they are.

Our theory of CEDS’ RI development is represented visually in Figure 1. At each axis of the figure, the process of RI is represented longitudinally, and the value and interest in research increases during the process. The four subfactors (i.e., program design, content knowledge, experiential learning, and self-efficacy) contribute to each other but are also independent components that influence the process and the value and interest. Each subfactor is represented as an upward arrow, which supports the idea within our theory that each subfactor increases through the formation process. Each of these subfactors includes components that are specific action-oriented tasks (see Table 1). In order to make our findings relevant and clear, we have organized them by the two research questions that guided our study. To bring our findings to life, we describe the two major factors, four subfactors, and action-oriented tasks using direct quotes from the participants.

Research Question 1: How Do CEDS Describe RI?

Two factors supported this research question: RI formation as a process and value and interest in research.

Factor 1: Research Identity Formation as a Process

Within this factor we identified five action-oriented tasks: (a) being unable to articulate what research identity is, (b) linking research identity to their research interests or connecting it to their professional experiences, (c) associating research identity with various methodologies, (d) identifying as a researcher, and (e) understanding what a research faculty member does. Participants described RI as a formational process. Participant 10 explained, “I still see myself as a student. . . . I still feel like I have a lot to learn and I am in the process of learning, but I have a really good foundation from the practical experiences I have had [in my doctoral program].” When asked how they would describe RI, many were unable to articulate what RI is, asking for clarification or remarking on how they had not been asked to consider this before. Participants often linked RI to their research interests or professional experiences. For example, Participant 11 said, “in clinical practice, I centered around women and women issues. Feminism has come up as a product of other things being in my PhD program, so with my dissertation, my topic is focused on feminism.” Several participants associated RI with various methodologies, including Participant 7: “I would say you know in terms of research methodology and what not, I strongly align with quantitative research. I am a very quantitative-minded person.” Some described this formational process as the transition to identifying as a researcher:

I actually started a research program in my university, inviting or matching master’s students who were interested in certain research with different research projects that were available. So that was another way of me kind of taking on some of that mentorship role in terms of research. (Participant 9)

As their RI emerged, participants understood what research-oriented faculty members do:

Having faculty talk about their research and their process of research in my doc program has been extremely helpful. They talk about not only what they are working on but also the struggles of their process and so they don’t make it look glamorous all the time. (Participant 5)

Factor 2: Value and Interest in Research

All participants talked about the value and increased interest in research as they went through their doctoral program. We identified three action-oriented tasks within this factor: (a) desiring to conduct research, (b) aspiring to maintain a degree of research in their future role, and (c) making a connection between research and practice and contributing to the counseling field. Participant 6 described, “Since I have been in the doctoral program, I have a bigger appreciation for the infinite nature of it (research).” Participants spoke about an increased desire to conduct research; for example, “research is one of the most exciting parts of being a doc student, being able to think of a new project and carrying out the steps and being able to almost discover new knowledge” (Participant 1). All participants aspired to maintain a degree of research in future professional roles after completion of their doctoral programs regardless of whether they obtained a faculty role at a teaching-focused or research-focused university. For example, Participant 4 stated: “Even if I go into a teaching university, I have intentions in continuing very strongly my research and keeping that up. I think it is very important and it is something that I like doing.” Additionally, participants started to make the connection between research and practice and contributing to the counseling profession:

I think research is extremely important because that is what clinicians refer to whenever they have questions about how to treat their clients, and so I definitely rely upon research to understand views in the field and I value it myself so that I am more well-rounded as an educator. (Participant 6)

Research Question 2: How Do CEDS Develop Their RI During Their Doctoral Program?

The following four subfactors provided a description of how CEDS develop RI during their training: intentional program design, research content knowledge, research experiential learning, and research self-efficacy. Each subfactor contains action-oriented tasks.

Subfactor 1: Intentional Program Design

Participants discussed the impact the design of their doctoral program had on their development as researchers. They talked about three action-oriented tasks: (a) implementing an intentional curriculum, (b) developing a research culture (present and limited), and (c) active faculty mentoring and modeling of research. Participants appreciated the intentional design of the curriculum. For example, Participant 5 described how research was highlighted across courses: “In everything that I have had to do in class, there is some form of needing to produce either a proposal or being a good consumer of research . . . it [the value of research] is very apparent in every course.” Additionally, participants talked about the presence or lack of a research culture. For example, Participant 2 described how “at any given time, I was working on two or three projects,” whereas Participant 7 noted that “gaining research experience is not equally or adequately provided to our doctoral students.” Some participants discussed being assigned a mentor, and others talked about cultivating an organic mentoring relationship through graduate assistantships or collaboration with faculty on topics of interest. However, all participants emphasized the importance of faculty mentoring:

I think definitely doing research with the faculty member has helped quite a bit, especially doing the analysis that I am doing right now with the chair of our program has really helped me see research in a new light, in a new way, and I have been grateful for that. (Participant 1)

The importance of modeling of research was described in terms of faculty actually conducting their own research. For example, Participant 11 described how her professor “was conducting a research study and I was helping her input data and write and analyze the data . . . that really helped me grapple with what research looks like and is it something that I can do.” Participant 10 noted how peers conducting research provided a model:

Having that peer experience (a cohort) of getting involved in research and knowing again that we don’t have to have all of the answers and we will figure it out and this is where we all are, that was also really helpful for me and developing more confidence in my ability to do this [research].

Subfactor 2: Research Content Knowledge

All participants discussed the importance of building their research content knowledge. Research content knowledge consisted of three action-oriented tasks: (a) understanding research design, (b) building awareness of the logistics of a research study, and (c) learning statistics. Participant 1 described their experience of understanding research design: “I think one of the most important pieces of my research identity is to be well-rounded and [know] all of the techniques in research designs.” Participants also described developing an awareness of the logistics of a research study, ranging from getting IRB approval to the challenges of data collection. For example, Participant 9 stated:

Seeing what goes into it and seeing the building blocks of the process and also really getting that chance to really think about the study beforehand and making sure you’re getting all of the stuff to protect your clients, to protecting confidentiality, those kind of things. So I think it is kind of understanding more about the research process and also again what goes into it and what makes the research better.

Participants also explained how learning statistics was important; however, a fear of statistics was a barrier to their learning and development. Participant 2 said, “I thought before I had to be a stats wiz to figure anything out, and I realize now that I just have to understand how to use my resources . . . I don’t have to be some stat wiz to actually do [quantitative research].”

Subfactor 3: Research Experiential Learning

Research experiential learning describes actual hands-on experiences participants had related to research. Within our theory, three action-oriented tasks emerged from this subfactor: (a) engaging in scholarly activities, (b) conducting independent research, and (c) having a graduate research assistantship. Engaging in scholarly activities included conducting studies, writing for publication, presenting at conferences, and contributing to or writing a grant proposal. Participant 5 described the importance of being engaged in scholarly activities through their graduate assistantship:

I did have a research graduate assistantship where I worked under some faculty and that definitely exposed me to a higher level of research, and being exposed to that higher level of research allowed me to fine tune how I do research. So that was reassuring in some ways and educational.

Participants also described the importance of leading and conducting their own research via dissertation or other experiences during their doctoral program. For example, Participant 9 said:

Starting research projects that were not involving a faculty member I think has also impacted my work a lot, I learned a lot from that process, you know, having to submit [to] an IRB, having to structure the study and figure out what to do, and so again learning from mistakes, learning from experience, and building self-efficacy.

Subfactor 4: Research Self-Efficacy

The subfactor of research self-efficacy related to the process of participants being confident in identifying themselves and their skills as researchers. We found two action-oriented tasks related to research self-efficacy: (a) receiving external validation and (b) receiving growth-oriented feedback (both negative and positive). Participant 3 described their experience of receiving external validation through sources outside of their doctoral program as helpful in building confidence as a researcher:

I have submitted and have been approved to present at conferences. That has boosted my confidence level to know that they know I am interested in something and I can talk about it . . . that has encouraged me to further pursue research.

Participant 8 explained how receiving growth-oriented feedback on their research supported their own RI development: “People stopped by [my conference presentation] and were interested in what research I was doing. It was cool to talk about it and get some feedback and hear what people think about the research I am doing.”

Discussion

Previous researchers have found RI within counselor education to be an unclear term (Jorgensen & Duncan, 2015; Lamar & Helm, 2017). Although our participants struggled to define RI, they described it as the process of identifying as a researcher, the experiences related to conducting research, and finding value and interest in research. Consistent with previous findings (e.g., Ponterotto & Grieger, 1999), we found that interest in and value of research is an important part of RI. Our qualitative approach therefore provided us a way to operationally define CEDS’ RI as a formative process of identifying as a researcher that is influenced by the program design, level of research content knowledge, experiential learning of research, and research self-efficacy.

Our findings emphasize the importance of counselor education and supervision doctoral program design. Similar to previous researchers (e.g., Borders et al., 2019; Carlson et al., 2006; Dollarhide et al., 2013; Protivnak & Foss, 2009), we found that developing a culture of research that includes mentoring and modeling of research is vital to CEDS’ RI development. Lamar and Helm (2017) also noted the valuable role that faculty mentorship and engagement in research activities, in addition to research content knowledge, have in CEDS’ RI development. Although Lamar and Helm noted that RI development may be enhanced through programmatic intentionality toward mentorship and curriculum design, they continually emphasized the importance of CEDS initiating mentoring relationships and taking accountability for their own RI development. We agree that individual initiative and accountability are valuable and important characteristics for CEDS to possess; however, we also acknowledge that student-driven initiation of such relationships may be challenging in program cultures that do not support RI or do not provide equitable access to mentoring and research opportunities.

Consistent with recommendations by Gelso et al. (2013) and Borders et al. (2014), we found that building a strong foundation of research content knowledge (e.g., statistics, design) is an important component of CEDS’ RI development. Unlike the participants in Borders and colleagues’ study, our participants did not discuss whether who taught their statistics courses made a difference. Rather, participants discussed the value of experiential learning (i.e., participating on a research team) and how conducting research on their own influenced the way they built their content knowledge. This finding is similar to Carlson et al.’s (2006) and supports Borders et al.’s findings regarding the critical importance of early research involvement for CEDS.

Implications for Practice

Our grounded theory provides a clear, action-oriented model that consists of multiple tasks that can be applied in counselor education doctoral programs. Given our findings regarding the importance of experiential learning, we acknowledge the need for increased funding to ensure CEDS are able to focus on their studies and immerse themselves in research experiences. Additionally, design of doctoral programs is crucial to how CEDS develop as researchers. Findings highlight the importance of faculty members at all levels being actively involved in their own scholarship and providing students with opportunities to be a part of it. In addition, we recommend intentional attention to mentorship as an explicit program strategy for promoting a culture of research. Findings also support the importance of coursework for providing students with relevant research content knowledge they can use in research and scholarly activities (e.g., study proposal, conceptual manuscript, conference presentation). We further recommend offering a core of research courses that build upon one another to increase research content knowledge and experiential application. More specifically, this may include a research design course taught by counselor education faculty at the beginning of the program to orient students to the importance of research for practice; such a foundation may help ensure students are primed to apply skills learned in more technical courses. Finally, we suggest that RI development is a process that is never complete; therefore, counselor educators are encouraged to continue to participate in professional development opportunities that are research-focused (e.g., AARC, ACES Inform, Evidence-Based School Counseling Conference, AERA). More importantly, it should be the charge of these organizations to continue to offer high-quality trainings on a variety of research designs and advanced statistics.

Implications for Future Research

Replication or expansion of our study is warranted across settings and developmental levels. Specifically, it would be interesting to examine RI development of pre-tenured and tenured faculty members to see if our model holds or what variations exist between these populations. It may also be beneficial to assess the variance of RI based on the year a student is in the program (e.g., first year vs. third year). Additionally, further quantitative examination of the relationships among the components of our theory would be valuable for understanding these constructs more thoroughly. Furthermore, studies of pedagogical interventions, such as scholarship of teaching and learning focused on counselor education doctoral-level research courses, may be valuable in order to support their merit.

Limitations

Although we engaged in intentional practices to ensure trustworthiness throughout our study, there are limitations that should be considered. Specifically, all of the authors value research and consider it an important aspect of counselor education, and participants self-selected to participate in the research study, which is common practice in most qualitative studies. However, self-selection may present bias in the findings because of the participants’ levels of interest in the topic of research. Additionally, participant selection was based on those who responded to the email and met the criteria; therefore, there was limited selection bias of the participants from the research team. Furthermore, participants were from a variety of programs and their year in their program (e.g., first year) varied; all the intricacies within each program cannot be accounted for, and they may contribute to how the participants view research. Finally, the perceived hierarchy (i.e., faculty and students) on the research team may have influenced the data analysis process, with students adjusting their analysis based on faculty input.

Conclusion

In summary, our study examined CEDS’ experiences that helped build RI during their doctoral program. We interviewed 11 CEDS who were from eight CACREP-accredited doctoral programs in six different states and who varied in the year of their program. Our grounded theory reflects the process-oriented nature of RI development and the influence of program design, research content knowledge, experiential learning, and self-efficacy on this process. Based on our findings, we emphasize the importance of mentorship and faculty conducting their own research as ways to model the research process. Additionally, our theory points to the need for increased funding for CEDS so that they can be immersed in the experiential learning process, and for research courses to be tailored to include topics specific to counselor education and supervision.

Conflict of Interest and Funding Disclosure

The authors reported no conflict of interest or funding contributions for the development of this manuscript.

References

Archibald, M. (2015). Investigator triangulation: A collaborative strategy with potential for mixed methods research. Journal of Mixed Methods Research, 10(3), 228–250.

Association for Assessment and Research in Counseling. (2019). About us. http://aarc-counseling.org/about-us

Borders, L. D., Gonzalez, L. M., Umstead, L. K., & Wester, K. L. (2019). New counselor educators’ scholarly productivity: Supportive and discouraging environments. Counselor Education and Supervision, 58(4), 293–308. https://doi.org/10.1002/ceas.12158

Borders, L. D., Wester, K. L., Fickling, M. J., & Adamson, N. A. (2014). Research training in CACREP-accredited doctoral programs. Counselor Education and Supervision, 53, 145–160. https://doi.org/10.1002/j.1556-6978.2014.00054.x

Carlson, L. A., Portman, T. A. A., & Bartlett, J. R. (2006). Self-management of career development: Intentionality for counselor educators in training. The Journal of Humanistic Counseling, Education and Development, 45(2), 126–137. https://doi.org/10.1002/j.2161-1939.2006.tb00012.x

Charmaz, K. (2014). Constructing grounded theory (2nd ed.). SAGE.

Corbin, J., & Strauss, A. (2008). Basics of qualitative research: Techniques and procedures for developing grounded theory (3rd ed.). SAGE.

Council for Accreditation of Counseling and Related Educational Programs. (2015). 2016 CACREP standards. http://www.cacrep.org/wp-content/uploads/2017/08/2016-Standards-with-citations.pdf

Creswell, J. W. (2007). Qualitative inquiry & research design: Choosing among five approaches (2nd ed.). SAGE.

Creswell, J. W., & Miller, D. L. (2000). Determining validity in qualitative inquiry. Theory Into Practice, 39(3), 124–130. https://doi.org/10.1207/s15430421tip3903_2

Dollarhide, C. T., Gibson, D. M., & Moss, J. M. (2013). Professional identity development of counselor education doctoral students. Counselor Education and Supervision, 52(2), 137–150. https://doi.org/10.1002/j.1556-6978.2013.00034.x

Gelso, C. J., Baumann, E. C., Chui, H. T., & Savela, A. E. (2013). The making of a scientist-psychotherapist: The research training environment and the psychotherapist. Psychotherapy, 50(2), 139–149. https://doi.org/10.1037/a0028257

Gibson, D. M., Dollarhide, C. T., & Moss, J. M. (2010). Professional identity development: A grounded theory of transformational tasks of new counselors. Counselor Education and Supervision, 50(1), 21–38. https://doi.org/10.1002/j.1556-6978.2010.tb00106.x

Glesne, C. (2011). Becoming qualitative researchers: An introduction (4th ed.). Pearson.

Hays, D. G., & Wood, C. (2011). Infusing qualitative traditions in counseling research designs. Journal of Counseling & Development, 89(3), 288–295. https://doi.org/10.1002/j.1556-6678.2011.tb00091.x

Heppner, P. P., Wampold, B. E., Owen, J., Thompson, M. N., & Wang, K. T. (2016). Research design in counseling (4th ed.). Cengage.

Hughes, F. R., & Kleist, D. M. (2005). First-semester experiences of counselor education doctoral students. Counselor Education and Supervision, 45(2), 97–108. https://doi.org/10.1002/j.1556-6978.2005.tb00133.x

Jorgensen, M. F., & Duncan, K. (2015). A grounded theory of master’s-level counselor research identity. Counselor Education and Supervision, 54(1), 17–31. https://doi.org/10.1002/j.1556-6978.2015.00067.x

Lamar, M. R., & Helm, H. M. (2017). Understanding the researcher identity development of counselor education and supervision doctoral students. Counselor Education and Supervision, 56(1), 2–18. https://doi.org/10.1002/ceas.12056

Lambie, G. W., Ascher, D. L., Sivo, S. A., & Hayes, B. G. (2014). Counselor education doctoral program faculty members’ refereed article publications. Journal of Counseling & Development, 92(3), 338–346. https://doi.org/10.1002/j.1556-6676.2014.00161.x

Mobley, K., & Wester, K. L. (2007). Evidence-based practices: Building a bridge between researchers and practitioners. Association for Counselor Education and Supervision.

Morrow, S. L. (2005). Quality and trustworthiness in qualitative research in counseling psychology. Journal of Counseling Psychology, 52(2), 250–260. https://doi.org/10.1037/0022-0167.52.2.250

Murray, C. E. (2009). Diffusion of innovation theory: A bridge for the research-practice gap in counseling. Journal of Counseling & Development, 87(1), 108–116. https://doi.org/10.1002/j.1556-6678.2009.tb00556.x

Ponterotto, J. G., & Grieger, I. (1999). Merging qualitative and quantitative perspectives in a research identity. In M. Kopala & L. A. Suzuki (Eds.), Using qualitative methods in psychology (pp. 49–62). SAGE.

Protivnak, J. J., & Foss, L. L. (2009). An exploration of themes that influence the counselor education doctoral student experience. Counselor Education and Supervision, 48(4), 239–256. https://doi.org/10.1002/j.1556-6978.2009.tb00078.x

Reisetter, M., Korcuska, J. S., Yexley, M., Bonds, D., Nikels, H., & McHenry, W. (2011). Counselor educators and qualitative research: Affirming a research identity. Counselor Education and Supervision, 44(1), 2–16. https://doi.org/10.1002/j.1556-6978.2004.tb01856.x

Dodie Limberg, PhD, is an associate professor at the University of South Carolina. Therese Newton, NCC, is an assistant professor at Augusta University. Kimberly Nelson is an assistant professor at Fort Valley State University. Casey A. Barrio Minton, NCC, is a professor at the University of Tennessee, Knoxville. John T. Super, NCC, LMFT, is a clinical assistant professor at the University of Central Florida. Jonathan Ohrt is an associate professor at the University of South Carolina. Correspondence may be addressed to Dodie Limberg, 265 Wardlaw College Main St., Columbia, SC 29201, dlimberg@sc.edu.