Oct 31, 2023 | Volume 13 - Issue 3

Donghun Lee, Sojeong Nam, Jeongwoon Jeong, GoEun Na, Jungeun Lee

Despite advanced definitions and continued research on counselor burnout, attempts to investigate an expanded structure of counselor burnout remain limited. Using a serial mediation, the current study conducted a path analysis of a hypothesized process model using the five dimensions of the Counselor Burnout Inventory. Our research findings support the hypothesized sequential process model of counselor burnout, confirming full mediating effects of Deterioration in Personal Life, Exhaustion, and Incompetence in a serial order on the relationship between Negative Work Environment and Devaluing Client. Suggestions for future research and practical implications for counselors, supervisors, and directors are discussed.

Keywords: counselor burnout, serial mediation, path analysis, process model, Counselor Burnout Inventory

Burnout has received significant attention in counseling research because of the unique nature of counseling work (Bardhoshi et al., 2019; Bardhoshi & Um, 2021; Fye et al., 2020; J. J. Kim et al., 2018; Maslach & Leiter, 2016). Early studies on burnout focused on defining burnout as a phenomenon. Freudenberger (1975), recognized as one of the pioneers in examining the burnout phenomenon, emphasized a loss of motivation and emotional exhaustion, defining it as “failing, wearing out, or becoming exhausted through excessive demands on energy, strength, or resources” (p. 73). Osborn (2004) placed an emphasis on physical and psychological reactions to job stress, describing burnout as “the process of physical and emotional depletion resulting from conditions at work or, more concisely, prolonged job stress” (p. 319). Maslach and colleagues have attempted to find a broader definition of burnout in their studies (Maslach & Jackson, 1981a, 1981b; Maslach et al., 1997, 2001; Maslach & Leiter, 2016) by conceptualizing three core dimensions of burnout—emotional exhaustion, a sense of reduced personal accomplishment, and depersonalization—as a syndrome of individuals exposed to long-term emotional and interpersonal stressors related to their job.

Many researchers have investigated the burnout phenomenon, particularly among counselors, over several decades (Emerson & Markos, 1996; Evans & Villavisanis, 1997; W. C. McCarthy & Frieze, 1999; Malach-Pines & Yafe-Yanai, 2001). These studies placed additional focus on counselors’ actual performance in working with clients, describing counselor burnout as a state in which counselors experience considerable difficulty functioning properly and providing effective counseling. Kesler (1990) emphasized counselors’ internal psychological processes, defining counselor burnout as a decreased sense of personal accomplishment in which individuals blame themselves for their emotional and physical exhaustion, career fatigue, cynical attitudes toward clients, withdrawal from clients, and chronic depression and/or increased anxiety.

Further efforts have been made to better understand counselor burnout by examining the relationships of the three core dimensions—emotional exhaustion, depersonalization, and reduced personal accomplishment. There is a common argument across burnout studies that emotional exhaustion is the central quality of burnout and the most obvious manifestation of the syndrome (Golembiewski & Munzenrider, 1988; R. T. Lee & Ashforth, 1996; Leiter & Maslach, 1988, 1999; Maslach, 1993, 1998). Researchers have articulated the relationships by positing that emotional exhaustion may first trigger depersonalization and depersonalization can then cause decreased personal accomplishment (Golembiewski & Munzenrider, 1988; R. T. Lee & Ashforth, 1996; Leiter & Maslach, 1988). Slightly different findings have suggested that exhaustion may simultaneously lead to depersonalization and reduced personal accomplishment (R. T. Lee & Ashforth, 1993; Maslach, 1993). Some research has provided distinctive relationships among the three dimensions, indicating that emotional exhaustion may result from increased depersonalization (Golembiewski et al., 1986; Taris et al., 2005) and decreased personal accomplishment (Golembiewski et al., 1986).

In response to the need to establish a broader definition of counselor burnout, S. M. Lee and colleagues (2007) expanded the three-dimension model, previously introduced by Maslach and colleagues (1997), to consider organizational and personal sources of burnout. They added two dimensions—negative work environment and deterioration in personal life—to the three core dimensions of burnout, which advanced the model to a five-dimensional burnout model (S. M. Lee et al., 2007). Using the five dimensions, S. M. Lee and colleagues introduced the Counselor Burnout Inventory (CBI). The CBI is the first scale aimed at measuring professional counselors’ burnout symptoms, integrating the expanded theoretical constructs of burnout with the five dimensions representative of the counseling profession (i.e., Exhaustion, Incompetence, Negative Work Environment, Devaluing Client, and Deterioration in Personal Life). Several studies that have explored the psychometric characteristics of the CBI with diverse counselor populations working in a wide range of settings have demonstrated the solid validity and reliability of the CBI (Bardhoshi et al., 2019; J. Lee et al., 2010; S. M. Lee et al., 2007). For example, Bardhoshi et al. (2019) conducted a meta-analysis of 12 studies that utilized the CBI to examine its psychometric characteristics. Their psychometric synthesis reported robust internal consistency, external validity, and structural validity of the five dimensions of burnout across diverse groups of professional counselors, supporting the CBI’s suitability as a tool for understanding the multidimensional burnout phenomenon.

The Current Study

Despite such advanced definitions and continued research on counselor burnout, attempts to understand the expanded structure of counselor burnout using all five dimensions remain limited. The CBI has demonstrated its fitness for burnout research using the five dimensions, especially for exploring the relationships among the dimensions and understanding their sequential process. Understanding this underlying sequential process will allow counseling professionals to detect burnout-related symptoms earlier and develop prevention and intervention plans accordingly (Gil-Monte et al., 1998; R. T. Lee & Ashforth, 1993; Noh et al., 2013).

Therefore, the current study aimed to evaluate a hypothesized sequential process model of the five dimensions of counselor burnout syndrome using the CBI, which consists of (a) Negative Work Environment, (b) Deterioration in Personal Life, (c) Exhaustion, (d) Incompetence, and (e) Devaluing Client. Our overarching goal was to enrich the literature by providing a foundation on which to develop an integrative theoretical process model of counselor burnout. The following research questions guided the current study:

1) What are the relationships among the five dimensions of counselor burnout measured by the CBI?

2) Is the relation between Negative Work Environment and Devaluing Client mediated by Deterioration in Personal Life, Exhaustion, and Incompetence in a serial order?

Conceptual Framework

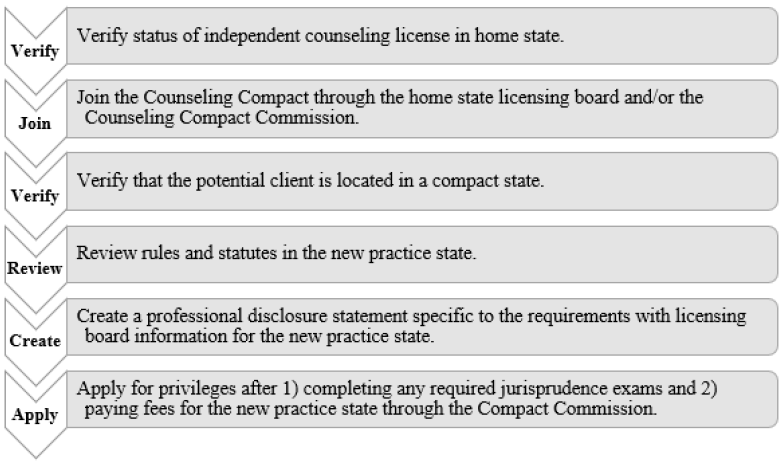

The current study presents a hypothesized sequential process of counselor burnout using the five dimensions of burnout measured by the CBI. Below we provide the rationale for our proposed serial order of counselor burnout, which is: 1) Negative Work Environment, 2) Deterioration in Personal Life, 3) Exhaustion, 4) Incompetence, and 5) Devaluing Client.

The first dimension, Negative Work Environment, reflects counselors’ attitudes and feelings toward their work environments beyond personal and interpersonal issues (S. M. Lee et al., 2007). Previous studies identified organizational factors as an early indicator of burnout among counselors, such as excessive work demands, role ambiguity and conflict, lack of recognition, limited supervisor and colleague support, poor relationships at work, and unfair decision-making (Demerouti et al., 2001; N. Kim & Lambie, 2018; Leiter & Maslach, 1988; Maslach & Leiter, 2008; C. McCarthy et al., 2010; Walsh & Walsh, 2002). These factors were found to be correlated with feelings of burnout and were identified as predictive factors for counselor burnout (N. Kim & Lambie, 2018).

The second dimension of the CBI, Deterioration in Personal Life, recognized as another early indicator of burnout, significantly predicts a wide range of burnout syndromes. This dimension refers to the counselors’ failure to maintain well-being in their personal lives by spending insufficient time with family and friends and having poor boundaries between work and personal life. Deterioration in Personal Life was positively associated with the Exhaustion subscale of the Maslach Burnout Inventory (MBI), accounting for the high number of counselors’ levels of burnout (S. M. Lee et al., 2007). In terms of the sequential order between the two major indicators of counselor burnout, we posited that a negative work environment precedes deterioration in personal life. A negative work environment, with characteristics such as excessive workload, role ambiguity and conflict, and a lack of supervisor and colleague support, may restrict counselors’ personal lives by reducing personal time for their own wellness. When personal time conflicts with an unfavorable work environment and demand, counselors may not easily find a balance between work and life, thus experiencing reduced quality of life and emotional and physical exhaustion as consequences.

Exhaustion, the third dimension of burnout, represents counselors’ physical and emotional depletion that results from excessive workloads and conflictive relationships at work. Exhaustion is the central quality of burnout (Maslach & Leiter, 2008), accompanied by feelings of being drained and emotionally overextended (Maslach, 1998). Many researchers have argued that exhaustion precedes the other dimensions of burnout (Leiter & Maslach, 1999; Maslach et al., 1997, 2001; Maslach & Leiter, 2016); the core idea running through their arguments is that emotional exhaustion occurs first, followed by reduced personal accomplishment and depersonalization.

Therefore, we proposed that the fourth and fifth dimensions—Incompetence and Devaluing Client, respectively—may be consequences that stem from counselors’ emotional and physical exhaustion. In the CBI, Incompetence refers to a counselor’s internal feeling of incompetence while evaluating their effectiveness as a professional counselor, and it represents their belief that they are an incompetent counselor or that they are failing to make a positive change in their clients. Previous studies have provided evidence that emotional exhaustion increases professional incompetence or inefficacy (R. T. Lee & Ashforth, 1993; Park & Lee, 2013; van Dierendonck et al., 2001). R. T. Lee and Ashforth (1993) insisted that emotional exhaustion increases the possibility of reducing one’s personal accomplishment while also directly causing depersonalization. The last dimension, Devaluing Client, is a counselor’s attitude and perception of their relationship with clients. It describes counselors’ callous attitudes toward clients, such as little empathy for, or no concern about, the welfare of their clients. S. M. Lee et al. (2007) reported that the Devaluing Client dimension was positively correlated with the Depersonalization subscale of the MBI, defined as “a negative, cynical, or excessively detached response to other people” (Maslach, 1998, p. 69).

Regarding the sequence between Exhaustion and the two dimensions of consequence, we postulated that Devaluing Client is the final stage of the burnout developmental model, suggesting that exhaustion triggers feelings of incompetence first, which in turn results in counselors’ actual behavior of devaluing clients. Emotional and physical exhaustion is a major direct threat to counselors’ competencies in providing quality services to their clients (R. T. Lee & Ashforth, 1993; Park & Lee, 2013; van Dierendonck et al., 2001), while devaluing clients—treating clients as objects—can be viewed as an emotional coping strategy to deal with the frustration derived from emotional depletion (Gil-Monte et al., 1998). Emotional exhaustion may therefore increase counselors’ feelings of incompetence and then exacerbate their detachment from clients to the point where they become callous toward and no longer interested in their clients (R. T. Lee & Ashforth, 1993; Leiter & Maslach, 1988; Taris et al., 2005). Thus, we posited that devaluing clients is the final crucial manifestation of the phenomenon among counselors who have experienced prolonged burnout, leading many of them to consider leaving the counseling profession.

In summary, we proposed an integrative process model of counselor burnout that comprises the following stages in sequence: 1) Negative Work Environment, 2) Deterioration in Personal Life,

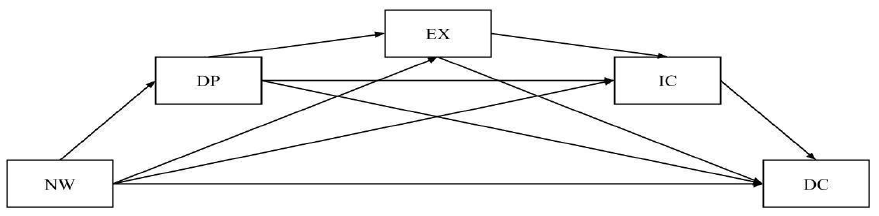

3) Exhaustion, 4) Incompetence, and 5) Devaluing Client. That is, professional counselors who work in a negative work environment for an extended period may start to experience a deterioration in their personal lives, which could lead counselors to emotional and physical exhaustion. Counselors exposed to prolonged exhaustion may also feel a lack of competence in counseling, which may make them prone to becoming callous toward their clients. Figure 1 depicts this serial process model of counselor burnout.

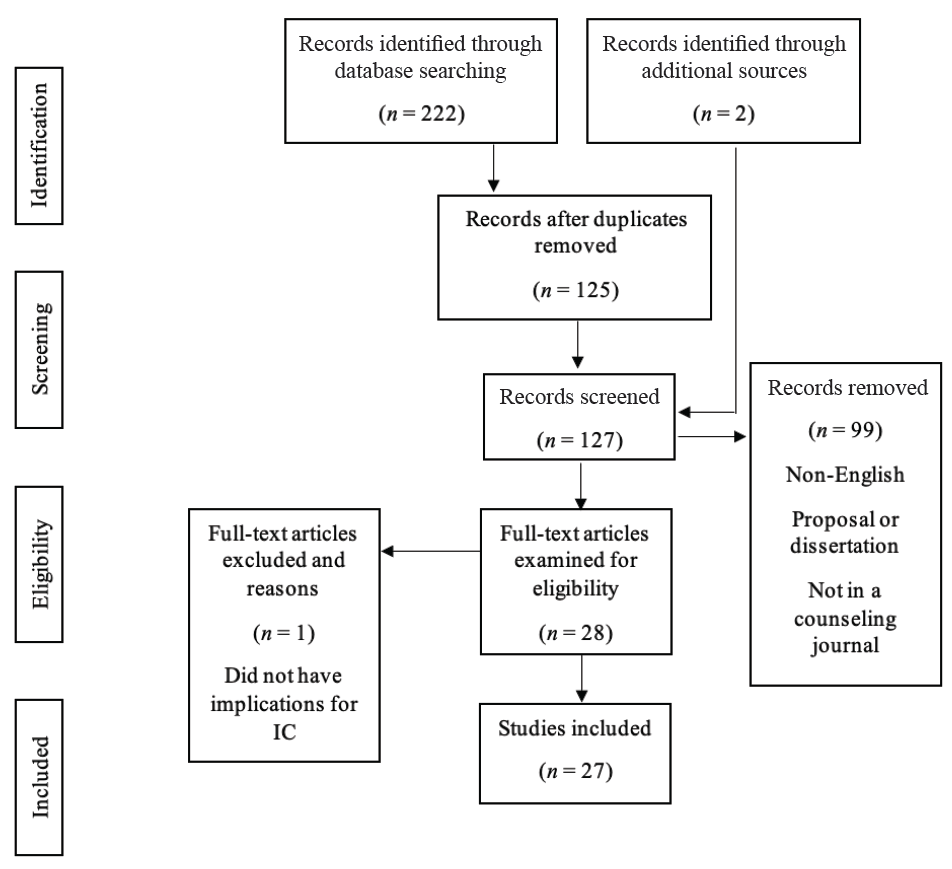

Figure 1

Saturated Model of Counselor Burnout Process

Note. NW = Negative Work Environment, DP = Deterioration in Personal Life, EX = Exhaustion, IC = Incompetence, DC = Devaluing Client.
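The saturated model in Figure 1 can be written as a set of four regression equations, one per endogenous variable. The following is a sketch only: the coefficient symbols \beta_{jk} (path from variable k to variable j, numbered in the hypothesized order) and the residual terms \varepsilon_j are notation assumed here for illustration and do not appear in the source, and intercepts are omitted.

```latex
\begin{aligned}
\mathrm{DP} &= \beta_{21}\,\mathrm{NW} + \varepsilon_{2} \\
\mathrm{EX} &= \beta_{31}\,\mathrm{NW} + \beta_{32}\,\mathrm{DP} + \varepsilon_{3} \\
\mathrm{IC} &= \beta_{41}\,\mathrm{NW} + \beta_{42}\,\mathrm{DP} + \beta_{43}\,\mathrm{EX} + \varepsilon_{4} \\
\mathrm{DC} &= \beta_{51}\,\mathrm{NW} + \beta_{52}\,\mathrm{DP} + \beta_{53}\,\mathrm{EX} + \beta_{54}\,\mathrm{IC} + \varepsilon_{5}
\end{aligned}
```

Under this notation the model contains 10 direct paths, and the hypothesized serial indirect effect of NW on DC through all three mediators is the product \beta_{21}\beta_{32}\beta_{43}\beta_{54}.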

Methods

Procedure

Upon IRB approvals from two different institutions, mass email invitations were sent to professional counselors affiliated with professional counseling associations (i.e., the American Counseling Association [ACA] and the American School Counselor Association [ASCA]). The email invitations contained a description of the study, proof of IRB approval, informed consent, and a link to a self-reported survey. Prior to responding to the web-based survey, participants were asked to review their consent information. Those who agreed to take part in the survey were asked to respond to a demographic questionnaire, followed by the CBI (S. M. Lee et al., 2007). All measures and forms were coded with the participants’ identification numbers to protect their privacy. Email addresses submitted by those who wanted to be entered into a drawing for compensation were stored in a separate database from the survey responses. Of the 428 participants who completed the online survey, we eliminated 69 who ceased their participation or did not fully complete the survey. As a result, 359 were included in the final data analysis.

Participants

A total of 359 professional counselors who were currently practicing and affiliated with one or more professional counseling-related association(s) participated in the current study. In terms of demographics, 281 participants self-identified as female (78.3%) and 76 as male (21.2%), in addition to two participants who did not want to respond (0.5%). With regard to racial/ethnic identity, the majority of the participants self-identified as White (n = 270, 75.2%). Thirty-three participants identified themselves as African American (9.2%), 20 as Asian/Asian American/Pacific Islander (5.6%), 19 as Hispanic (5.3%), and two as Native American (0.6%). The participants were employed in K–12 school (n = 123, 34.3%), outpatient (n = 76, 21.2%), private practice (n = 66, 18.4%), counselor education (n = 37, 10.3%), university counseling (n = 22, 6.1%), medical/psychiatric hospital (n = 11, 3.1%), or other (n = 24, 6.7%) settings. Years of experience ranged from 1 to 47 years, with a mean of 11.4 and standard deviation of 9.6. The participants displayed diverse specialties, including school counseling (42.9%), mental health counseling (42.9%), marriage and family therapy (4.6%), rehabilitation counseling (3.7%), and other disciplines (5.8%).

Measures

Counselor Burnout Inventory (CBI)

The CBI (S. M. Lee et al., 2007), a 20-item self-report inventory used to assess professional counselors’ burnout, consists of five dimensions: Exhaustion (e.g., “I feel exhausted due to my work as a counselor”), Incompetence (e.g., “I am not confident in my counseling skills”), Negative Work Environment (e.g., “I feel frustrated with the system in my workplace”), Devaluing Client (e.g., “I am not interested in my clients and their problems”), and Deterioration in Personal Life (e.g., “My relationships with family members have been negatively impacted by my work as a counselor”). These five dimensions reflect the characteristics of feelings and behaviors that indicate various levels of burnout among counselors. The CBI asks participants to rate the relevance of the statements on a 5-point Likert scale, ranging from 1 (never true) to 5 (always true). S. M. Lee et al. (2007) reported that Cronbach’s alpha coefficients of internal consistency reliability were .80 for the Exhaustion subscale, .83 for the Negative Work Environment subscale, .83 for the Devaluing Client subscale, .81 for the Incompetence subscale, and .84 for the Deterioration in Personal Life subscale. The current study obtained Cronbach’s alpha coefficients of .89 for Exhaustion, .87 for Negative Work Environment, .77 for Devaluing Client, .78 for Incompetence, and .84 for Deterioration in Personal Life.
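For readers less familiar with the reliability coefficient reported above, the following minimal sketch shows how Cronbach's alpha is commonly computed for one subscale. The item responses are hypothetical and invented for illustration, and the assumption that each subscale contains four items (20 items split evenly across five dimensions) is ours; this is not the authors' analysis code.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for one subscale.

    responses: list of respondents, each a list of k item scores.
    """
    k = len(responses[0])
    # Sample variance of each item across respondents.
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of the summed subscale score.
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical 1-5 ratings from five respondents on a four-item subscale.
hypothetical_items = [
    [2, 3, 2, 3],
    [4, 4, 5, 4],
    [1, 2, 1, 2],
    [3, 3, 4, 3],
    [5, 4, 5, 5],
]
alpha = cronbach_alpha(hypothetical_items)
print(f"alpha = {alpha:.2f}")
```

Alpha rises toward 1 as the items within a subscale vary together, which is why it is read as an index of internal consistency.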

Data Analysis

We first explored descriptive statistics of demographic variables to understand the sample characteristics, including gender, race, age, employment status, work setting, specialty, and years of experience. Missing values were imputed using predictive mean matching, which estimates missing values by matching to the observed values in the sample (Rubin, 1986).

To examine relationships among the five dimensions, we conducted a correlation analysis. After identifying the correlation matrix, which confirmed significant relationships among the five dimensions, we tested the hypothesized process model with a serial mediation using a path analysis. The ratio of response-to-parameter was 18:1 for the data, which satisfies the minimum amount of data for conducting a path analysis (Kline, 2015). Because the variables departed from normality according to the Shapiro-Wilk test, skewness and kurtosis values, and plots, we used weighted least squares estimation. We started with a saturated model in which all possible direct paths were identified (Figure 1). Using the trimming method introduced by Meyers and colleagues (2013), we successively removed the least statistically significant path from the previous model until we found a model with all significant paths. To assess model goodness of fit, we evaluated fit indices including the root mean square error of approximation (RMSEA), standardized root mean square residual (SRMR), comparative fit index (CFI), and Tucker-Lewis index (TLI). RMSEA and SRMR values ≤ .06 and CFI and TLI values ≥ .95 indicate excellent fit. All analyses were conducted using R Statistical Software (R Core Team, 2022).

Results

Pairwise Correlation Analysis

Table 1 depicts the results of the pairwise correlation analysis. Significant positive correlations were found among the five dimensions of burnout. The relationship between Deterioration in Personal Life and Exhaustion was the largest, while that between Deterioration in Personal Life and Devaluing Client was the smallest. Also notable was that Devaluing Client, which is the dependent variable in the serial mediation model, displayed weak relationships with Negative Work Environment, Deterioration in Personal Life, and Exhaustion, but a moderate relationship with Incompetence.

Table 1

Descriptive Statistics and Correlation Matrix

| Variable | n | M | SD | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|---|---|
| 1. Negative Work Environment | 359 | 9.91 | 3.75 | — | | | | |
| 2. Deterioration in Personal Life | 359 | 9.46 | 3.52 | .35** | — | | | |
| 3. Exhaustion | 359 | 11.84 | 3.54 | .43** | .58** | — | | |
| 4. Incompetence | 359 | 8.67 | 2.56 | .23** | .35** | .33** | — | |
| 5. Devaluing Client | 359 | 5.52 | 1.94 | .23** | .22** | .27** | .40** | — |

Note. **p < .01.
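The pattern described for Table 1 can be checked directly from the reported coefficients. The short sketch below copies the ten pairwise correlations from Table 1 and looks up the strongest and weakest pairs; the variable abbreviations follow the figure notes, and the dictionary layout is an illustrative choice rather than anything used in the original analysis.

```python
from itertools import combinations

labels = ["NW", "DP", "EX", "IC", "DC"]

# Pairwise correlations copied from the lower triangle of Table 1.
correlations = {
    ("NW", "DP"): 0.35,
    ("NW", "EX"): 0.43,
    ("DP", "EX"): 0.58,
    ("NW", "IC"): 0.23,
    ("DP", "IC"): 0.35,
    ("EX", "IC"): 0.33,
    ("NW", "DC"): 0.23,
    ("DP", "DC"): 0.22,
    ("EX", "DC"): 0.27,
    ("IC", "DC"): 0.40,
}

# Sanity check: every pair of the five dimensions appears exactly once.
assert set(correlations) == set(combinations(labels, 2))

strongest = max(correlations, key=correlations.get)
weakest = min(correlations, key=correlations.get)
print("strongest:", strongest, correlations[strongest])
print("weakest:", weakest, correlations[weakest])
```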

Path Analysis

The first model was identified by drawing all possible direct paths among the variables (Table 2). Model fit indices were not calculated, as this is a saturated model. This model indicated that four of the 10 direct paths were not statistically significant, with p values ranging from .065 to .930.

Table 2

Results of a Path Analysis: Saturated Model

| Endogenous Variables | Exploratory Variables | Estimate | Standardized Estimate | p | SMC |
|---|---|---|---|---|---|
| Deterioration in Personal Life | Negative Work Environment | 0.321 | 0.049 | .000 | 0.117 |
| Exhaustion | Negative Work Environment | 0.248 | 0.043 | .000 | 0.386 |
| | Deterioration in Personal Life | 0.483 | 0.046 | .000 | |
| Incompetence | Negative Work Environment | 0.056 | 0.038 | .145 | 0.146 |
| | Deterioration in Personal Life | 0.153 | 0.044 | .000 | |
| | Exhaustion | 0.125 | 0.045 | .006 | |
| Devaluing Client | Negative Work Environment | 0.050 | 0.029 | .082 | 0.188 |
| | Deterioration in Personal Life | −0.003 | 0.035 | .930 | |
| | Exhaustion | 0.069 | 0.037 | .065 | |
| | Incompetence | 0.254 | 0.037 | .000 | |

Note. SMC = squared multiple correlation.

Among the nonsignificant paths, the one connecting Deterioration in Personal Life to Devaluing Client (p = .930) was the least significant. Following the trimming process, this path was removed to identify modified Model 1. Modified Model 1 included two nonsignificant paths, connecting Negative Work Environment to Incompetence (p = .145) and Negative Work Environment to Devaluing Client (p = .082). The path connecting Negative Work Environment to Incompetence was the less significant of the two, and it was removed from modified Model 1 to fit modified Model 2. Modified Model 2 contained only one path that was not significant (p = .050), which connected Negative Work Environment to Devaluing Client. This path was eliminated to identify modified Model 3. Modified Model 3 finally rendered only significant direct paths with excellent model fit indices, and it was retained as the final model (Table 3). Model fit indices of all the models are described in Table 4.
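A single step of the trimming rule described above can be sketched as follows. The p values are copied from Table 2 (saturated model); the function name and the inclusive .05 cutoff are assumptions made for illustration (the text treats a path reported as p = .050 as nonsignificant). Re-estimating the model after each removal, as the actual procedure requires, is not reproduced here because it needs the raw data.

```python
ALPHA = 0.05  # assumed cutoff; paths with p >= ALPHA are treated as nonsignificant

# (predictor, outcome) -> p value, copied from Table 2.
saturated_p_values = {
    ("NW", "DP"): 0.000,
    ("NW", "EX"): 0.000,
    ("DP", "EX"): 0.000,
    ("NW", "IC"): 0.145,
    ("DP", "IC"): 0.000,
    ("EX", "IC"): 0.006,
    ("NW", "DC"): 0.082,
    ("DP", "DC"): 0.930,
    ("EX", "DC"): 0.065,
    ("IC", "DC"): 0.000,
}


def next_path_to_trim(p_values, alpha=ALPHA):
    """Return the least significant path if it is nonsignificant, else None."""
    path = max(p_values, key=p_values.get)
    return path if p_values[path] >= alpha else None


nonsignificant = sorted(p for p in saturated_p_values.values() if p >= ALPHA)
first_removed = next_path_to_trim(saturated_p_values)
print("nonsignificant p values:", nonsignificant)
print("first path to remove:", first_removed)
```

In a full analysis this step would be repeated on each re-fitted model until the function returns `None`, that is, until every remaining path is significant.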

Table 3

Results of a Path Analysis: Retained Model

| Endogenous Variables | Exploratory Variables | Estimate | Standardized Estimate | p | SMC |
|---|---|---|---|---|---|
| Deterioration in Personal Life | Negative Work Environment | 0.311 | 0.049 | 0.000 | 0.110 |
| Exhaustion | Negative Work Environment | 0.255 | 0.042 | 0.000 | 0.398 |
| | Deterioration in Personal Life | 0.491 | 0.046 | 0.000 | |
| Incompetence | Deterioration in Personal Life | 0.176 | 0.043 | 0.000 | 0.146 |
| | Exhaustion | 0.136 | 0.044 | 0.002 | |
| Devaluing Client | Exhaustion | 0.082 | 0.031 | 0.008 | 0.193 |
| | Incompetence | 0.273 | 0.033 | 0.000 | |

Note. SMC = squared multiple correlation.

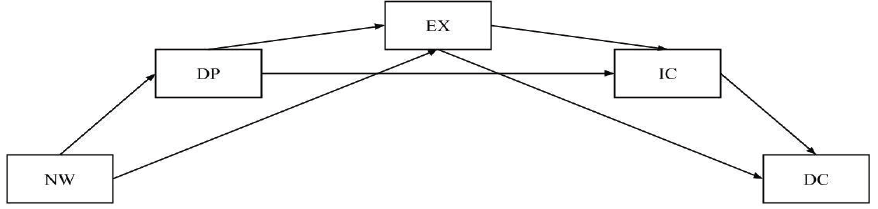

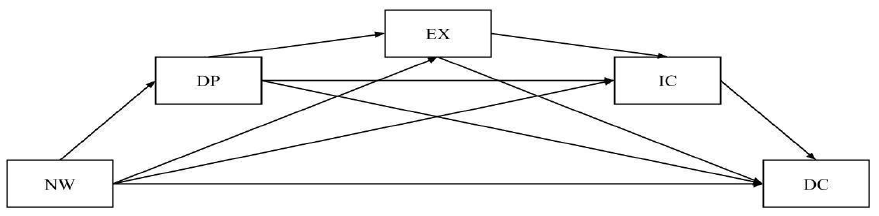

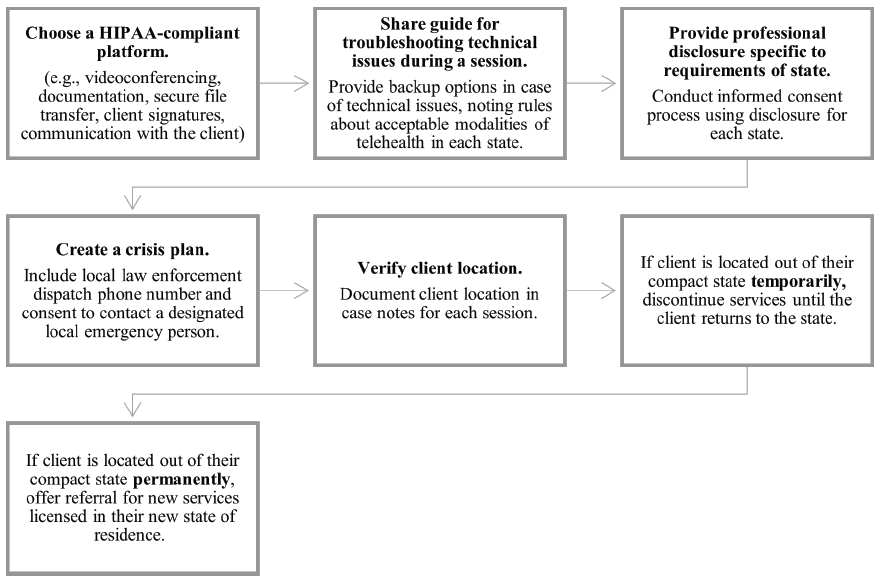

In the retained model (Figure 2), the indirect effect involving all three mediators (Deterioration in Personal Life, Exhaustion, and Incompetence) in a serial chain was found to be significant. The total effect of Negative Work Environment on Devaluing Client was significant (standardized estimate = .269, p = .000), but the direct effect of Negative Work Environment on Devaluing Client was not significant (standardized estimate = .062, p = .402). The ratio of the total indirect effect to the total direct effect was .273, and the proportion of the total effect mediated was .125. Among the indirect effects involving one or two of the three mediators, only the path through Deterioration in Personal Life and Incompetence and the path through Exhaustion and Incompetence were found to be significant (p < .05).

Figure 2

Retained Model of Counselor Burnout Process

Note. NW = Negative Work Environment, DP = Deterioration in Personal Life, EX = Exhaustion, IC = Incompetence, DC = Devaluing Client.
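To make the structure of the retained model concrete, the sketch below enumerates every directed route from Negative Work Environment (NW) to Devaluing Client (DC) and multiplies the path coefficients along each route, which is how a specific indirect effect is usually defined in path analysis. The coefficients are copied from the "Estimate" column of Table 3; the function name is hypothetical. The products are point estimates only and are not the standardized effects or significance tests reported in the text, which require standard errors for the products.

```python
# Path estimates copied from the "Estimate" column of Table 3 (retained model).
paths = {
    ("NW", "DP"): 0.311,
    ("NW", "EX"): 0.255,
    ("DP", "EX"): 0.491,
    ("DP", "IC"): 0.176,
    ("EX", "IC"): 0.136,
    ("EX", "DC"): 0.082,
    ("IC", "DC"): 0.273,
}


def indirect_routes(paths, source, target):
    """List every directed route from source to target with its coefficient product."""
    routes = []

    def walk(node, visited, product):
        if node == target:
            routes.append((tuple(visited), product))
            return
        for (start, end), coefficient in paths.items():
            if start == node and end not in visited:
                walk(end, visited + [end], product * coefficient)

    walk(source, [source], 1.0)
    return routes


routes = indirect_routes(paths, "NW", "DC")
for route, product in routes:
    print(" -> ".join(route), round(product, 4))
```

Because the retained model has no direct NW to DC path, every route listed is indirect; the route passing through DP, EX, and IC in order corresponds to the hypothesized serial chain.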

Table 4

Goodness of Fit Indices

| Model | χ² | df | GFI | AGFI | RMSEA | SRMR | CFI | TLI |
|---|---|---|---|---|---|---|---|---|
| Saturated model | 0.000 | 0.000 | 1.000 | 1.000 | 0.000 | 0.000 | 1.000 | 1.000 |
| Modified model 1 | 0.008 | 1.000 | 1.000 | 1.000 | 0.000 | 0.001 | 1.000 | 1.071 |
| Modified model 2 | 2.168 | 2.000 | 0.997 | 0.981 | 0.015 | 0.019 | 0.999 | 0.994 |
| Modified model 3 (retained) | 6.104 | 3.000 | 0.993 | 0.964 | 0.054 | 0.033 | 0.978 | 0.926 |

Note. AGFI = adjusted goodness of fit index; CFI = comparative fit index; df = degrees of freedom; GFI = goodness of fit index; RMSEA = root mean square error of approximation; SRMR = standardized root mean square residual; TLI = Tucker-Lewis index.
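As a consistency check on Table 4, the RMSEA values can be approximately recovered from the reported χ², degrees of freedom, and sample size. The sketch below uses the common point-estimate formula, RMSEA = sqrt(max(χ² − df, 0) / (df × (N − 1))); whether the authors' software used N or N − 1 in the denominator is not stated in the source, so this is an assumption, although either choice gives the same values at three decimals here.

```python
from math import sqrt

N = 359  # sample size reported in the source


def rmsea(chi_square, df, n):
    """Point estimate of RMSEA from a model chi-square, its df, and sample size."""
    if df == 0:
        return 0.0  # a saturated model has no degrees of freedom to test
    return sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))


# (chi-square, df) pairs copied from Table 4.
models = {
    "Modified model 1": (0.008, 1),
    "Modified model 2": (2.168, 2),
    "Modified model 3 (retained)": (6.104, 3),
}
for name, (chi_square, df) in models.items():
    print(name, round(rmsea(chi_square, df, N), 3))
```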

Discussion

This study examined a process model of counselor burnout in the work context by analyzing our proposed serial mediation model. The findings support the hypothesized sequential process model of counselor burnout by confirming the full mediating effects of Deterioration in Personal Life, Exhaustion, and Incompetence in a serial order on the relationship between Negative Work Environment and Devaluing Client.

The final model describes the mechanism of counselor burnout, pertaining to how it starts and proceeds to the point where clients are affected. In the proposed model, Negative Work Environment, an external factor that counselors are usually not able to control, may affect counselors’ experiences not only at work but also in their personal lives. This is consistent with previous findings in which counselors exposed to unfavorable work environments for an extended period tended to have poor boundaries between work and life and thus failed to maintain well-being in their personal lives (Leiter & Durup, 1996; Puig et al., 2012). Limited ability to find a balance between work and personal life because of negative work-related factors such as an excessive workload may affect the overall quality of life, resulting in emotional and physical depletion among counselors. Accordingly, in the final model, Exhaustion was predicted by Negative Work Environment through Deterioration in Personal Life. Exhaustion then predicted counselors’ feelings of incompetence as hypothesized. Being emotionally and physically exhausted, counselors may experience a reduced sense of self-competence and self-view as professional counselors, which may also influence their actual performance. The final model depicted that the feelings of incompetence predicted Devaluing Client, indicating counselors were unable to emotionally connect with their clients and thus lost interest in their clients’ welfare. Our findings supported previous studies (Maslach & Leiter, 2016; Maslach et al., 2001; Park & Lee, 2013; Taris et al., 2005; van Dierendonck et al., 2001) that found causal relationships between exhaustion and reduced accomplishment, as well as between exhaustion and depersonalization. 
For example, several researchers have argued that exhaustion may decrease professionals’ self-efficacy in providing quality services to their clients and that it may also increase depersonalization, in which they feel indifferent toward their work and the people with whom they work (R. T. Lee & Ashforth, 1993; Maslach & Leiter, 2016; Maslach et al., 2001; Park & Lee, 2013; Taris et al., 2005; van Dierendonck et al., 2001).

Lastly, our serial process model confirmed Devaluing Client to be the final stage of counselor burnout, predicted by Negative Work Environment through Deterioration in Personal Life, Exhaustion, and Incompetence. According to Maslach (1998), devaluing clients (i.e., a cynical attitude and feelings toward work and clients) begins with the action of distancing oneself emotionally and cognitively from one’s work, which can be a way to cope with emotional exhaustion. In fact, emotional detachment from work can be viewed as somewhat functional and even a necessary action to take to maintain effectiveness as a professional (Gil-Monte et al., 1998; Golembiewski et al., 1986). Maintaining proper emotional distance from clients and creating a clear boundary from work may act as an effective coping strategy for dealing with emotional and physical exhaustion. However, emotional detachment from work, despite its virtue as a potential coping strategy and an ethical practice (Gil-Monte et al., 1998), can be aggravated by emotional exhaustion through the perceived lack of competence as professional counselors. Such a detached attitude may lead counselors to become callous toward clients and to contemplate leaving the counseling profession (Cook et al., 2021). As the process model indicates, a negative work environment could significantly affect counselors’ social, emotional, cognitive, and behavioral aspects in order, which may harm their clients and the profession.

In addition to the mechanism of counselor burnout involving all five dimensions with three mediators, the final model also identified effects involving only a subset of the dimensions (Figure 2). Among those, a notable finding concerns the pathway that does not include Exhaustion. According to the model, even without Exhaustion, Negative Work Environment may still lead counselors to devalue clients through deterioration in their personal lives and feelings of incompetence. This finding is noteworthy given that a variety of burnout theories and studies have posited exhaustion as a key concept of burnout, holding that counselors’ emotional and physical depletion leads to feelings of incompetence and to devaluing clients (Leiter & Maslach, 1999; Maslach & Leiter, 2016; Maslach et al., 2001; Park & Lee, 2013). By contrast, our findings suggest that, even when counselors do not necessarily experience emotional and physical exhaustion or are not aware of such experiences, counselors who work in unfavorable work environments that negatively impact their personal lives for a long period (Puig et al., 2012) may feel unable to maintain their effectiveness as professionals (Bandura & National Institute of Mental Health, 1986; Hattie et al., 2004) and thus become callous toward their work and clients (Maslach & Leiter, 2016).

Implications

To the best of our knowledge, the present study is the first attempt to acquire an in-depth understanding of a process model of counselor burnout using the five dimensions of burnout. The present study introduced a model that depicts the sequential process among the five dimensions of counselor burnout, indicating how counselor burnout may develop from their experiences at work as counselors to the point where they may harm their clients. Our research findings suggest important practical implications for counselors, clinical supervisors, and counseling center directors.

Implications for Counselors

The findings first enrich counselors’ understanding of their experiences of burnout and allow them to reflect on how they relate to each stage of the model. Counselors may use this model to examine their environments and experiences and to engage in self-reflection, assessing whether they have any signs of burnout. An experience that may not previously have been considered an indication of a developmental phase of counselor burnout, such as a limited number of coworkers who can provide case consultation (i.e., negative work environment) or reduced quality time with family and friends because of limited free time (i.e., deterioration in personal life), can now be recognized as an early sign of counselor burnout that requires intervention. Counselors who notice such signs may still be in the early phases and have the potential to bounce back if they seek help and receive early intervention. Given the gravity of more severe symptoms of burnout (i.e., incompetence and devaluing clients), counselors may utilize this model to detect the early signs of counselor burnout and develop strategies, such as self-care or help-seeking plans, so they can avoid progressing to the later phases of counselor burnout. Failing to take immediate action and receive appropriate help can lead to a serious problem, resulting not only in a violation of the ethical obligations that apply to all counselors but also in potential harm to clients. Specifically, counselors who exhibit the symptom of devaluing clients but fail to take any action may violate two core values of the counseling profession: “nonmaleficence” and “beneficence” (ACA, 2014). The ACA Code of Ethics (ACA, 2014) states that professional counselors should avoid causing harm to their clients and work for the good of the clients by promoting their mental health and well-being. By devaluing clients as a result of experiencing burnout, counselors would essentially devastate the therapeutic relationship with their clients (Garner, 2006), impair their own ability to promote clients’ well-being (i.e., violation of beneficence), and ultimately cause serious harm to the clients (i.e., violation of nonmaleficence).

Implications for Clinical Supervisors

As counselors take primary responsibility for detecting the symptoms of burnout to maintain their optimal effectiveness, supervisors should continue to support them by guaranteeing adequate supervision and continuing education that provides an opportunity to discuss burnout experiences. Supervisors may set aside time during supervision sessions for genuine discussions to help counselors better address their burnout and encourage them to regularly use the sequential model of the current study to assess their experience pertaining to the five dimensions. Continuing education may focus on the early warning signs of burnout, which in the current study were negative work environment and deterioration in personal life, so that supervisees can take immediate action when detecting the signs of burnout. Encouraging counselors to monitor sudden changes and stressors at work and in their personal lives, and to maintain a balance between the two, could help them seek support from supervisors and professional counseling. Relatedly, when counselors show the cognitive and behavioral patterns of devaluing clients, which is the last element in the sequential process of counselor burnout, supervisors should take immediate action to protect both clients and counselors (ACA, 2014; Dang & Sangganjanavanich, 2015) because it may signal more serious levels of burnout. Therefore, supervisors need to maintain an open and inviting atmosphere, not only to allow conversations when supervisees feel less empathetic toward their clients or disinterested in their clients’ lives, but also to initiate further discussions for creating intervention strategies that are effective at mitigating impairment caused by burnout (Merriman, 2015). Supervisors should be mindful that some counselors may be reluctant to discuss their burnout symptoms with their supervisors because of fear of a negative evaluation. Having a conversation regarding their burnout in a more confidential relationship, such as counseling, would be more effective and safer for the counselors to evaluate their impairment accurately and take actions as necessary.

Implications for Counseling Center Directors

According to our findings, a negative work environment can have tremendous negative impacts on counselors’ overall competencies without necessarily passing through the stage of emotional and physical exhaustion. This finding stresses the role of directors of counseling centers in creating a positive work environment for counselors. First, the directors may periodically examine counselors’ perceptions of their work environment to determine whether they feel frustrated with the working system or perceive any unfair treatment (e.g., excessive workload, limited resources, unfair decision-making). For instance, they could hold a monthly meeting to listen to counselors’ difficulties and discuss improvement opportunities in the system. Paying attention to counselors’ difficulties and feedback can be critical to not only making system improvements but also building healthy relationships among members of the environment. The directors may take a careful look at their counselors’ caseloads and maintain a reasonable counselor-to-client ratio to prevent burnout and create a working environment that allows for the best services for their clients.

Second, counseling center directors can prevent or reduce counselors’ burnout by providing professional development opportunities. Counselors have experienced rapid changes in terms of counseling modalities and strategies and may feel inadequately prepared to meet the unique needs of their clients, which can result in counselor burnout. Therefore, it is beneficial for counselors to engage in professional development activities, such as workshops, continuing education, and conferences, to expand their knowledge and skills. These types of opportunities can reduce counselors’ feelings of incompetence and prevent them from progressing to the last phase (devaluing clients), even if the counselors are in the later phases of burnout.

Limitations and Future Directions

The current study provides an in-depth understanding of the expanded developmental model of counselor burnout and suggests significant implications for the counseling profession. Nevertheless, there are some limitations in the current study. First, although our research participants represented the overall characteristics of the counseling population fairly, future research should include counselors from diverse backgrounds with regard to demographics, work settings, or specialties. Increased diversity could help us understand the unique burnout phenomenon among counselor populations working in various settings and with diverse clients.

Second, this study examined the sequential model of counselor burnout within the structure of the five subfactors of the CBI (S. M. Lee et al., 2007), including Negative Work Environment, Deterioration in Personal Life, Exhaustion, Incompetence, and Devaluing Client. Further investigation should involve external variables to explore what may contribute to the development of counselor burnout and how it may affect the counseling process and outcomes.
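For readers less familiar with serial mediation, the sequential structure described above can be written in a generic textbook form. The following is only a sketch in standard notation, not the exact specification estimated in this study; the coefficient labels are assumed for illustration. Let $X$ denote Negative Work Environment, $M_1$ Deterioration in Personal Life, $M_2$ Exhaustion, $M_3$ Incompetence, and $Y$ Devaluing Client.

$$
\begin{aligned}
M_1 &= i_1 + a_1 X + e_1 \\
M_2 &= i_2 + a_2 X + d_{21} M_1 + e_2 \\
M_3 &= i_3 + a_3 X + d_{31} M_1 + d_{32} M_2 + e_3 \\
Y &= i_Y + c' X + b_1 M_1 + b_2 M_2 + b_3 M_3 + e_Y
\end{aligned}
$$

Under this notation, the serial indirect effect running through all three mediators in order is the product $a_1 \, d_{21} \, d_{32} \, b_3$, and full mediation corresponds to a direct effect $c'$ that is not statistically distinguishable from zero.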

Lastly, a longitudinal study is necessary to extend the understanding of the sequential development model of counselor burnout, given that time may be a critical factor that influences burnout among professionals. Longitudinal findings would help counseling professionals detect the advancement of burnout and take immediate action to initiate prevention and intervention plans.

Conclusion

Professional counselors who work in a negative work environment for an extended period may start to experience a deterioration in their personal lives, which could lead counselors to emotional and physical exhaustion. Counselors exposed to prolonged exhaustion may also feel a lack of competence in counseling, which may make them prone to becoming callous toward their clients. To the best of our knowledge, the present study is the first attempt to acquire an expanded understanding of a process model of counselor burnout using the five dimensions of burnout. The research findings validated the aforementioned hypothesized process model of counselor burnout, suggesting how counselor burnout may develop from their experiences at work to the point where they may harm their clients. Counselors may utilize this model to detect the early signs of counselor burnout and to develop strategies, such as self-care or help-seeking plans, so they can avoid progressing to the later phases of counselor burnout. Failing to take immediate action and receive appropriate help can lead to a serious problem, resulting in not only violating ethical obligations given to all counselors but also potentially harming clients. Supervisors and counseling center directors may set aside time for genuine discussions to help counselors better address their burnout and encourage them to regularly adopt the sequential model of the current study to assess their experience pertaining to the five dimensions.

Conflict of Interest and Funding Disclosure

The authors reported no conflict of interest or funding contributions for the development of this manuscript.

References

American Counseling Association. (2014). ACA code of ethics. https://www.counseling.org/resources/aca-code-of-ethics.pdf

Bandura, A., & National Institute of Mental Health. (1986). Social foundations of thought and action: A social cognitive theory. Prentice Hall.

Bardhoshi, G., Erford, B. T., & Jang, H. (2019). Psychometric synthesis of the Counselor Burnout Inventory. Journal of Counseling & Development, 97(2), 195–208. https://doi.org/10.1002/jcad.12250

Bardhoshi, G., & Um, B. (2021). The effects of job demands and resources on school counselor burnout: Self-efficacy as a mediator. Journal of Counseling & Development, 99(3), 289–301. https://doi.org/10.1002/jcad.12375

Cook, R. M., Fye, H. J., Jones, J. L., & Baltrinic, E. R. (2021). Self-reported symptoms of burnout in novice professional counselors: A content analysis. The Professional Counselor, 11(1), 31–45. https://doi.org/10.15241/rmc.11.1.31

Dang, Y., & Sangganjanavanich, V. F. (2015). Promoting counselor professional and personal well-being through advocacy. Journal of Counselor Leadership and Advocacy, 2(1), 1–13. https://doi.org/10.1080/2326716X.2015.1007179

Demerouti, E., Bakker, A. B., Nachreiner, F., & Schaufeli, W. B. (2001). The job demands-resources model of burnout. Journal of Applied Psychology, 86(3), 499–512. https://doi.org/10.1037/0021-9010.86.3.499

Emerson, S., & Markos, P. A. (1996). Signs and symptoms of the impaired counselor. The Journal of Humanistic Education and Development, 34(3), 108–117. https://doi.org/10.1002/j.2164-4683.1996.tb00335.x

Evans, T. D., & Villavisanis, R. (1997). Encouragement exchange: Avoiding therapist burnout. The Family Journal: Counseling and Therapy for Couples and Families, 5(4), 342–345. https://doi.org/10.1177/1066480797054013

Freudenberger, H. J. (1975). The staff burn-out syndrome in alternative institutions. Psychotherapy: Theory, Research & Practice, 12(1), 73–82. https://doi.org/10.1037/h0086411

Fye, H. J., Cook, R. M., Baltrinic, E. R., & Baylin, A. (2020). Examining individual and organizational factors of school counselor burnout. The Professional Counselor, 10(2), 235–250. https://doi.org/10.15241/hjf.10.2.235

Garner, B. R. (2006). The impact of counselor burnout on therapeutic relationships: A multilevel analytic approach [Doctoral dissertation, Texas Christian University]. ProQuest Dissertations Publishing.

Gil-Monte, P. R., Peiro, J. M., & Valcárcel, P. (1998). A model of burnout process development: An alternative from appraisal models of stress. Comportamento Organization E Gestao, 4(1), 165–179. https://www.researchgate.net/publication/263043320

Golembiewski, R. T., & Munzenrider, R. F. (1988). Phases of burnout: Developments in concepts and applications. Praeger.

Golembiewski, R. T., Munzenrider, R. F., & Stevenson, J. G. (1986). Stress in organizations: Toward a phase model of burnout. Praeger.

Hattie, J. A., Myers, J. E., & Sweeney, T. J. (2004). A factor structure of wellness: Theory, assessment, analysis, and practice. Journal of Counseling & Development, 82(3), 354–364. https://doi.org/10.1002/j.1556-6678.2004.tb00321.x

Kesler, K. D. (1990). Burnout: A multimodal approach to assessment and resolution. Elementary School Guidance and Counseling, 24(4), 303–311.

Kim, J. J., Brookman-Frazee, L., Gellatly, R., Stadnick, N., Barnett, M. L., & Lau, A. S. (2018). Predictors of burnout among community therapists in the sustainment phase of a system-driven implementation of multiple evidence-based practices in children’s mental health. Professional Psychology: Research and Practice, 49(2), 132–141. https://doi.org/10.1037/pro0000182

Kim, N., & Lambie, G. W. (2018). Burnout and implications for professional school counselors. The Professional Counselor, 8(3), 277–294. https://doi.org/10.15241/nk.8.3.277

Kline, R. B. (2015). Principles and practice of structural equation modeling (4th ed.). Guilford.

Lee, J., Wallace, S., Puig, A., Choi, B. Y., Nam, S. K., & Lee, S. M. (2010). Factor structure of the Counselor Burnout Inventory in a sample of sexual offender and sexual abuse therapists. Measurement and Evaluation in Counseling and Development, 43(1), 16–30. https://doi.org/10.1177/0748175610362251

Lee, R. T., & Ashforth, B. E. (1993). A longitudinal study of burnout among supervisors and managers: Comparisons between the Leiter and Maslach (1988) and Golembiewski et al. (1986) models. Organizational Behavior and Human Decision Processes, 54(3), 369–398. https://doi.org/10.1006/obhd.1993.1016

Lee, R. T., & Ashforth, B. E. (1996). A meta-analytic examination of the correlates of the three dimensions of job burnout. Journal of Applied Psychology, 81(2), 123–133. https://doi.org/10.1037/0021-9010.81.2.123

Lee, S. M., Baker, C. R., Cho, S. H., Heckathorn, D. E., Holland, M. W., Newgent, R. A., Ogle, N. T., Powell, M. L., Quinn, J. J., Wallace, S. L., & Yu, K. (2007). Development and initial psychometrics of the Counselor Burnout Inventory. Measurement and Evaluation in Counseling and Development, 40(3), 142–154. https://doi.org/10.1080/07481756.2007.11909811

Leiter, M. P., & Durup, M. J. (1996). Work, home, and in-between: A longitudinal study of spillover. The Journal of Applied Behavioral Science, 32(1), 29–47. https://doi.org/10.1177/0021886396321002

Leiter, M. P., & Maslach, C. (1988). The impact of interpersonal environment on burnout and organizational commitment. Journal of Organizational Behavior, 9(4), 297–308. https://doi.org/10.1002/job.4030090402

Leiter, M. P., & Maslach, C. (1999). Six areas of worklife: A model of the organizational context of burnout. Journal of Health and Human Services Administration, 21(4), 472–489. https://www.researchgate.net/publication/12693291

Malach-Pines, A., & Yafe-Yanai, O. (2001). Unconscious determinants of career choice and burnout: Theoretical model and counseling strategy. Journal of Employment Counseling, 38(4), 170–184. https://doi.org/10.1002/j.2161-1920.2001.tb00499.x

Maslach, C. (1993). Burnout: A multidimensional perspective. In W. B. Schaufeli, C. Maslach, & T. Marek (Eds.), Professional burnout: Recent developments in theory and research (pp. 19–32). Taylor and Francis. https://www.researchgate.net/publication/263847970

Maslach, C. (1998). A multidimensional theory of burnout. In C. L. Cooper (Ed.), Theories of organizational stress (pp. 68–85). Oxford University Press. https://www.researchgate.net/publication/280939428

Maslach, C., & Jackson, S. E. (1981a). The Maslach Burnout Inventory. Consulting Psychologists Press.

Maslach, C., & Jackson, S. E. (1981b). The measurement of experienced burnout. Journal of Organizational Behavior, 2(2), 99–113. https://doi.org/10.1002/job.4030020205

Maslach, C., Jackson, S. E., & Leiter, M. P. (2018). The Maslach Burnout Inventory manual (4th ed.). Mind Garden. https://www.mindgarden.com/maslach-burnout-inventory-mbi/685-mbi-manual.html

Maslach, C., & Leiter, M. P. (2008). Early predictors of job burnout and engagement. Journal of Applied Psychology, 93(3), 498–512. https://doi.org/10.1037/0021-9010.93.3.498

Maslach, C., & Leiter, M. P. (2016). Understanding the burnout experience: Recent research and its implications for psychiatry. World Psychiatry, 15(2), 103–111. https://doi.org/10.1002/wps.20311

Maslach, C., Schaufeli, W. B., & Leiter, M. P. (2001). Job burnout. Annual Review of Psychology, 52, 397–422. https://doi.org/10.1146/annurev.psych.52.1.397

McCarthy, C., Van Horn Kerne, V., Calfa, N. A., Lambert, R. G., & Guzmán, M. (2010). An exploration of school counselors’ demands and resources: Relationship to stress, biographic, and caseload characteristics. Professional School Counseling, 13(3), 146–158. https://doi.org/10.1177/2156759X1001300302

McCarthy, W. C., & Frieze, I. H. (1999). Negative aspects of therapy: Client perceptions of therapists’ social influence, burnout, and quality of care. Journal of Social Issues, 55(1), 33–50. https://doi.org/10.1111/0022-4537.00103

Merriman, J. (2015). Enhancing counselor supervision through compassion fatigue education. Journal of Counseling & Development, 93(3), 370–378. https://doi.org/10.1002/jcad.12035

Meyers, L. S., Gamst, G. C., & Guarino, A. J. (2013). Performing data analysis using IBM SPSS. Wiley.

Noh, H., Shin, H., & Lee, S. M. (2013). Developmental process of academic burnout among Korean middle school students. Learning and Individual Differences, 28, 82–89. https://doi.org/10.1016/j.lindif.2013.09.014

Osborn, C. J. (2004). Seven salutary suggestions for counselor stamina. Journal of Counseling & Development, 82(3), 319–328. https://doi.org/10.1002/j.1556-6678.2004.tb00317.x

Park, Y. M., & Lee, S. M. (2013). A longitudinal analysis of burnout in middle and high school Korean teachers. Stress and Health, 29(5), 427–431. https://doi.org/10.1002/smi.2477

Puig, A., Baggs, A., Mixon, K., Park, Y. M., Kim, B. Y., & Lee, S. M. (2012). Relationship between job burnout and personal wellness in mental health professionals. Journal of Employment Counseling, 49(3), 98–109. https://doi.org/10.1002/j.2161-1920.2012.00010.x

R Core Team. (2022). R: A language and environment for statistical computing. R Foundation for Statistical Computing.

Rubin, D. B. (1986). Statistical matching using file concatenation with adjusted weights and multiple imputations. Journal of Business & Economic Statistics, 4(1), 87–94. https://doi.org/10.2307/1391390

Taris, T. W., Le Blanc, P. M., Schaufeli, W. B., & Schreurs, P. J. G. (2005). Are there causal relationships between the dimensions of the Maslach Burnout Inventory? A review and two longitudinal tests. Work & Stress, 19(3), 238–255. https://doi.org/10.1080/02678370500270453

van Dierendonck, D., Schaufeli, W. B., & Buunk, B. P. (2001). Burnout and inequity among human service professionals: A longitudinal study. Journal of Occupational Health Psychology, 6(1), 43–52. https://doi.org/10.1037/1076-8998.6.1.43

Walsh, B., & Walsh, S. (2002). Caseload factors and the psychological well-being of community mental health staff. Journal of Mental Health, 11(1), 67–78. https://doi.org/10.1080/096382301200041470

Donghun Lee, PhD, NCC, is an assistant professor at the University of Texas at San Antonio. Sojeong Nam, PhD, NCC, is an assistant professor at The University of New Mexico. Jeongwoon Jeong, PhD, NCC, is an assistant professor at The University of New Mexico. GoEun Na, PhD, NCC, is an assistant professor at The City University of New York at Hunter College. Jungeun Lee, PhD, LPC, LPC-S, is a clinical professor at the University of Houston. Correspondence may be addressed to Donghun Lee, 501 W. Cesar E. Chavez Blvd., Durango Building 4.304, San Antonio, TX 78207, donghun.lee@utsa.edu.

Oct 31, 2023 | Volume 13 - Issue 3

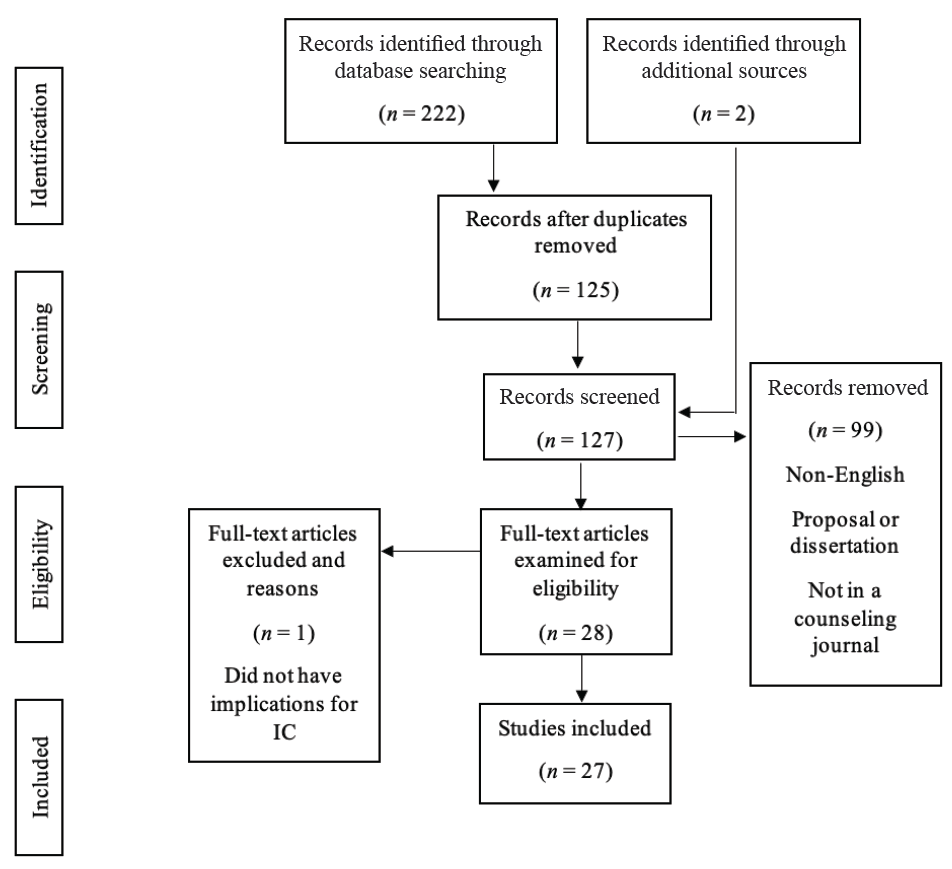

Amanda DeDiego, Rakesh K. Maurya, James Rujimora, Lindsay Simineo, Greg Searls

In the wake of COVID-19, health care providers experienced an immense expansion of telehealth usage across fields. Despite the growth of telemental health offerings, issues of licensing portability continue to create barriers to broader access to mental health care. To address portability issues, the Counseling Compact creates an opportunity for counselors to have privileges to practice in states that have passed compact legislation. Considerations of ethical and legal aspects of counseling in multiple states are critical as counselors begin to apply for privileges to practice through the Counseling Compact. This article explores ethical and legal regulations relevant to telemental health practice in multiple states under the proposed compact system. An illustrative case example and flowcharts offer guidance for counselors planning to apply for Counseling Compact privileges and provide telemental health across multiple states.

Keywords: telehealth, legislation, Counseling Compact, portability, telemental health

Maximizing the use of technology-assisted counseling techniques, telemental health (TMH) is a modality of service delivery that takes the best practices of traditional counseling and adapts practice for delivery via electronic means (National Institute of Mental Health, 2021). Many terms describe TMH, including distance counseling, technology-assisted services, e-therapy, and tele-counseling (Hilty et al., 2017). Counselors have previously been hesitant to adopt technology-supported counseling practice (Richards & Viganó, 2013). Since the onset of the COVID-19 pandemic, TMH use has grown exponentially in applications across various disciplines (Appleton et al., 2021).

Between March and August 2021, out of all telehealth outpatient visits across disciplines, 39% were primarily for a mental health or substance use diagnosis, up from 24% a year earlier, and 11% two years earlier (Lo et al., 2022). During the same period, 55% of clients in rural areas relied on TMH to access outpatient mental health and substance use services compared to 35% of clients in urban areas. Further, TMH has expanded the accessibility of mental health services to underserved populations, including people living in remote areas, and marginalized groups, including sexual minorities, ethnic and racial minorities, and people with disabilities (Hirko et al., 2020). With broader adoption of TMH, existing issues of licensure portability continue to represent barriers to service provision across state lines. Recent state and national legislation that addresses telehealth parity and clarifies language in the provision of telehealth services supports the continued expansion of TMH (Baumann et al., 2020). To address these barriers, a partnership of professional counseling organizations engaged in a decades-long effort to improve portability, leading to the recent creation of the Counseling Compact.

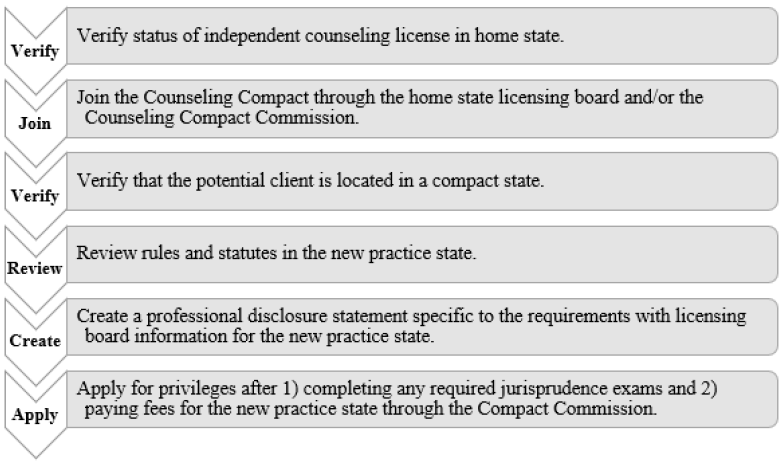

With the Counseling Compact legislation having been successfully passed in far more than the required 10 states, the Counseling Compact Commission has convened to create bylaws and informational systems to manage opening applications for privilege to practice in early 2024 (American Counseling Association [ACA], 2021). As counselors venture into a new era with privileges to practice across state lines, consideration of ethical and legal provisions of practice is critical. This article seeks to offer guidance to counselors on how to practice ethically within the privileges of the Counseling Compact. Exploration of ethical guidelines and legal statutes governing practice, a case example of ethical practice, and tools for establishing an ethical process of practice are offered.

Legal and Ethical Considerations for TMH

Provision of TMH may include use of various technology-supported methods of distance counseling. The National Board for Certified Counselors (NBCC; 2016) differentiates between face-to-face counseling and distance professional services. Face-to-face services include synchronous interaction between individuals or groups of individuals using visual and auditory cues from the real world. Professional distance services utilize technology or other methods, such as telephones or computers, to deliver services like guidance, supervision, advice, or education.

NBCC (2016) also differentiates counseling services from supervision and consultation for counselors. Counseling represents a working partnership that enables various people, families, and groups to achieve their goals in terms of mental health, well-being, education, and employment. This type of professional relationship differs from supervision, in that supervision is a formal, hierarchical arrangement involving two or more professionals. Consultation is an intentional collaboration between two or more experts to improve the efficiency of professional services with respect to a particular person. NBCC also offers guidance in defining various modalities for the provision of TMH related to counseling, supervision, or consultation (see Table 1). TMH can enhance accessibility to mental health services (Maurya et al., 2020). Barriers to care via TMH include lack of access to video-sharing technologies (e.g., tablet, personal computer, laptop), reliable internet access, private space for using TMH, and adaptive equipment for people with disabilities, as well as a lack of overall digital literacy (Health Resources & Services Administration, 2022). However, with some shared resources and community partnerships, these barriers can be addressed, and TMH can offer broad access to mental health professionals with various expertise and specialty training to begin addressing health disparities (Abraham et al., 2021). Another barrier is the lack of mental health professionals offering TMH services, in part due to challenges in counselor licensing (Mascari & Webber, 2013). TMH barriers also include challenges in counselor training as well as technical and administrative support to implement TMH services with certain client populations (Shreck et al., 2020; Siegel et al., 2021).

Table 1

Common Modalities for Provision of Telemental Health

| Modality | Definition |
| --- | --- |
| Telephone-based | Information is conveyed across synchronous distance interactions using audio techniques. |
| Email-based | Asynchronous distance interaction in which information is transmitted via written text messages or email. |
| Chat-based | Synchronous distance interaction in which information is received via written messages. |
| Video-based | Synchronous distance interaction in which information is received by video and/or audio mechanisms. |
| Social network | Synchronous or asynchronous distance interaction in which information is exchanged via social networking mechanisms. |

Navigating Laws and Ethics

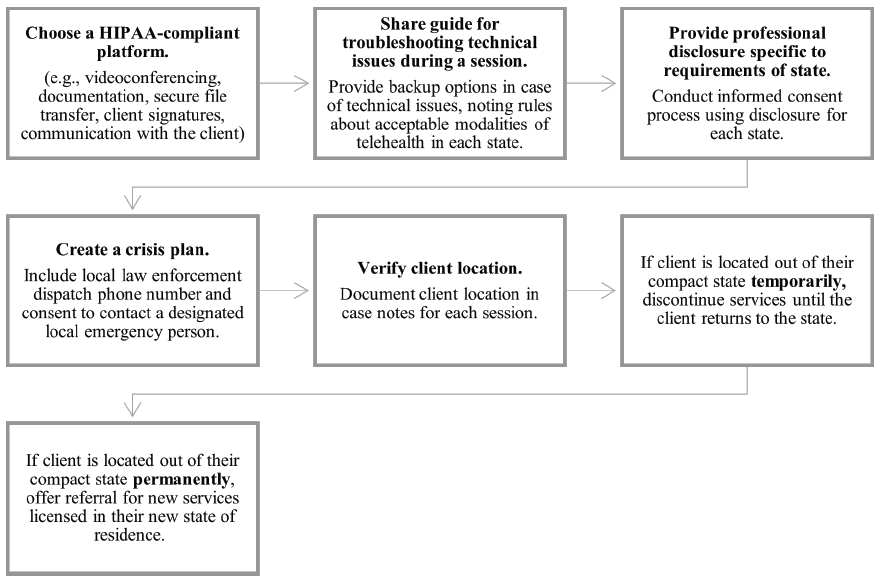

As part of responsible practice, counselors who engage in TMH need to weigh ethical considerations and risks. The ACA Code of Ethics (2014) dedicates Section H to “Distance Counseling, Technology, and Social Media” (p. 17). This section was added in the 2014 iteration of the ethical code in recognition of the fact that TMH was a growing tool for the counseling profession (Dart et al., 2016). These ethical guidelines address competency, regulations, use of distance counseling tools, and online practice. According to the most recent counselor liability report from the Healthcare Providers Service Organization (2019), the most common reasons for licensing board complaints within the last 5 years are issues related to sexual misconduct, failure to maintain professional standards, and confidentiality breaches.

Mental health professionals adhere to federal and state laws regarding privacy and security of information stored electronically (Dart et al., 2016). Such federal and state laws have been enacted to protect the privacy and security of information, and counselors must adhere to them in order to avoid legal ramifications (Dart et al., 2016). The ACA Code of Ethics (2014) provides guidelines for counselors to consider when in ethical dilemmas. For example, the ACA Code of Ethics advises counselors to adhere to the laws and regulations of the practice location of the counselor and the client’s place of residence. When working within a counselor’s statutory legislation and regulation, the ACA Code of Ethics directs counselors to address conflicts related to laws and ethics through ethical decision-making models. There are several models for counselors to follow (Levitt et al., 2015; Remley & Herlihy, 2010; Sheperis et al., 2016). Counselors, then, should be familiar with these ethical decision-making models to address ethical dilemmas with consideration of legal statutes in their state of practice. The ACA Code of Ethics strongly aligns with the NBCC Policy Regarding the Provision of Distance Professional Services (2016) providing guidance for National Certified Counselors (NCCs).

Counselors need to ensure compliance with applicable state and federal law in the provision of TMH services. For instance, the Health Insurance Portability and Accountability Act (HIPAA) is a federal law that establishes legal rules in the security and privacy of medical records, and the electronic storage and transmission of protected health information (Dart et al., 2016). Counselors need to select HIPAA-compliant software and technologies to maintain the security, privacy, and confidentiality of electronic client information. For example, regarding videoconferencing, Skype is not HIPAA-compliant (Churcher, 2012), but VSee and several other vendors have the appropriate level of encryption to meet HIPAA standards for compliance (Dart et al., 2016). Once HIPAA-compliant platform usage is established, counselors need to implement and collect the following information related to clinical documentation: 1) verbal or written consent from client or representative of client in the case of minors or those declared legally unable to provide consent; 2) category of the office visit (e.g., audio with video or audio/telephone only based on acceptable practice in that state); 3) date of last visit or billable visit; 4) physical location of client; 5) counselor location; 6) names and roles of participants (including any potential third parties); and 7) length of visit (Smith et al., 2020). Finally, as part of HIPAA standards, a vendor must offer a Business Associate Agreement (BAA), which demonstrates that the software or tool aligns with HIPAA encryption and privacy standards in communication with clients, transmission of data, and storage of client data (U.S. Department of Health & Human Services [HHS], 2013).
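The seven documentation items listed above can be sketched as a simple record with a completeness check. This is a minimal illustration only, not a compliance tool or a representation of any vendor's software; the class name, field names, and the `missing_items` helper are hypothetical and were chosen for this example.

```python
from dataclasses import dataclass, field, fields
from datetime import date
from typing import List, Optional, Tuple


@dataclass
class TelehealthVisitRecord:
    """Hypothetical record mirroring the seven documentation items in the text."""
    consent_obtained: Optional[bool] = None       # 1) verbal or written consent
    visit_category: Optional[str] = None          # 2) e.g., "audio+video" or "audio-only"
    last_visit_date: Optional[date] = None        # 3) date of last visit or billable visit
    client_location: Optional[str] = None         # 4) physical location of client
    counselor_location: Optional[str] = None      # 5) counselor location
    participants: List[Tuple[str, str]] = field(default_factory=list)  # 6) (name, role)
    length_minutes: Optional[int] = None          # 7) length of visit


def missing_items(record: TelehealthVisitRecord) -> List[str]:
    """Return the names of items that are still undocumented."""
    missing = [f.name for f in fields(record)
               if getattr(record, f.name) in (None, "", [])]
    # Consent that was explicitly recorded as not obtained is also incomplete.
    if record.consent_obtained is False:
        missing.append("consent_obtained")
    return missing


record = TelehealthVisitRecord(
    consent_obtained=True,
    visit_category="audio+video",
    client_location="State B",   # placeholder, not a real jurisdiction
)
print(missing_items(record))
```

A check like this only confirms that each item has been recorded; whether the content of each item satisfies state or federal requirements remains a professional judgment.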

During the COVID-19 federal state of emergency, the Office for Civil Rights, tasked with enforcement of HIPAA regulations related to telehealth, exercised discretion and declared the agency would not impose penalties on counselors for lack of compliance in the provision of telehealth, assuming the counselor demonstrates a good faith effort to adhere to standards (HHS, 2021). These guidelines related to the discretion of enforcement cited the use of teleconferencing or chat tools without the adequate level of encryption as allowable, but only during the declared state of emergency.

Ethical Considerations

The scope of practice for counselors varies depending on state licensure laws. It is critical that counselors be familiar with and act in accordance with state licensing laws. For example, if a client is based in a specific physical location, then the counselor needs to adhere to the licensing regulations and scope of practice in that physical location. Some states require licensure where the counselor is located as well as where the client is located. However, there is a lack of specificity in state licensure requirements related to the demonstration of competence with telehealth-specific practices (Williams et al., 2021).
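The location logic in this paragraph can be expressed as a small check. The sketch below is a simplification under stated assumptions: the function name, parameters, and the "State A"/"State B" placeholders are hypothetical, and whether the counselor's own location also requires licensure is passed in as a flag that the counselor would set after consulting the relevant licensing boards. It does not encode any actual state's rules.

```python
def check_authorization(client_state, counselor_state, authorized_states,
                        counselor_location_license_required=False):
    """Return (allowed, reasons) for a proposed telemental health session.

    authorized_states: jurisdictions where the counselor holds a license
    or a privilege to practice (assumed to be known and current).
    """
    reasons = []
    # The counselor follows the rules of the client's physical location.
    if client_state not in authorized_states:
        reasons.append(
            "not authorized to practice where the client is located: " + client_state)
    # Some states also require licensure where the counselor is located.
    if counselor_location_license_required and counselor_state not in authorized_states:
        reasons.append(
            "not authorized to practice where the counselor is located: " + counselor_state)
    return len(reasons) == 0, reasons


allowed, reasons = check_authorization(
    client_state="State B",
    counselor_state="State A",
    authorized_states={"State A"},
)
print(allowed, reasons)
```

Returning the reasons alongside the yes/no answer keeps the output useful as a prompt for follow-up with a licensing board rather than as a final determination.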

To be able to provide mental health counseling services, mental health counselors need to consider their scope of practice based on state licensure guidelines where the client is located. This scope of practice is defined by the licensing standards of each state. Beyond scope of practice, counselors also need to consider boundaries of competence. The ACA Code of Ethics (2014) is nonspecific about how counselors demonstrate competence, only stating that they should “practice within the boundaries of their competence, based on their education, training, supervised experience and state and national professional credentials” (p. 8). Because of the lack of specificity in state telehealth practices, unless state licensure guidelines explicitly prohibit or advocate for specific telehealth practices, counselors may need to clarify interpretation of statutes or rules with licensure boards to determine specific telehealth practices.

Inherent in a counselor’s responsibility is their ability to screen clients for the appropriateness of telehealth services (Sheperis & Smith, 2021). Counselors are advised to determine whether clients have characteristics that may render them inappropriate for telehealth services, and then to make appropriate referrals (Morland et al., 2015). Some clients may not be appropriate for telehealth because of their (a) inability to access specific technology, (b) rejection of technology during the informed consent process, (c) severe psychosis, (d) mood dysregulation, (e) suicidal or homicidal tendencies, (f) substance use disorder, or (g) cognitive or sensory impairment (Sheperis & Smith, 2021). Finally, counselors are advised to utilize age- and developmentally appropriate strategies for children, adolescents, and older adults (NBCC, 2016; Richardson et al., 2009).
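As an illustration of how the screening characteristics (a) through (g) might be tracked at intake, the sketch below collects any that are present. It is not a clinical decision tool: the dictionary keys and the `screening_flags` function are hypothetical, and a returned flag is meant only to prompt the counselor's own judgment about appropriateness and referral.

```python
# Characteristics listed in the text that may make telehealth inappropriate.
SCREENING_CHARACTERISTICS = {
    "no_technology_access": "(a) inability to access specific technology",
    "rejects_technology": "(b) rejection of technology during informed consent",
    "severe_psychosis": "(c) severe psychosis",
    "mood_dysregulation": "(d) mood dysregulation",
    "suicidal_or_homicidal": "(e) suicidal or homicidal tendencies",
    "substance_use_disorder": "(f) substance use disorder",
    "cognitive_or_sensory_impairment": "(g) cognitive or sensory impairment",
}


def screening_flags(intake):
    """Return the labels of all characteristics marked present in `intake`.

    `intake` is a dict mapping the keys above to True/False; missing keys
    are treated as not present.
    """
    return [label for key, label in SCREENING_CHARACTERISTICS.items()
            if intake.get(key)]


flags = screening_flags({"severe_psychosis": True, "substance_use_disorder": False})
print(flags)
```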

Once counselors have appropriately screened clients for services, informed consent is the next step. When counselors provide technology-assisted services, they are tasked to make reasonable efforts to determine clients’ intellectual, emotional, physical, linguistic, and functional capabilities while also appropriately assessing the needs of the client (ACA, 2014). When working with children, counselors need to know the age of the child or adolescent and the client’s legal ability to provide consent (Kramer & Luxton, 2016). Age of consent laws vary between states, so counselors need to familiarize themselves with their specific state legislation. This information is critical for the informed consent process and for determining emergency procedures in case of a crisis (Kramer & Luxton, 2016). Counselors then need to complete an informed consent process that acknowledges the practice of TMH services.

In the informed consent process, it is imperative that counselors disclose risks related to TMH such as accessibility to technology, technology failure, and data breaches (ACA, 2014). Counselors are required to provide information related to procedures, goals, treatment plans, risks, benefits, and costs of services as part of the informed consent process (Jacob et al., 2011). Other topics counselors may want to address during the informed consent process include confidentiality and limits of TMH; emergency plans; documentation and storage of information; technological failures; contact between sessions, if any; and termination and referrals (Turvey et al., 2013).

Client Crisis Plans

There are specific steps to ensure appropriate emergency management practices when working with clients via telehealth (Sheperis & Smith, 2021). For example, at intake, counselors could take the following steps: (a) verify the client’s identity and contact information; (b) verify the current location of the client and their residential address; (c) inquire about other health care providers; (d) navigate a conversation regarding contact during emergency and non-emergency situations; and (e) implement a safety plan, if needed (Sheperis & Smith, 2021; Shore et al., 2018). Moreover, counselors need to stay up to date with local, state, and federal requirements related to the duty to warn and protect (Kramer et al., 2015).

For clients and counselors operating in separate cities or states, it is necessary for counselors to gather local law enforcement and emergency service contact information and maintain a plan of action in case it is needed (Shore et al., 2007). Counselors are also advised to plan for service interruptions if and when technical issues arise during a crisis situation (Kramer et al., 2015). Aside from emergency management practices, counselors who engage clients during a crisis still need to apply basic counseling techniques such as unconditional positive regard, congruence, and empathy (Litam & Hipolito-Delgado, 2021). Once a counselor establishes a client’s psychological safety, they can begin to work collaboratively with the client to reestablish safety and predictability; defuse emotions; validate experiences; create specific, objective, and measurable goals; and identify any resources and coping mechanisms (Litam & Hipolito-Delgado, 2021).

Licensure Portability

In the wake of the COVID-19 pandemic, as states of emergency were declared at the state and national levels, licensing requirements were waived to allow medical professionals to offer continuity of care via telehealth (Slomski, 2020). These time-bound waivers of practice highlighted the need for licensure portability, especially for counselors, even though in many of these states the waivers were difficult to obtain and could be withdrawn at any point when the state of emergency was rescinded. The widened use of telehealth during the COVID-19 pandemic amplified the growing calls for long-term licensure portability options for counselors.

In the United States, counselors experience challenges in transferring licensure between states, as counseling licensure standards vary from state to state (Mascari & Webber, 2013). The profession of counseling, although a relatively new field compared with other helping professions such as psychology and social work, has been working toward licensure portability over the past 30 years. Since its inception in 1986, the American Association of State Counseling Boards (AASCB) has been focused on advocacy efforts to establish consistency in counseling licensing standards and avenues for licensing portability across states (AASCB, 2022). To advance toward this goal, AASCB first partnered with organizations such as ACA, the Council for Accreditation of Counseling and Related Educational Programs (CACREP), and NBCC. Together these groups established the professional identity of counselors through a unified definition of counseling as a profession (Kaplan & Kraus, 2018), as well as consistent training standards for professional counselors across the nation (Bobby, 2013).

In an effort to promote a unified counselor identity and facilitate licensure portability, the 20/20 initiative (Kaplan & Gladding, 2011) included an oversight committee composed of stakeholders from various organizations to develop a consensus definition of the profession, address prominent issues facing the profession at the time, and develop principles to guide advocacy work in strengthening the counseling profession (Kaplan & Gladding, 2011; Kaplan & Kraus, 2018). Licensure portability was identified as one of the key issues critical for the future of the profession. This issue persisted, with various states assigning different licensure titles, guidelines, requirements, and continuing education standards. CACREP, which merged with the Council on Rehabilitation Education in 2017, promulgates common training standards across specialty areas that are widely used as guidelines for counselor licensure (CACREP, 2017). There are various licensure portability models currently used in medical fields: (a) the nonprofit organization model, (b) the mutual recognition model, (c) the licensure language model, (d) the federal model, and (e) the national model (Bohecker & Eissenstat, 2019). In early efforts, Bloom et al. (1990) proposed model licensure language that could be used to establish national licensing standards, which was an effort toward portability under the licensure language model. AASCB previously tried to move toward a national portability system through the nonprofit organization model by establishing the National Credential Registry, which is a central repository for counselor education, supervision, exams, and other information relevant to state licensure (Bohecker & Eissenstat, 2019). More recently, the effort to establish a Counseling Compact for licensure portability under the mutual recognition model gained considerable momentum during the COVID-19 pandemic (AASCB, 2022).

Licensing Compacts in Medicine and Allied Professions

The National Center for Interstate Compacts (NCIC) provides technical assistance in developing and establishing interstate compact agreements. According to NCIC, interstate compact agreements are legal agreements between the governments of two or more states to address common issues or achieve common goals. Counseling is not the first health profession to pursue a licensing compact. Interstate compacts for medicine and allied professions have already been established (Litwak & Mayer, 2021). Prior to current efforts for the Counseling Compact, similar legislation introduced compacts for physicians (Adashi et al., 2021), registered nurses (Evans, 2015), physical therapists (Adrian, 2017), psychologists (Goodstein, 2012), speech pathologists (Morgan et al., 2022), and emergency medical personnel (Manz, 2015). Other efforts to pass licensing compacts are underway for social workers (Apgar, 2022) and nurse practitioners (Evans, 2015). These compact models use either multistate licensing (MSL) or privilege-to-practice (PTP) structures. A single multistate license obtained through the MSL model would allow a practitioner to practice equally in all member states, whereas under the PTP model a practitioner would be licensed in their designated home state and then granted specific privileges to use that license in other member states (Counseling Compact, n.d.).

MSL compacts issue licenses that are effective in multiple states. The MSL model is used for the Nurse Licensure Compact (Interstate Commission of Nurse Licensure Compact Administrators, 2021; National Council of State Boards of Nursing, 2015). Nurses licensed within this compact system gain multistate licenses across all member states. The Nurse Licensure Compact legislation notes efforts to reduce redundancies in nursing licensure by using an MSL model. Draft legislation within the Nurse Licensure Compact MSL system defines a “multistate license” as a license awarded in a home state that also allows a nurse to practice in all other member states under that multistate license. This includes both in-person and remote practice. For example, a nurse in a compact state can be vetted and licensed through the central compact system, which allows traveling nurses to switch between placements rapidly without additional licensing in compact states. On the other hand, non-compact states issue a “single state license,” which does not allow practice across states.

The PTP licensing model is used by physical therapy and EMS professionals. PTP establishes an agreement between member states to grant counselors legal authorization to practice (NCIC, 2020). Unlike in the MSL structure, counseling licensure is still maintained by a single state, or “home state,” but member states allow privileges to practice with clients located in other states as part of the compact agreement. This licensing model includes the definition of a “single state license,” which indicates that licenses issued by the state do not by default allow practice in any state other than the home state (Interstate Commission of Nurse Licensure Compact Administrators, 2021). Further, definitions include “privilege to practice,” which provides legal authorization to practice in each designated remote state. The Counseling Compact uses this PTP model for portability of licensing privileges across member states (Counseling Compact, 2020).

The Counseling Compact

Development of the Counseling Compact began in 2019 as a solution to the challenges of licensure portability. Historically, navigating varying licensure standards across states represented a barrier to the portability of counseling professionals and access to services for the community (Mascari & Webber, 2013). To address these barriers, organizations including NBCC (2017), ACA (2018), the American Mental Health Counselors Association (2021), and AASCB (2022) have worked to unify the profession, establish common minimum licensing standards across states, and create and promote the Counseling Compact. With the support of NCIC, draft legislation for a PTP compact was developed by the end of 2020, followed by advocacy efforts to pass legislation in a minimum of 10 states to begin the process of establishing the Counseling Compact (ACA, 2021). As of October 2023, the Counseling Compact has been enacted into law in a growing list of states, including Alabama, Arkansas, Colorado, Connecticut, Delaware, Florida, Georgia, Indiana, Iowa, Kansas, Kentucky, Louisiana, Maine, Maryland, Mississippi, Missouri, Montana, Nebraska, New Hampshire, North Carolina, North Dakota, Ohio, Oklahoma, Tennessee, Utah, Vermont, Virginia, Washington, West Virginia, and Wyoming, with legislation pending in several other states (Counseling Compact, 2022).

Compact Standards